Distance Metrics: Euclidean, Manhattan, Minkowski, Oh My!

Looking to understand the most commonly used distance metrics in machine learning? This guide will help you learn all about Euclidean, Manhattan, and Minkowski distances, and how to compute them in Python.

Image by Author

If you’re familiar with machine learning, you know that data points in the data set and the subsequently engineered features are all points (or vectors) in an n-dimensional space.

The distance between two points is a measure of how similar they are: the smaller the distance, the more similar the points. Supervised learning algorithms such as K-Nearest Neighbors (KNN) and clustering algorithms such as K-Means use distance metrics to quantify the similarity between data points.

In clustering, the distance metric is used to group similar data points together, whereas in KNN it is used to find the K points closest to a given data point.
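To make the KNN use case concrete, here is a minimal sketch that finds the K closest points to a query point with SciPy's cdist function. The sample points, the query, and the value of k are made up for illustration.

```python
import numpy as np
from scipy.spatial import distance

# Hypothetical data set of five two-dimensional points
points = np.array([[1, 1], [2, 2], [5, 5], [6, 5], [9, 9]])
query = np.array([[5, 4]])  # cdist expects two-dimensional arrays
k = 2

# Euclidean distance from the query to every point in the data set
dists = distance.cdist(query, points, metric="euclidean")[0]

# Indices of the k smallest distances, closest first
nearest = np.argsort(dists)[:k]
print(points[nearest])
```

For this data, the two nearest neighbors of (5, 4) are (5, 5) and (6, 5).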

In this article, we’ll review the properties of distance metrics and then look at the most commonly used distance metrics: Euclidean, Manhattan and Minkowski. We’ll then cover how to compute them in Python using built-in functions from the scipy module.

Let’s begin!

Properties of Distance Metrics

Before we learn about the various distance metrics, let's review the properties that any distance metric in a metric space should satisfy [1]:

1. Symmetry

If x and y are two points in a metric space, then the distance between x and y should be equal to the distance between y and x: d(x,y) = d(y,x).

2. Non-negativity

Distances should always be non-negative, that is, greater than or equal to zero: d(x,y) ≥ 0.

The above inequality holds with equality (d(x,y) = 0) if and only if x and y denote the same point, i.e., x = y.

3. Triangle Inequality

Given three points x, y, and z, the distance metric should satisfy the triangle inequality, that is, d(x,z) ≤ d(x,y) + d(y,z):

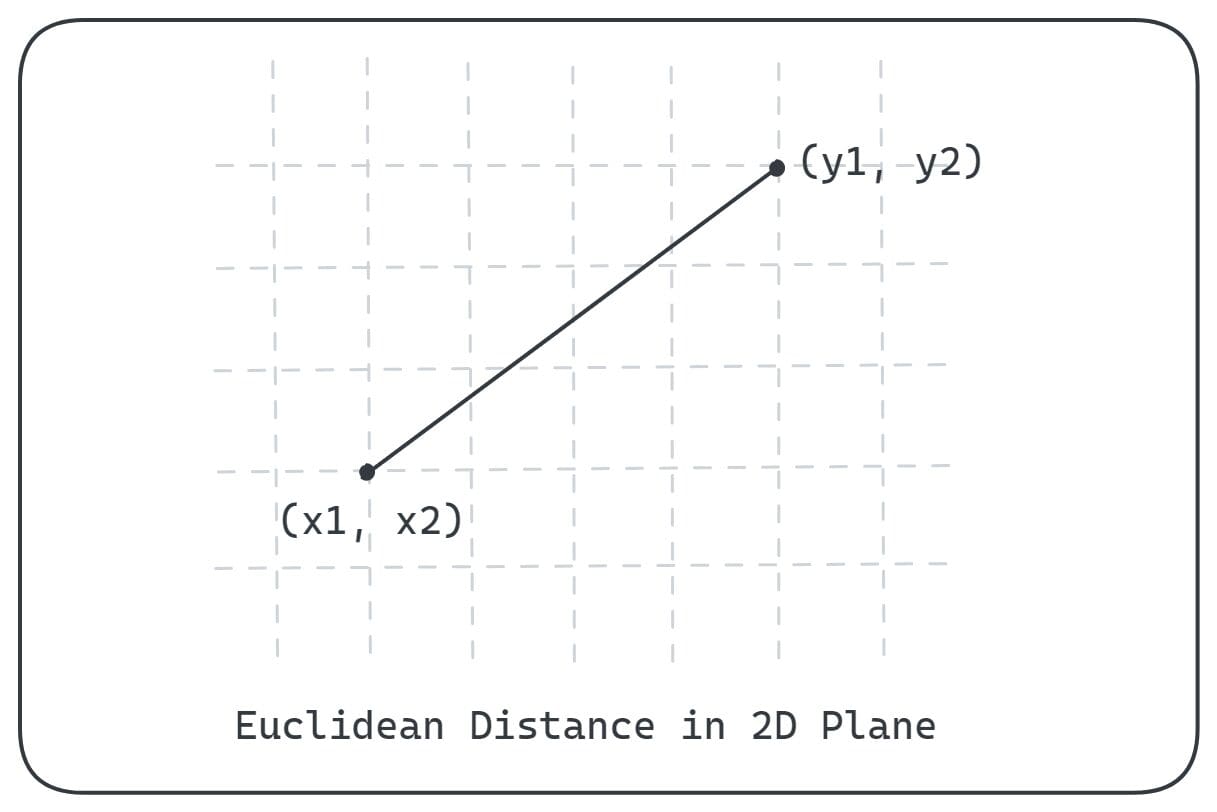
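As a quick sanity check, the three properties can be tested numerically on a handful of points. The sketch below uses SciPy's euclidean function and three arbitrary sample points. Passing on a few points is not a proof, only an illustration of what each property says.

```python
import math
from itertools import permutations
from scipy.spatial import distance

# Three arbitrary sample points (hypothetical values)
pts = [[3, 6, 9], [1, 0, 1], [4, 4, 4]]
d = distance.euclidean

checks = []
for x, y, z in permutations(pts, 3):
    symmetric = math.isclose(d(x, y), d(y, x))
    non_negative = d(x, y) >= 0
    zero_for_same_point = d(x, x) == 0
    # Small tolerance guards against floating-point rounding
    triangle = d(x, z) <= d(x, y) + d(y, z) + 1e-12
    checks.append(symmetric and non_negative and zero_for_same_point and triangle)

print(all(checks))
```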

Euclidean Distance

Euclidean distance is the length of the straight line segment joining two points, which is the shortest path between them in Euclidean space. Consider two points x and y in a two-dimensional plane with coordinates (x1, x2) and (y1, y2), respectively.

The Euclidean distance between x and y is shown:

Image by Author

This distance is given by the square root of the sum of the squared differences between the corresponding coordinates of the two points. Mathematically, the Euclidean distance between the points x and y in the two-dimensional plane is given by:

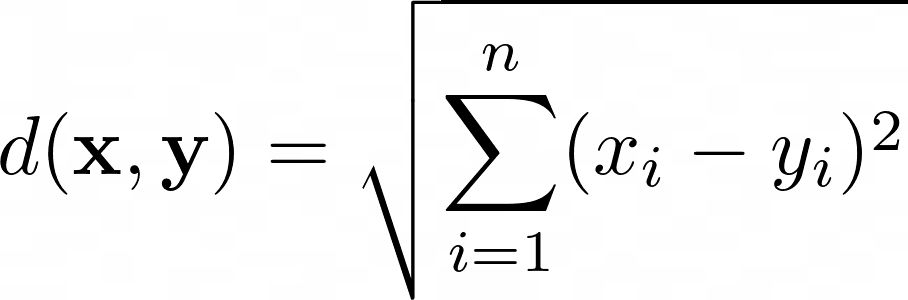

Extending to n dimensions, where the points x and y are of the form x = (x1, x2, …, xn) and y = (y1, y2, …, yn), the Euclidean distance is given by:

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

Computing Euclidean Distance in Python

The Euclidean distance and the other distance metrics in this article can be computed using convenience functions from the spatial module in SciPy.

As a first step, let’s import distance from SciPy’s spatial module:

from scipy.spatial import distance

We then initialize two points x and y like so:

x = [3,6,9]

y = [1,0,1]

We can use the euclidean convenience function to find the Euclidean distance between the points x and y:

print(distance.euclidean(x,y))

Output >> 10.198039027185569
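To connect the SciPy result back to the definition (the square root of the sum of squared coordinate differences), here is a small from-scratch version using only the standard library. The function name euclidean_distance is a hypothetical helper, not part of SciPy.

```python
import math

def euclidean_distance(a, b):
    """Square root of the sum of squared coordinate differences."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

x = [3, 6, 9]
y = [1, 0, 1]

# (3-1)^2 + (6-0)^2 + (9-1)^2 = 4 + 36 + 64 = 104
print(euclidean_distance(x, y))
```

The result is the square root of 104, roughly 10.198, which agrees with the value returned by distance.euclidean.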

Manhattan Distance

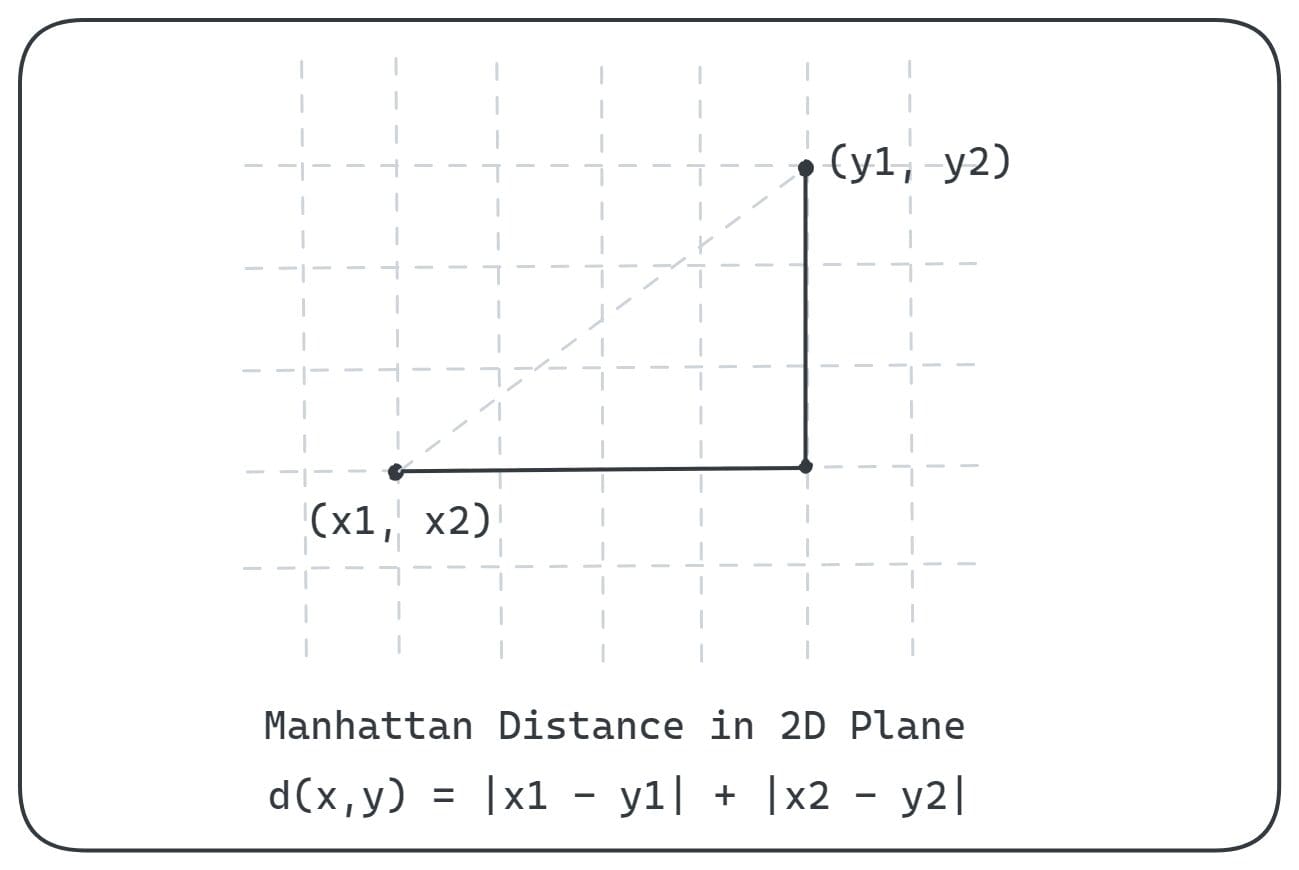

The Manhattan distance, also called taxicab distance or cityblock distance, is another popular distance metric. Suppose you’re inside a two-dimensional plane and you can move only along the axes as shown:

Image by Author

The Manhattan distance between the points x and y is given by:

$$d(x, y) = |x_1 - y_1| + |x_2 - y_2|$$

In n-dimensional space, where each point has n coordinates, the Manhattan distance is given by:

$$d(x, y) = \sum_{i=1}^{n} |x_i - y_i|$$

Although the Manhattan distance is never shorter than the straight-line (Euclidean) distance between two points, it is often preferred in applications where the feature points are located in a high-dimensional space [3].
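One way to explore this behavior yourself is to measure how well each metric separates the nearest and farthest neighbors of a query point. The sketch below computes a relative contrast, (max − min) / min, for Manhattan and Euclidean distances on random data. The data, dimensions, and the helper relative_contrast are assumptions for illustration, not a reproduction of the experiments in [3].

```python
import numpy as np
from scipy.spatial import distance

rng = np.random.default_rng(0)
points = rng.random((200, 100))  # 200 random points in 100 dimensions
query = rng.random((1, 100))     # a single random query point

def relative_contrast(metric):
    """(farthest - nearest) / nearest distance from the query."""
    d = distance.cdist(query, points, metric=metric)[0]
    return (d.max() - d.min()) / d.min()

contrast_l1 = relative_contrast("cityblock")
contrast_l2 = relative_contrast("euclidean")
print("Manhattan contrast:", contrast_l1)
print("Euclidean contrast:", contrast_l2)
```

A larger contrast means the metric distinguishes near points from far points more sharply. Try varying the number of dimensions to see how the two values change.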

Computing Manhattan Distance in Python

We retain the import and x and y from the previous example:

from scipy.spatial import distance

x = [3,6,9]

y = [1,0,1]

To compute the Manhattan (or cityblock) distance, we can use the cityblock function:

print(distance.cityblock(x,y))

Output >> 16
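To see where this number comes from, the sketch below breaks the computation into steps: take the absolute difference along each coordinate, then add them up. The variable names are illustrative only.

```python
x = [3, 6, 9]
y = [1, 0, 1]

# Absolute difference along each axis
abs_diffs = [abs(xi - yi) for xi, yi in zip(x, y)]
print(abs_diffs)   # [2, 6, 8]

# Manhattan distance is the sum of those per-axis differences
manhattan = sum(abs_diffs)
print(manhattan)   # 16
```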

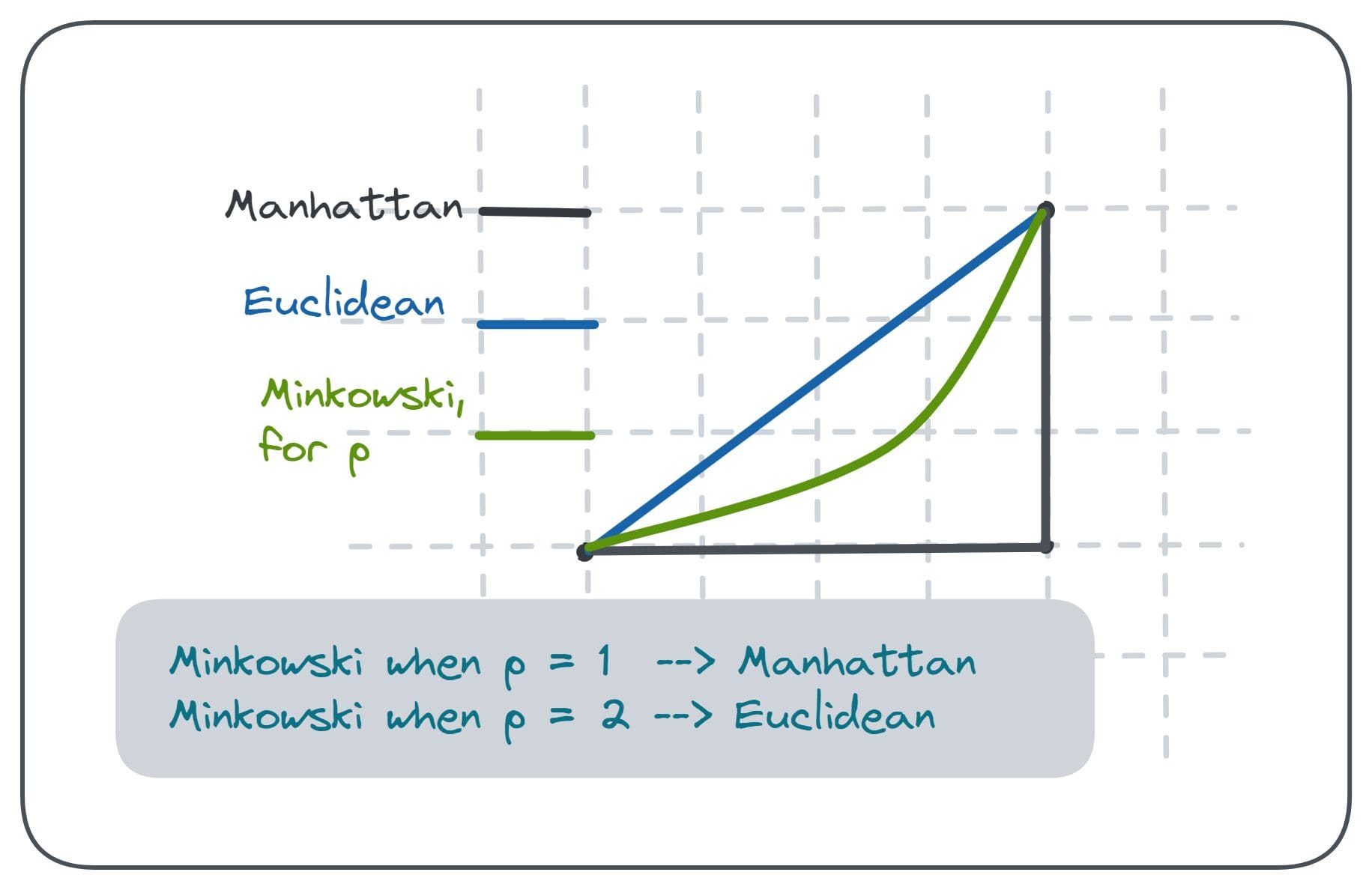

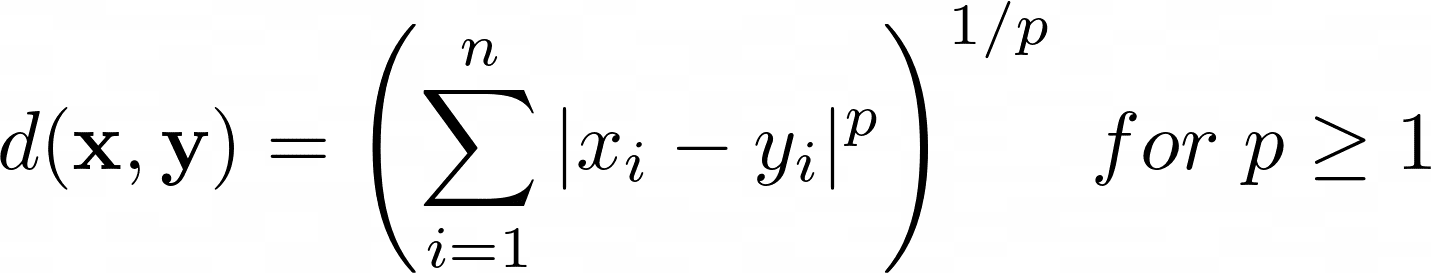

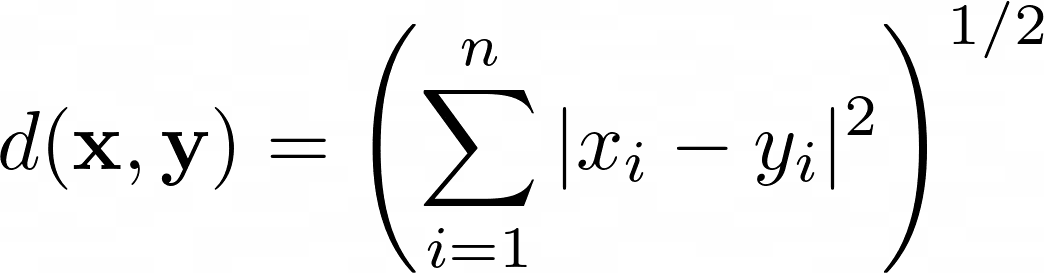

Minkowski Distance

Named after the German mathematician Hermann Minkowski [2], the Minkowski distance of order p (with p ≥ 1) in a normed vector space is given by:

$$d(x, y) = \left( \sum_{i=1}^{n} |x_i - y_i|^p \right)^{1/p}$$

For p = 1, the Minkowski distance equation takes the same form as that of the Manhattan distance:

$$d(x, y) = \sum_{i=1}^{n} |x_i - y_i|$$

Similarly, for p = 2, the Minkowski distance is equivalent to the Euclidean distance:

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

Computing Minkowski Distance in Python

Let’s compute the Minkowski distance between the points x and y:

from scipy.spatial import distance

x = [3,6,9]

y = [1,0,1]

In addition to the two points (arrays) between which the distance is calculated, the minkowski function takes the parameter p, the order of the distance:

print(distance.minkowski(x,y,p=3))

Output >> 9.028714870948003

To verify that the Minkowski distance reduces to the Manhattan distance for p = 1, let’s call the minkowski function with p set to 1:

print(distance.minkowski(x,y,p=1))

Output >> 16.0

Let’s also verify that Minkowski distance for p = 2 evaluates to the Euclidean distance we computed earlier:

print(distance.minkowski(x,y,p=2))

Output >> 10.198039027185569
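The same checks can be written from scratch. The sketch below assumes the usual definition of the Minkowski distance, the p-th root of the sum of absolute coordinate differences each raised to the power p. The function minkowski_distance is a hypothetical helper, not SciPy's implementation.

```python
import math

def minkowski_distance(a, b, p):
    """p-th root of the sum of |a_i - b_i| ** p."""
    return sum(abs(ai - bi) ** p for ai, bi in zip(a, b)) ** (1 / p)

x = [3, 6, 9]
y = [1, 0, 1]

print(minkowski_distance(x, y, 1))  # matches the Manhattan distance
print(minkowski_distance(x, y, 2))  # matches the Euclidean distance
print(minkowski_distance(x, y, 3))
```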

If you’re familiar with normed vector spaces, you may have noticed the similarity between the distance metrics discussed here and Lp norms: the Euclidean, Manhattan, and Minkowski distances are the L2, L1, and Lp norms, respectively, of the difference vector x − y.
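This connection to norms can be checked with NumPy's linalg.norm function, which computes the Lp norm of a vector when given the ord argument. The sketch below compares the norm of the difference vector with SciPy's minkowski function for a few values of p. The list name matches is illustrative only.

```python
import numpy as np
from scipy.spatial import distance

x = np.array([3, 6, 9])
y = np.array([1, 0, 1])
diff = x - y  # the difference vector

matches = []
for p in (1, 2, 3):
    norm = np.linalg.norm(diff, ord=p)    # Lp norm of x - y
    dist = distance.minkowski(x, y, p=p)  # Minkowski distance of order p
    matches.append(bool(np.isclose(norm, dist)))
    print(p, norm, dist)

print(matches)
```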

Summing Up

That's all for this tutorial. I hope you’ve now gotten the hang of the common distance metrics. As a next step, you can try playing around with the different metrics you’ve learned the next time you train machine learning algorithms.

If you’re looking to get started with data science, check out this list of GitHub repositories to learn data science. Happy learning!

References and Further Reading

[1] Metric Spaces, Wolfram MathWorld

[2] Minkowski Distance, Wikipedia

[3] On the Surprising Behavior of Distance Metrics in High Dimensional Space, C. C. Aggarwal et al.

[4] SciPy Distance Functions, SciPy Docs

Bala Priya C is a technical writer who enjoys creating long-form content. Her areas of interest include math, programming, and data science. She shares her learning with the developer community by authoring tutorials, how-to guides, and more.