Calculate Computational Efficiency of Deep Learning Models with FLOPs and MACs

In this article, we will learn what FLOPs and MACs are, how they differ, and how to calculate them using Python packages.

What are FLOPs and MACs?

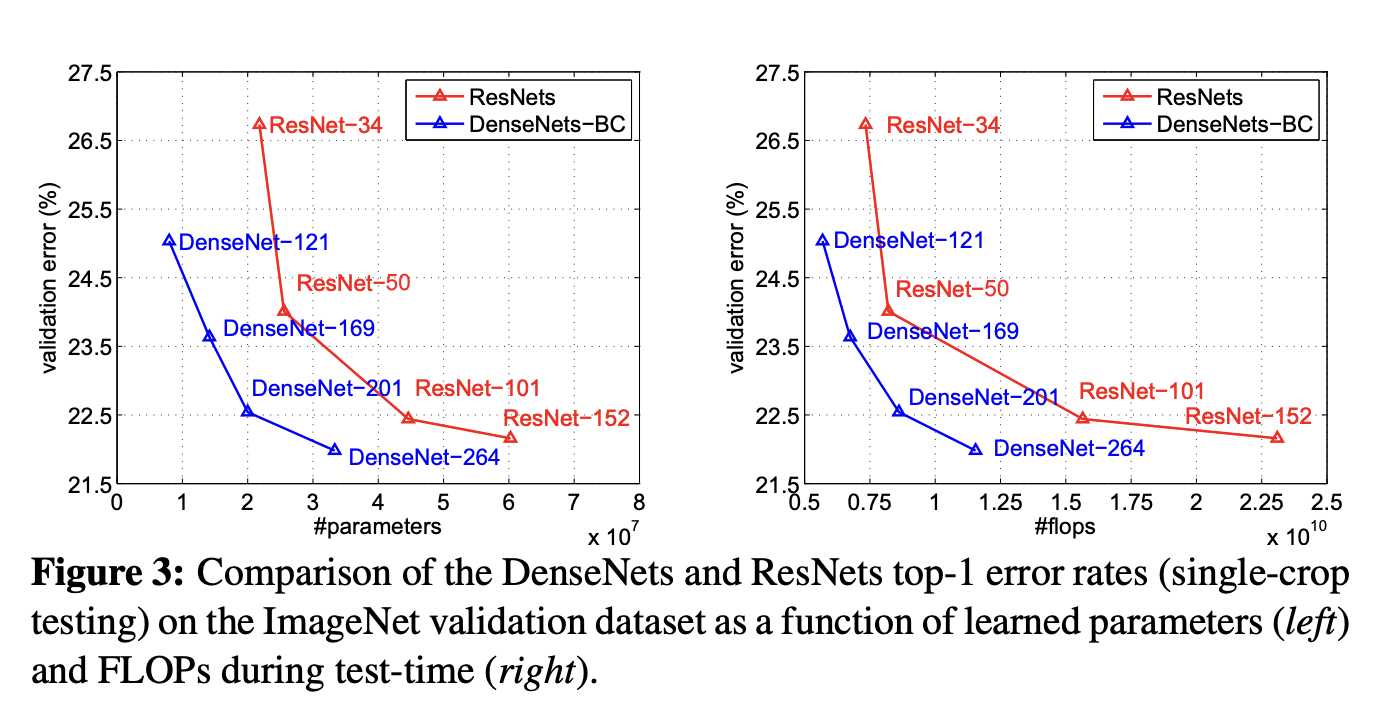

FLOPs (floating-point operations) and MACs (multiply-accumulate operations) are metrics commonly used to measure the computational complexity of deep learning models. They offer a quick and easy way to gauge the number of arithmetic operations a given computation requires. For example, when comparing model architectures such as MobileNet or DenseNet for edge devices, practitioners use MACs or FLOPs to estimate each model's computational cost. We say "estimate" because both metrics are approximations rather than measurements of a model's actual runtime performance. Even so, they provide useful insight into computational requirements and energy consumption, which matters a great deal in edge computing.

Figure 1: Comparison of different neural nets using FLOPs from “Densely Connected Convolutional Networks”

FLOPs specifically count floating-point operations: additions, subtractions, multiplications, and divisions on floating-point numbers. These operations are everywhere in the mathematics of machine learning, for example in matrix multiplications, activations, and gradient calculations. FLOPs are therefore often used to measure the computational cost or complexity of a model, or of a specific operation within it, whenever we need an estimate of the total arithmetic work required.

MACs, on the other hand, count only multiply-accumulate operations, each of which multiplies two numbers and adds the product to a running sum (the accumulator). This operation is fundamental to many linear algebra routines, such as matrix multiplications, convolutions, and dot products. MACs are therefore often used as a more specific measure of computational complexity for models that rely heavily on linear algebra, such as convolutional neural networks (CNNs).
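To make the definition concrete, here is a minimal pure-Python sketch of a naive matrix multiplication that increments a counter on every multiply-accumulate step. The helper name matmul_with_mac_count is hypothetical, chosen for illustration only. Multiplying an m × k matrix by a k × n matrix performs m · k · n MACs.

```python
def matmul_with_mac_count(a, b):
    """Multiply matrices a (m x k) and b (k x n), given as lists of lists.

    Returns the product and the number of multiply-accumulate operations.
    """
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    out = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0  # the accumulator
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply + one add = one MAC
                macs += 1
            out[i][j] = acc
    return out, macs


product, macs = matmul_with_mac_count([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)  # [[19.0, 22.0], [43.0, 50.0]]
print(macs)     # 8, i.e. m * k * n = 2 * 2 * 2
```

Real libraries use much faster kernels, but the number of multiply-accumulates they perform for a dense matrix product is the same m · k · n.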

One thing worth mentioning is that FLOPs should not be the only factor used to judge computational efficiency. Several other factors matter when estimating a model's efficiency, for example: how much parallelism the system offers; the model's architecture (e.g., group convolutions and their cost in MACs); and the computing platform the model runs on (e.g., cuDNN provides GPU acceleration for deep neural networks, and standard operations such as forward passes and normalization are highly tuned).
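As a rough illustration of the group-convolution point, the sketch below counts MACs for a convolution whose channels are split into groups. The helper conv2d_macs and the layer sizes are hypothetical. Each output element only sees c_in / groups input channels, so the MAC count drops by a factor of groups; the count alone does not tell you whether runtime drops by the same factor, which is why it has to be read alongside the other factors listed above.

```python
def conv2d_macs(c_in, c_out, k, h_out, w_out, groups=1):
    """MACs for a 2D convolution with a square k x k kernel (bias ignored).

    Each output element needs (c_in / groups) * k * k multiply-accumulates.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    return h_out * w_out * c_out * (c_in // groups) * k * k


# Hypothetical layer: 256 -> 256 channels, 3x3 kernel, 14x14 output map.
standard = conv2d_macs(256, 256, 3, 14, 14)
depthwise = conv2d_macs(256, 256, 3, 14, 14, groups=256)  # one group per channel
print(standard)               # 115605504
print(depthwise)              # 451584
print(standard // depthwise)  # 256
```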

Are FLOPS and FLOPs the Same?

FLOPS, in all uppercase, is the abbreviation of “floating-point operations per second”. It refers to computation speed and is generally used to measure hardware performance. The “P” stands for “per” and the “S” for “second”, so together they denote a rate.

FLOPs (with a lowercase “s” marking the plural), on the other hand, refers to a number of floating-point operations. It is commonly used to measure the computational complexity of an algorithm or model. However, in discussions of AI, “FLOPs” is sometimes used with either meaning, leaving the reader to work out which one is intended. Some have even called for abandoning “FLOPs” altogether in favor of “FLOP” so the two are easier to tell apart. In this article, we will continue to use FLOPs.
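Because FLOPs counts work and FLOPS measures a rate, dividing one by the other gives an idealized, best-case runtime. The minimal sketch below assumes the hardware sustains its peak FLOPS the whole time, which real workloads rarely do; the helper name ideal_runtime_seconds and the 4 GFLOPs and 10 TFLOPS figures are hypothetical.

```python
def ideal_runtime_seconds(flops, peak_flops_per_second):
    """Lower-bound runtime: total FLOPs divided by hardware FLOPS.

    Assumes every cycle does useful floating-point work, which real
    workloads never achieve, so treat the result as a best case.
    """
    return flops / peak_flops_per_second


# Hypothetical numbers: a 4 GFLOPs forward pass on a 10 TFLOPS device.
t = ideal_runtime_seconds(4e9, 10e12)
print(f"{t * 1e3:.2f} ms")  # 0.40 ms
```

The units make the distinction clear: FLOPs divided by FLOPS (FLOPs per second) leaves seconds.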

Relationship between FLOPs and MACs

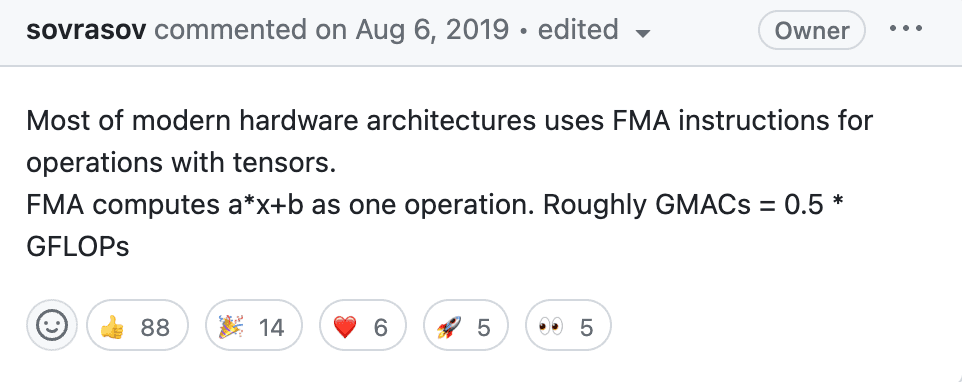

Figure 2: Relationship between GMACs and GFLOPs (source)

As mentioned earlier, FLOPs and MACs differ mainly in which arithmetic operations they count and in the contexts where they are used. The general consensus in the AI community, reflected in the GitHub comment in Figure 2, is that one MAC equals roughly two FLOPs (one multiplication plus one addition). Because multiply-accumulate operations dominate the computation in deep neural networks, MACs are often considered the more significant metric.
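The “roughly” comes from how additions are counted. In this minimal sketch (linear_layer_counts is a hypothetical helper), a fully connected layer y = Wx + b with W of shape (out, in) needs in · out MACs. Counting raw operations instead, each length-in dot product needs in multiplications and in − 1 additions, and the bias adds one more addition per output. With the bias the ratio is exactly 2; without it, slightly less. Tools may adopt different conventions here, so their reported numbers can differ slightly.

```python
def linear_layer_counts(in_features, out_features, bias=True):
    """Count (MACs, FLOPs) for y = W @ x (+ b) with W of shape (out, in)."""
    macs = in_features * out_features
    multiplies = in_features * out_features
    # Each dot product of length in_features needs in_features - 1 additions.
    adds = (in_features - 1) * out_features
    if bias:
        adds += out_features  # one extra addition per output for the bias
    flops = multiplies + adds
    return macs, flops


for bias in (False, True):
    macs, flops = linear_layer_counts(4096, 1000, bias=bias)
    print(bias, macs, flops, flops / macs)
# False 4096000 8191000 1.999755859375
# True 4096000 8192000 2.0
```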

How to Calculate FLOPs?

The good news is that several open-source packages for calculating FLOPs already exist, so you don't have to implement it from scratch. Some of the most popular are flops-counter.pytorch and pytorch-OpCounter. There are also packages such as torchstat, which provides a general network analyzer for PyTorch. Note that these packages support only a limited set of layers and models, so if your model contains custom layers, you may have to calculate FLOPs for them yourself.
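For a layer a package does not support, one approach is to derive its count by hand from the matrix shapes and add it to the package's total. The sketch below does this for a hypothetical custom attention layer; attention_macs, total_macs, the sequence length, head size, and the 1,000,000-MAC “profiler total” are all made-up illustrations. It counts only the two matrix multiplications in scaled dot-product attention.

```python
def attention_macs(seq_len, head_dim, num_heads=1):
    """MACs for the two matmuls in scaled dot-product attention.

    Q @ K^T: (n x d) @ (d x n) -> n * n * d MACs
    A @ V:   (n x n) @ (n x d) -> n * n * d MACs
    Softmax, scaling, and the Q/K/V projections are not counted here.
    """
    per_head = 2 * seq_len * seq_len * head_dim
    return per_head * num_heads


def total_macs(package_reported_macs, custom_layer_macs):
    """Add hand-counted MACs for unsupported layers to a profiler's total."""
    return package_reported_macs + sum(custom_layer_macs)


# Hypothetical: the profiler reported 1,000,000 MACs but skipped two attention layers.
custom = [attention_macs(128, 64, num_heads=8) for _ in range(2)]
print(custom[0])                      # 16777216
print(total_macs(1_000_000, custom))  # 34554432
```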

Here is a code example that counts the operations of torchvision's AlexNet using pytorch-OpCounter (imported as thop). Note that profile returns the number of MACs, so the FLOPs count is roughly twice that:

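```python
import torch
from torchvision.models import alexnet
from thop import profile, clever_format

model = alexnet()  # untrained weights suffice: weights do not change the operation count
dummy_input = torch.randn(1, 3, 224, 224)  # one 224 x 224 RGB image (batch size 1)

# thop reports multiply-accumulate operations (MACs) and the parameter count
macs, params = profile(model, inputs=(dummy_input,))
flops = 2 * macs  # approximate FLOPs under the "1 MAC ~ 2 FLOPs" convention
print(clever_format([macs, flops, params], "%.3f"))
```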

Conclusion

In this article, we introduced the definitions of FLOPs and MACs, when they are typically used, how they differ, and how to compute them with open-source Python packages.

References

- https://github.com/sovrasov/flops-counter.pytorch

- https://github.com/Lyken17/pytorch-OpCounter

- https://github.com/Swall0w/torchstat

- https://arxiv.org/pdf/1704.04861.pdf

- https://arxiv.org/abs/1608.06993

Danni Li is the current AI Resident at Meta. She's interested in building efficient AI systems, and her current research focus is on-device ML models. She is also a strong believer in open-source collaboration and in using community support to maximize our potential for innovation.