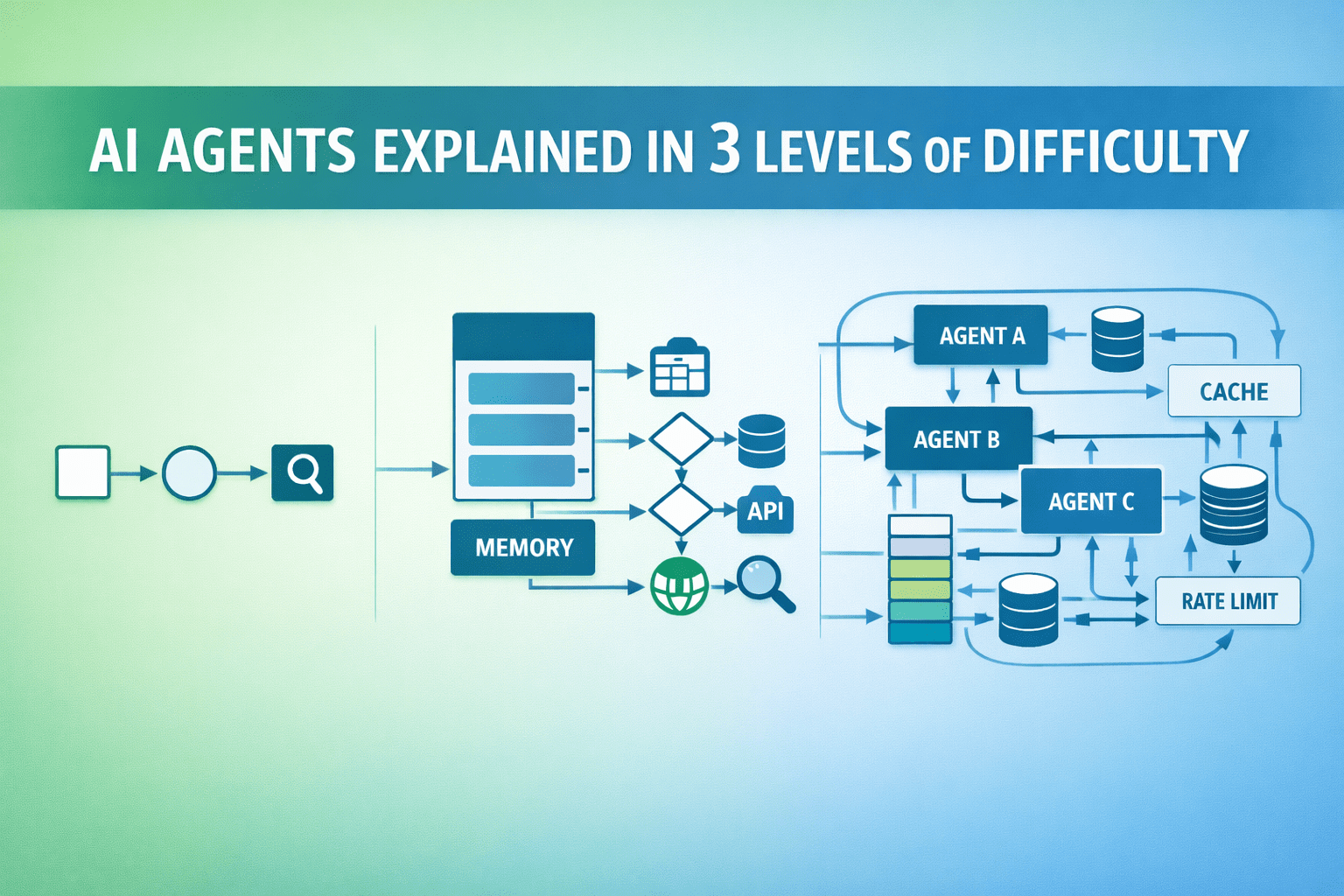

AI Agents Explained in 3 Levels of Difficulty

AI agents go beyond single responses to perform tasks autonomously. Here’s a simple breakdown across three levels of difficulty.

Image by Author

# Introduction

Artificial intelligence (AI) agents represent a shift from single-response language models to autonomous systems that can plan, execute, and adapt. While a standard large language model (LLM) answers one question at a time, an agent breaks down complex goals into steps, uses tools to gather information or take actions, and iterates until the task is complete.

Building reliable agents, however, is significantly harder than building chatbots. Agents must reason about what to do next, when to use which tools, how to recover from errors, and when to stop. Without careful design, they fail, get stuck in loops, or produce plausible-looking but incorrect results.

This article explains AI agents at three levels: what they are and why they matter, how to build them with practical patterns, and advanced architectures for production systems.

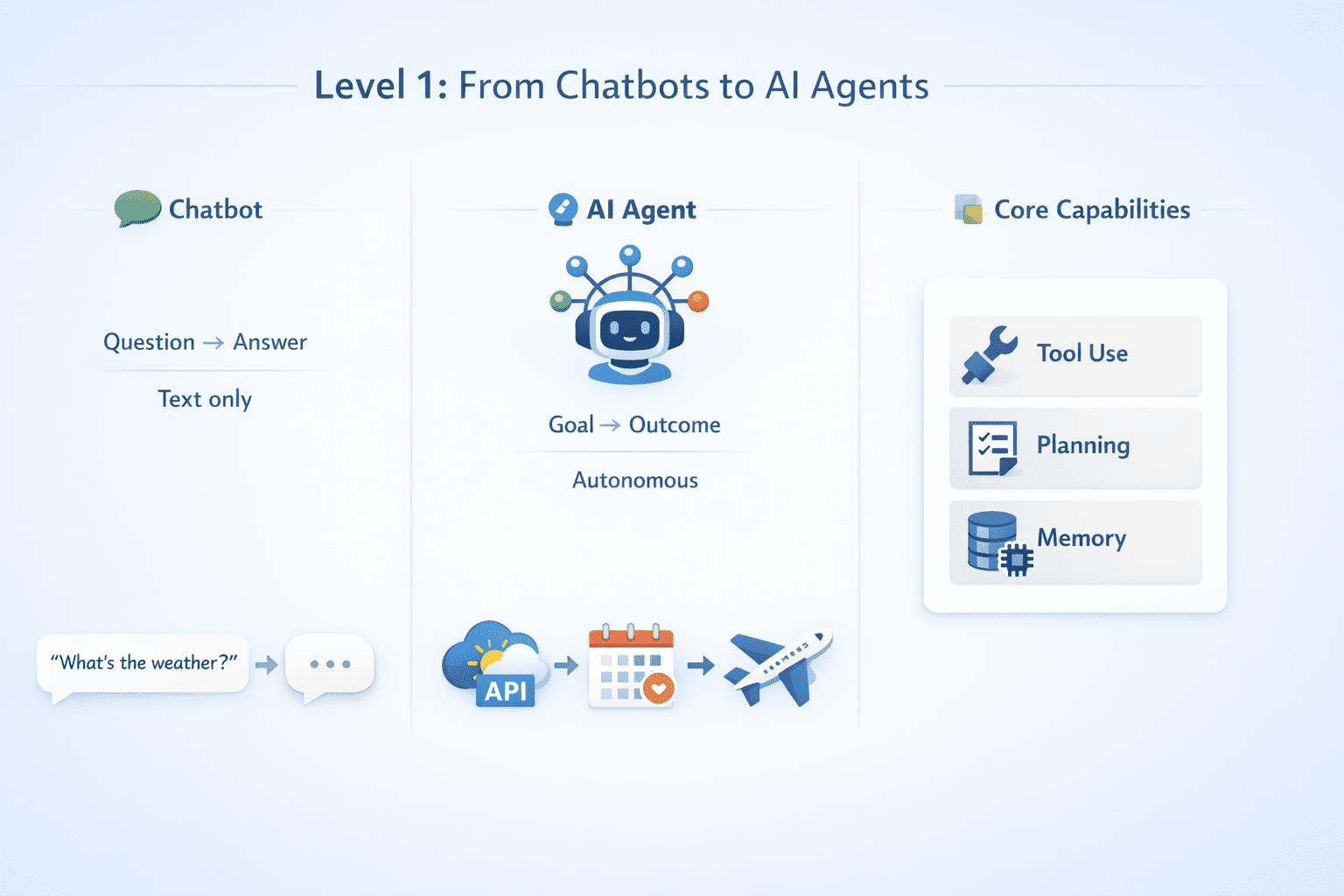

# Level 1: From Chatbots to Agents

A chatbot takes your question and gives you an answer. An AI agent takes your goal and figures out how to achieve it. The difference is autonomy.

Let’s take an example. When you ask a chatbot "What's the weather?", it generates text about the weather. When you ask an agent the same question, it decides to call a weather application programming interface (API), retrieves real data, and reports back.

When you say "Book me a flight to Tokyo next month under $800", the agent searches flights, compares options, checks your calendar, and may even make the booking — all without you specifying how.

Agents have three core capabilities that distinguish them from traditional chatbots.

// Tool Use

Tool use is a fundamental capability that allows agents to call external functions, APIs, databases, or services. Tools give agents grounding in reality beyond pure text generation.

// Planning

Planning enables agents to break down complex requests into actionable steps. When you ask an agent to "analyze this market," it transforms that high-level goal into a sequence of concrete actions: retrieve market data, identify trends, compare to historical patterns, and generate insights. The agent sequences these actions dynamically based on what it learns at each step, adapting its approach as new information becomes available.

// Memory

Memory allows agents to maintain state across multiple actions throughout their execution. The agent remembers what it's already tried, what worked, what failed, and what it still needs to do. This persistent awareness prevents redundant actions and enables the agent to build on previous steps toward completing its goal.

The agent loop is simple: observe the current state, decide what to do next, take that action, observe the result, repeat until done. In practice, this loop runs inside a scaffolding system that manages tool execution, tracks state, handles errors, and determines when to stop.
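
The loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production scaffold: `decide` is a hypothetical stand-in for the model call, and `TOOLS` holds a single made-up tool that returns canned data.

```python
from typing import Callable

# Hypothetical tool registry: one toy tool that returns canned weather data.
TOOLS: dict[str, Callable[[str], str]] = {
    "get_weather": lambda city: f"18C and cloudy in {city}",
}

def decide(goal: str, history: list[tuple[str, str, str]]) -> tuple[str, str]:
    """Stand-in for the model: choose the next action from the goal and history."""
    if not history:
        return ("get_weather", "Tokyo")   # nothing observed yet, so call the tool
    return ("finish", history[-1][2])     # answer with the latest observation

def run_agent(goal: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str, str]] = []   # (action, argument, observation)
    for _ in range(max_steps):                 # hard cap so the loop always ends
        action, arg = decide(goal, history)    # decide what to do next
        if action == "finish":
            return arg
        observation = TOOLS[action](arg)       # take the action
        history.append((action, arg, observation))  # record the result
    return "stopped: step limit reached"

answer = run_agent("What's the weather in Tokyo?")
```

The `history` list plays the role of memory, and `max_steps` is the simplest possible stop condition.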

Level 1: From Chatbots to Agents | Image by Author

# Level 2: Building AI Agents In Practice

Implementing AI agents requires explicit design choices across planning, tool integration, state management, and control flow.

// Agent Architectures

Different architectural patterns enable agents to approach tasks in distinct ways, each with specific tradeoffs. Here are the ones you will use most often.

ReAct (Reason + Act) interleaves reasoning and action in a transparent way. The model generates reasoning about what to do next, then selects a tool to use. After the tool executes, the model sees the result and reasons about the next step. This approach makes the agent's decision process visible and debuggable, allowing developers to understand exactly why the agent chose each action.

Plan-and-Execute separates strategic thinking from execution. The agent first generates a complete plan mapping out all anticipated steps, then executes each one in sequence. If execution reveals problems or unexpected results, the agent can pause and replan with this new information. This separation reduces the chance of getting stuck in local loops where the agent repeatedly tries similar unsuccessful approaches.
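
A rough sketch of Plan-and-Execute with replanning might look like the following. The planner and executor here are hypothetical stand-ins (a real agent would call a model and real tools), and the primary API is simulated as being down so that the replanning path runs.

```python
def make_plan(goal: str, failed: set[str]) -> list[str]:
    """Stand-in planner: returns step names, avoiding steps known to fail."""
    source = "fetch_from_backup" if "fetch_from_api" in failed else "fetch_from_api"
    return [source, "summarize"]

def execute(step: str) -> str:
    """Stand-in executor: the primary API is simulated as unavailable."""
    if step == "fetch_from_api":
        raise RuntimeError("API unavailable")
    return f"{step}: ok"

def plan_and_execute(goal: str, max_replans: int = 2) -> list[str]:
    failed: set[str] = set()
    for _ in range(max_replans + 1):
        plan = make_plan(goal, failed)     # plan every step up front
        results = []
        try:
            for step in plan:              # then execute the steps in order
                results.append(execute(step))
            return results
        except RuntimeError:
            failed.add(step)               # remember what failed, then replan
    return []                              # gave up after max_replans

results = plan_and_execute("summarize sales data")
```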

Reflection enables learning from failure within a single session. After attempting a task, the agent reflects on what went wrong and generates explicit lessons about its mistakes. These reflections are added to context for the next attempt, allowing the agent to avoid repeating the same errors and improve its approach iteratively.
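
As an illustration, a reflection loop can be as small as the sketch below. Both `attempt` and `reflect` are hypothetical stand-ins for model calls; the date-format task is invented purely to show lessons being carried into the next attempt.

```python
LESSON = "use ISO dates (YYYY-MM-DD)"

def attempt(task: str, lessons: list[str]) -> tuple[bool, str]:
    """Stand-in for a model attempt; it succeeds only once it has the right lesson."""
    if LESSON in lessons:
        return True, "2024-03-01"
    return False, "03/01/2024 rejected: expected YYYY-MM-DD"

def reflect(feedback: str) -> str:
    """Stand-in for a reflection prompt that turns feedback into a lesson."""
    return LESSON if "YYYY-MM-DD" in feedback else "check the input format"

def solve_with_reflection(task: str, max_attempts: int = 3):
    lessons: list[str] = []
    for n in range(1, max_attempts + 1):
        ok, output = attempt(task, lessons)
        if ok:
            return output, n, lessons
        lessons.append(reflect(output))    # lesson goes into context for the next try
    return None, max_attempts, lessons

output, attempts, lessons = solve_with_reflection("format the invoice date")
```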

Read 7 Must-Know Agentic AI Design Patterns to learn more.

// Tool Design

Tools are the agent's interface to capabilities. Design them carefully.

Clear schemas make tool use reliable. Define each tool with an explicit name, description, and parameter schema that leave no ambiguity. A tool named `search_customer_orders_by_email` is far more effective than `search_database` because it tells the agent exactly what the tool does and when to use it. Include examples of appropriate use cases for each tool to guide the agent's decision-making.
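
For instance, a tool definition could look like the sketch below. The layout follows the JSON Schema style that many model providers accept, but the exact envelope differs by provider, so treat the field names, the `limit` parameter, and the `missing_params` helper as assumptions for illustration.

```python
# Hypothetical tool definition in a JSON Schema-like layout.
SEARCH_ORDERS_TOOL = {
    "name": "search_customer_orders_by_email",
    "description": (
        "Look up a customer's orders by email address. Use when the user "
        "asks about order status or history and provides an email."
    ),
    "parameters": {
        "type": "object",
        "properties": {
            "email": {"type": "string", "description": "Customer email address"},
            "limit": {"type": "integer", "description": "Maximum orders to return"},
        },
        "required": ["email"],
    },
}

def missing_params(tool: dict, args: dict) -> list[str]:
    """Return required parameters that the model's arguments left out."""
    return [p for p in tool["parameters"]["required"] if p not in args]

problems = missing_params(SEARCH_ORDERS_TOOL, {"limit": 5})
```

Checking arguments against the schema before running the tool lets the scaffold send a precise correction back to the model instead of a stack trace.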

Structured outputs make information extraction reliable and consistent. Tools should return JavaScript Object Notation (JSON) rather than prose, giving the agent structured data it can easily parse and use in subsequent reasoning steps. This eliminates ambiguity and reduces errors caused by misinterpreting natural language responses.

Explicit errors enable recovery from failures. Return error objects with codes and messages that explain exactly what went wrong.
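
Here is a small sketch of a tool that returns JSON on success and a structured error object on failure. The in-memory `ORDERS` data, the error codes, and the `retryable` field are all made up for the example.

```python
import json

# Hypothetical in-memory data standing in for a real order database.
ORDERS = {"ana@example.com": [{"order_id": "A-100", "status": "shipped"}]}

def error(code: str, message: str, retryable: bool) -> str:
    """Build a structured error the agent can branch on."""
    return json.dumps(
        {"ok": False, "error": {"code": code, "message": message, "retryable": retryable}}
    )

def search_customer_orders_by_email(email: str) -> str:
    if "@" not in email:
        return error("INVALID_EMAIL", f"{email!r} is not a valid email address", False)
    if email not in ORDERS:
        return error("CUSTOMER_NOT_FOUND", f"no customer with email {email!r}", False)
    return json.dumps({"ok": True, "orders": ORDERS[email]})

found = json.loads(search_customer_orders_by_email("ana@example.com"))
bad = json.loads(search_customer_orders_by_email("not-an-email"))
```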

Level 2: Building AI Agents in Practice | Image by Author

// State And Control Flow

Effective state management prevents agents from losing track of their goals or getting stuck in unproductive patterns.

Task state tracking maintains a clear record of what the agent is trying to accomplish, what steps are complete, and what remains. Keep this as a structured object rather than relying solely on conversation history, which can become unwieldy and difficult to parse. Explicit state objects make it easy to check progress and identify when the agent has drifted from its original goal.
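
One possible shape for such a state object is a small dataclass. The field and method names below are illustrative choices, not a standard.

```python
from dataclasses import dataclass, field

@dataclass
class TaskState:
    goal: str
    pending: list[str]
    completed: list[str] = field(default_factory=list)
    failed: dict[str, str] = field(default_factory=dict)   # step -> reason

    def mark_done(self, step: str) -> None:
        self.pending.remove(step)
        self.completed.append(step)

    def mark_failed(self, step: str, reason: str) -> None:
        self.pending.remove(step)
        self.failed[step] = reason

    @property
    def finished(self) -> bool:
        return not self.pending            # nothing left to attempt

state = TaskState(
    goal="Book a flight to Tokyo under $800",
    pending=["search_flights", "compare_options", "check_calendar"],
)
state.mark_done("search_flights")
state.mark_failed("check_calendar", "calendar API timed out")
```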

Termination conditions prevent agents from running indefinitely or wasting resources. Set multiple stop criteria including a task completion signal, maximum iterations (typically 10 to 50 depending on complexity), repetition detection to catch loops, and resource limits for tokens, cost, and execution time. Having diverse stopping conditions ensures the agent can exit gracefully under various failure modes.
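
These stop criteria can be combined into a single check that runs once per iteration. The sketch below is one way to do it; the default limits are arbitrary placeholders, and cost and wall-clock limits would follow the same pattern as the token budget.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Limits:
    max_iterations: int = 20
    max_tokens: int = 50_000
    max_repeats: int = 3       # identical consecutive actions that count as a loop

def stop_reason(done: bool, iteration: int, tokens_used: int,
                actions: list[str], limits: Limits) -> Optional[str]:
    """Return why the agent should stop, or None to keep going."""
    if done:
        return "task_complete"
    if iteration >= limits.max_iterations:
        return "max_iterations"
    if tokens_used >= limits.max_tokens:
        return "token_budget"
    recent = actions[-limits.max_repeats:]
    if len(recent) == limits.max_repeats and len(set(recent)) == 1:
        return "repetition_detected"
    return None

reason = stop_reason(False, 3, 1_200, ["search", "search", "search"], Limits())
```

Returning the reason, rather than a bare boolean, makes it easy to log why each run ended.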

Error recovery strategies allow agents to handle problems without completely failing. Retry transient failures with exponential backoff to handle temporary issues like network problems. Implement fallback strategies when primary approaches fail, giving the agent alternative paths to success. When full completion isn't possible, return partial results with clear explanations of what was accomplished and what failed.
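
Retrying with exponential backoff could be sketched as follows. `TransientError` is a hypothetical exception type for failures worth retrying, and the `sleep` parameter exists only so the example can run without actually waiting.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, HTTP 503, ...)."""

def retry_with_backoff(fn, max_attempts: int = 4, base_delay: float = 0.5,
                       sleep=time.sleep):
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise                                   # out of attempts: surface the error
            delay = base_delay * 2 ** attempt           # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, delay * 0.1))  # small jitter on top

# Demo: a call that fails twice, then succeeds.
calls = {"n": 0}

def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("timeout")
    return "ok"

delays: list[float] = []
result = retry_with_backoff(flaky, sleep=delays.append)  # record delays instead of sleeping
```

Only transient errors are retried here; anything else propagates immediately so the agent can fall back to another approach.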

// Evaluation

Rigorous evaluation reveals whether your agent actually works in practice.

Task success rate measures the fundamental question: given benchmark tasks, what percentage does the agent complete correctly? Track this metric as you iterate on your agent design, using it as your north star for improvement. A decline in success rate indicates regressions that need investigation.

Action efficiency examines how many steps the agent takes to complete tasks. More actions aren't always worse; some complex tasks genuinely require many steps. However, when an agent takes 30 actions for something that should take 5, it indicates problems with planning, tool selection, or getting stuck in unproductive loops.

Failure mode analysis requires classifying failures into categories like wrong tool selected, correct tool called incorrectly, got stuck in loop, or hit resource limit. By identifying the most common failure modes, you can prioritize fixes that will have the biggest impact on overall reliability.
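
All three metrics can be computed from a simple log of benchmark runs. The records below are invented sample data, and the failure labels mirror the categories named above.

```python
from collections import Counter

# Made-up benchmark results, one record per task run.
runs = [
    {"task": "t1", "success": True,  "steps": 4,  "failure_mode": None},
    {"task": "t2", "success": False, "steps": 30, "failure_mode": "stuck_in_loop"},
    {"task": "t3", "success": False, "steps": 6,  "failure_mode": "wrong_tool_selected"},
    {"task": "t4", "success": True,  "steps": 5,  "failure_mode": None},
    {"task": "t5", "success": False, "steps": 28, "failure_mode": "stuck_in_loop"},
]

# Task success rate: fraction of tasks completed correctly.
success_rate = sum(r["success"] for r in runs) / len(runs)

# Action efficiency: average steps on successful runs.
successes = [r["steps"] for r in runs if r["success"]]
avg_steps_on_success = sum(successes) / len(successes)

# Failure mode analysis: which category to fix first.
failure_modes = Counter(r["failure_mode"] for r in runs if not r["success"])
top_failure, top_count = failure_modes.most_common(1)[0]
```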

Level 2: State, Control, and Evaluation | Image by Author

# Level 3: Agentic Systems In Production

Building agents that work reliably at scale requires sophisticated orchestration, observability, and safety constraints.

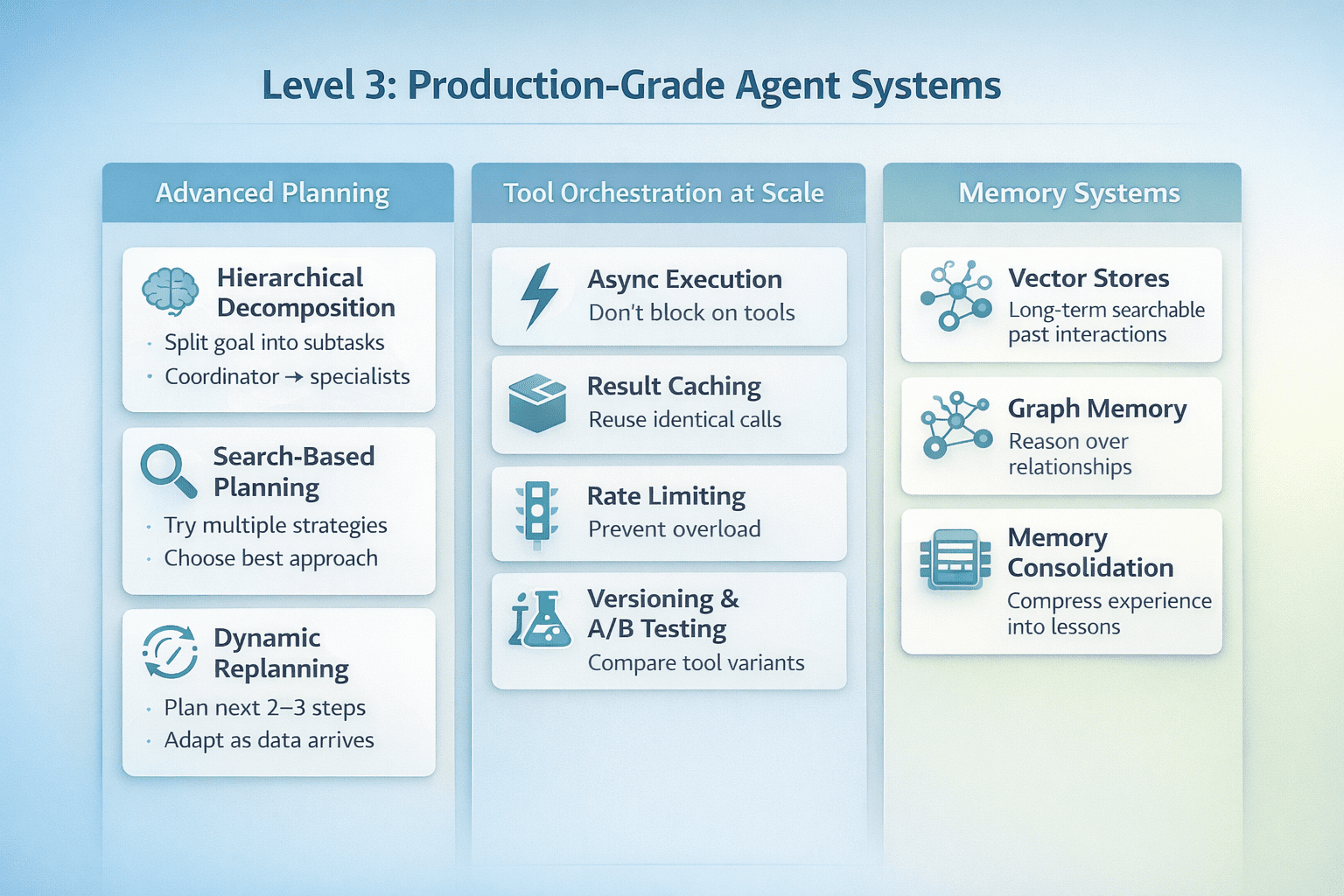

// Advanced Planning

Sophisticated planning strategies enable agents to handle complex, multi-faceted tasks that simple sequential execution cannot manage.

Hierarchical decomposition breaks complex tasks into subtasks recursively. A coordinator agent delegates to specialized sub-agents, each equipped with domain-specific tools and prompts tailored to their expertise. This architecture enables both specialization — each sub-agent becomes effective at its narrow domain — and parallelization, where independent subtasks execute simultaneously to reduce overall completion time.
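
A coordinator that fans out independent subtasks in parallel might be sketched with `asyncio`. The two specialist agents are hypothetical placeholders that sleep briefly instead of calling a model, and the fixed decomposition stands in for a real planning step.

```python
import asyncio

async def research_agent(topic: str) -> str:
    await asyncio.sleep(0.01)              # stands in for model and tool calls
    return f"research notes on {topic}"

async def pricing_agent(topic: str) -> str:
    await asyncio.sleep(0.01)
    return f"price comparison for {topic}"

# Hypothetical registry of specialist sub-agents.
SPECIALISTS = {"research": research_agent, "pricing": pricing_agent}

async def coordinator(goal: str) -> dict[str, str]:
    subtasks = {"research": goal, "pricing": goal}   # stand-in decomposition
    names = list(subtasks)
    # Independent subtasks run concurrently; gather preserves input order.
    results = await asyncio.gather(*(SPECIALISTS[n](subtasks[n]) for n in names))
    return dict(zip(names, results))

report = asyncio.run(coordinator("electric bikes"))
```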

Search-based planning explores multiple candidate approaches before committing to one. Alternatively, you can interleave planning and execution for maximum adaptability: rather than generating a complete plan upfront, the agent generates only the next two or three actions, executes them, observes the results, and replans based on what it learned. This lets the agent adapt as new information emerges, avoiding the limitations of rigid plans that assume a static environment.

// Tool Orchestration At Scale

Production systems require sophisticated tool management to maintain performance and reliability under real-world conditions.

Async execution prevents blocking on long-running operations. Rather than waiting idle while a tool executes, the agent can work on other tasks or subtasks.

Result caching eliminates redundant work by storing tool outputs. Each tool call is hashed by its function name and parameters, creating a unique identifier for that exact query. Before executing a tool, the system checks if that identical call has been made recently. Cache hits return stored results immediately. This avoids redundant API calls that waste time and rate-limit quota.
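
The caching scheme described above could be sketched like this. The `ToolCache` class, its time-to-live default, and the `get_weather` tool are assumptions for the example; it also assumes tool parameters are JSON-serializable.

```python
import hashlib
import json
import time

class ToolCache:
    def __init__(self, ttl_seconds: float = 300.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, object]] = {}

    @staticmethod
    def key(tool_name: str, params: dict) -> str:
        # sort_keys makes the hash independent of argument order.
        payload = json.dumps({"tool": tool_name, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def call(self, tool_name: str, fn, **params):
        k = self.key(tool_name, params)
        hit = self._store.get(k)
        if hit is not None and self.clock() - hit[0] < self.ttl:
            return hit[1]                      # fresh cache hit: skip the tool
        result = fn(**params)
        self._store[k] = (self.clock(), result)
        return result

calls: list[str] = []

def get_weather(city: str) -> dict:
    calls.append(city)                         # track real executions
    return {"city": city, "temp_c": 18}

cache = ToolCache(ttl_seconds=60)
first = cache.call("get_weather", get_weather, city="Tokyo")
second = cache.call("get_weather", get_weather, city="Tokyo")
```

Caching like this only suits tools whose results are safe to reuse; calls with side effects, such as sending an email, should bypass it.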

Rate limiting prevents runaway agents from exhausting quotas or overwhelming external services. Enforce per-tool rate limits. When an agent hits a rate limit, the system can queue requests, slow down execution, or fail gracefully rather than causing cascading errors.
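
One common way to enforce a per-tool limit is a token bucket, sketched below. The article does not prescribe a specific algorithm, so this is just one reasonable choice; the rate, capacity, and fake clock are illustrative.

```python
import time

class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False       # caller can queue, wait, or fail gracefully

# Demo with a fake clock so the example runs instantly.
fake_time = [0.0]
bucket = TokenBucket(rate_per_sec=1.0, capacity=2, clock=lambda: fake_time[0])
burst = [bucket.try_acquire() for _ in range(3)]
fake_time[0] = 1.0                      # one second later, one token has refilled
after_wait = bucket.try_acquire()
```

In practice you would keep one bucket per tool, for example in a dictionary keyed by tool name.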

Versioning and A/B testing enable continuous improvement without risk. Maintain multiple versions of tool implementations and randomly assign agent requests to different versions. Track success rates and performance metrics for each version to validate that changes actually improve reliability before rolling them out to all traffic.
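
A sketch of version assignment and per-version tracking follows. Note one deliberate substitution: instead of a fresh random draw per request, it hashes the request ID into a bucket, so the same request always maps to the same version. The traffic split and version names are hypothetical.

```python
import hashlib
from collections import defaultdict

# Hypothetical traffic split: 90% on the current tool version, 10% on the candidate.
TRAFFIC_SPLIT = {"v1": 0.9, "v2": 0.1}

def assign_version(request_id: str) -> str:
    digest = hashlib.sha256(request_id.encode()).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64   # roughly uniform in [0, 1]
    cumulative = 0.0
    for version, share in TRAFFIC_SPLIT.items():
        cumulative += share
        if point < cumulative:
            return version
    return version                                      # guard against float rounding

stats = defaultdict(lambda: {"success": 0, "total": 0})

def record(version: str, success: bool) -> None:
    stats[version]["total"] += 1
    stats[version]["success"] += int(success)

def success_rate(version: str) -> float:
    s = stats[version]
    return s["success"] / s["total"] if s["total"] else 0.0

version = assign_version("req-42")
record("v2", True)
record("v2", False)
```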

// Memory Systems

Advanced memory architectures allow agents to learn from experience and reason over accumulated knowledge.

You can store agent experiences in vector databases where they can be retrieved by semantic similarity. When an agent encounters a new task, the system retrieves similar past experiences as few-shot examples, showing the agent how it or other agents handled comparable situations. This enables learning across sessions, building organizational knowledge that persists beyond individual agent runs.
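
The retrieval step can be illustrated with a toy example. To stay self-contained, it uses word counts as a stand-in "embedding" and a plain list as the store; a real system would use an embedding model and a vector database instead. The stored experiences are invented.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (a real system would use an embedding model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Made-up past experiences: (task description, what worked).
memory = [
    ("refund a duplicate charge", "looked up the payment, then issued a refund"),
    ("reset a forgotten password", "verified identity, then sent a reset link"),
    ("cancel a subscription", "confirmed the plan, then cancelled at period end"),
]

def recall(task: str, k: int = 1) -> list[tuple[str, str]]:
    query = embed(task)
    ranked = sorted(memory, key=lambda m: cosine(query, embed(m[0])), reverse=True)
    return ranked[:k]                      # top-k experiences to use as few-shot examples

best = recall("customer wants a refund for a double charge")
```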

Graph memory models entities and relationships as a knowledge graph, enabling complex relational reasoning. Rather than treating information as isolated facts, graph memory captures how concepts connect. This allows multi-hop queries like "What projects is developer A working on that depend on developer B's database?" where the answer requires traversing multiple relationship edges.
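
The multi-hop query in that example can be sketched over a handful of triples. The entities and relation names are made up, and a production system would likely use a graph database rather than a Python list.

```python
# Knowledge graph as (subject, relation, object) triples; all names are invented.
edges = [
    ("dev_a", "works_on", "checkout_service"),
    ("dev_a", "works_on", "landing_page"),
    ("dev_b", "owns", "orders_db"),
    ("checkout_service", "depends_on", "orders_db"),
]

def objects(subject: str, relation: str) -> set[str]:
    """Follow one edge type out of a node."""
    return {o for s, r, o in edges if s == subject and r == relation}

def projects_depending_on(dev: str, owner: str) -> set[str]:
    owned = objects(owner, "owns")                   # hop 1: what the owner owns
    return {
        project
        for project in objects(dev, "works_on")      # hop 2: the developer's projects
        if objects(project, "depends_on") & owned    # hop 3: their dependencies
    }

answer = projects_depending_on("dev_a", "dev_b")
```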

Memory consolidation prevents unbounded growth while preserving learned knowledge. Periodically, the system compresses detailed execution traces into generalizable lessons — abstract patterns and strategies rather than specific action sequences. This distillation maintains the valuable insights from experience while discarding low-value details, keeping memory systems performant as they accumulate more data.

Level 3: Production-Grade Agent Systems | Image by Author

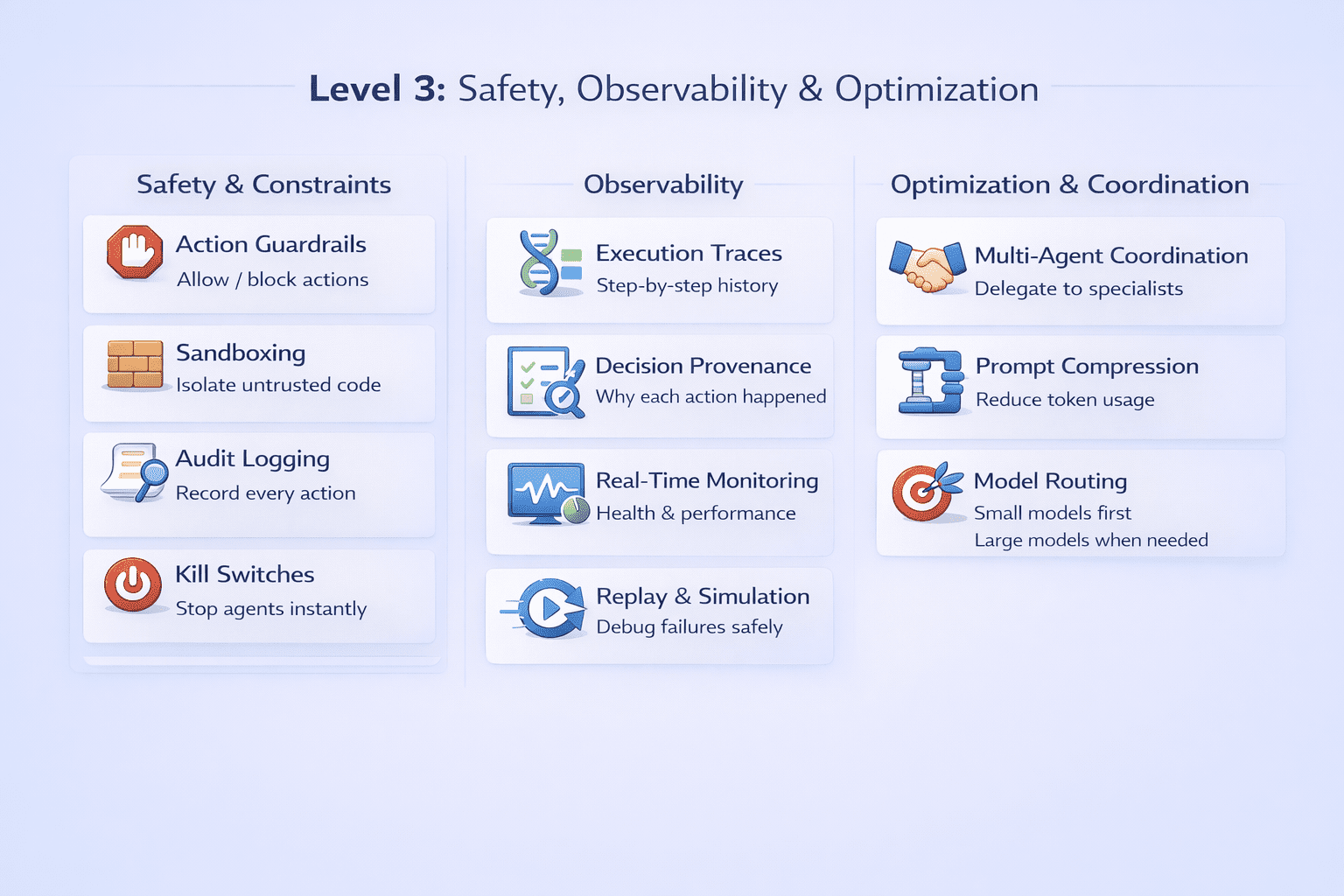

// Safety And Constraints

Production agents require multiple layers of safety controls to prevent harmful actions and ensure reliability.

Guardrails define explicit boundaries for agent behavior. Specify allowed and forbidden actions in machine-readable policies that the system can enforce automatically. Before executing any action, check it against these rules. For high-risk but sometimes legitimate actions, require human approval through an interrupt mechanism.
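
A machine-readable policy check might look like the sketch below. The tool names and policy layout are hypothetical, and denying anything not explicitly listed is a design choice made for this example.

```python
from enum import Enum

class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NEEDS_APPROVAL = "needs_approval"

# Hypothetical policy; in practice this might be loaded from a config file.
POLICY = {
    "allowed": {"search_orders", "get_weather"},
    "needs_approval": {"send_payment", "send_email"},
    "forbidden": {"delete_database"},
}

def check_action(tool_name: str) -> Decision:
    if tool_name in POLICY["forbidden"]:
        return Decision.DENY
    if tool_name in POLICY["needs_approval"]:
        return Decision.NEEDS_APPROVAL     # pause and ask a human
    if tool_name in POLICY["allowed"]:
        return Decision.ALLOW
    return Decision.DENY                   # default-deny anything unlisted

decision = check_action("send_payment")
```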

Sandboxing isolates untrusted code execution from critical systems. Run tool code in containerized environments with restricted permissions that limit what damage compromised or buggy code can cause.

Audit logging creates an immutable record of all agent activity. Log every action with full context including timestamp, user, tool name, parameters, result, and the reasoning that led to the decision.
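
One way to approximate an immutable log in application code is to chain entries by hash, so that editing an earlier entry becomes detectable. This is a sketch of that idea, not a substitute for write-once storage; the field values are invented and are assumed to be JSON-serializable.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64

def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_entry(log: list[dict], **fields) -> dict:
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS,   # link to previous entry
        **fields,
    }
    entry["hash"] = _digest(entry)          # hash covers everything except itself
    log.append(entry)
    return entry

def verify(log: list[dict]) -> bool:
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
            return False
        prev = entry["hash"]
    return True

log: list[dict] = []
append_entry(log, user="u1", tool="get_weather", params={"city": "Tokyo"},
             result="18C and cloudy", reasoning="user asked about the weather")
append_entry(log, user="u1", tool="search_flights", params={"to": "Tokyo"},
             result="3 options", reasoning="user wants to book a trip")
intact = verify(log)
```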

Kill switches provide emergency control when agents behave unexpectedly. Implement multiple levels: a user-facing cancel button for individual tasks, automated circuit breakers that trigger on suspicious patterns like rapid repeated actions, and administrative overrides that can disable entire agent systems instantly if broader problems emerge.
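
An automated circuit breaker for rapid repeated actions could be sketched as below. The thresholds are placeholders, and the caller passes in timestamps so the example stays deterministic.

```python
from collections import deque

class CircuitBreaker:
    """Trips when the same action repeats too often within a time window."""

    def __init__(self, max_repeats: int = 3, window_seconds: float = 10.0):
        self.max_repeats = max_repeats
        self.window = window_seconds
        self.events = deque()              # (timestamp, action) pairs
        self.tripped = False

    def allow(self, action: str, now: float) -> bool:
        if self.tripped:
            return False                   # stays open until someone resets it
        self.events.append((now, action))
        while now - self.events[0][0] > self.window:
            self.events.popleft()          # drop events outside the window
        repeats = sum(1 for _, a in self.events if a == action)
        if repeats >= self.max_repeats:
            self.tripped = True
        return not self.tripped

    def reset(self) -> None:               # administrative override
        self.events.clear()
        self.tripped = False

breaker = CircuitBreaker(max_repeats=3, window_seconds=10.0)
decisions = [breaker.allow("send_email", t) for t in (0.0, 1.0, 2.0)]
```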

// Observability

Production systems need comprehensive visibility into agent behavior to debug failures and optimize performance.

Execution traces capture the complete decision path. Record every reasoning step, tool call, observation, and decision, creating a complete audit trail. These traces enable post-hoc analysis where developers can examine exactly what the agent was thinking and why it made each choice.

Decision provenance adds rich context to action logs. For every action, record why the agent chose it, what alternatives were considered, what information was relevant to the decision, and what confidence level the agent had.

Real-time monitoring provides operational visibility into fleet health. Track metrics like number of active agents, task duration distributions, success and failure rates, tool usage patterns, and error rates by type.

Replay and simulation enable controlled debugging of failures. Capture failed execution traces and replay them in isolated debug environments. Inject different observations at key decision points to test counterfactuals: what would the agent have done if the tool had returned different data? This controlled experimentation reveals the root causes of failures and validates fixes.

// Multi-Agent Coordination

Complex systems often require multiple agents working together, necessitating coordination protocols.

Task delegation routes work to specialized agents based on their capabilities. A coordinator agent analyzes incoming tasks and determines which specialist agents to involve based on the required skills and available tools. The coordinator delegates subtasks, monitors their progress, and synthesizes results from multiple agents into a coherent final output. Communication protocols enable structured inter-agent interaction.

// Optimization

Production systems require careful optimization to meet latency and cost targets at scale.

Prompt compression addresses the challenge of growing context size. Agent prompts become large as they accumulate tool schemas, examples, conversation history, and retrieved memories. Apply compression techniques that reduce token count while preserving essential information — removing redundancy, using abbreviations consistently, and pruning low-value details.

Selective tool exposure dynamically filters which tools the agent can see based on task context.

Model routing optimizes the cost-performance tradeoff by using different models for different decisions. Route routine decisions to smaller, faster, cheaper models that can handle straightforward cases. Escalate to larger models only for complex reasoning that requires sophisticated planning or domain knowledge. This dynamic routing can reduce costs by 60 to 80% while maintaining quality on difficult tasks.
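
Both ideas reduce to small filtering and routing functions. In the sketch below, the tool tags, the model names, and the 4,000-token threshold are all hypothetical; real routing rules would come from your own evaluation data.

```python
# Hypothetical tool catalog, tagged by the task contexts each tool belongs to.
TOOLS_BY_TAG = {
    "get_weather": {"travel"},
    "search_flights": {"travel"},
    "run_sql": {"analytics"},
    "plot_chart": {"analytics"},
}

def visible_tools(task_tags: set[str]) -> list[str]:
    """Selective tool exposure: show only tools relevant to this task."""
    return sorted(name for name, tags in TOOLS_BY_TAG.items() if tags & task_tags)

def pick_model(step: dict) -> str:
    """Model routing: escalate only when the step looks hard."""
    hard = step["needs_planning"] or step["estimated_tokens"] > 4000
    return "large-model" if hard else "small-model"

tools = visible_tools({"travel"})
routine = pick_model({"needs_planning": False, "estimated_tokens": 300})
complex_step = pick_model({"needs_planning": True, "estimated_tokens": 300})
```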

Level 3: Safety, Observability, and Optimization | Image by Author

# Wrapping Up

AI agents represent a fundamental shift in what's possible with language models — from generating text to autonomously accomplishing goals. Building reliable agents requires treating them as distributed systems with orchestration, state management, error handling, observability, and safety constraints.

Here are a few resources to level up your agentic AI toolkit:

- Building Effective AI Agents | Anthropic

- Writing effective tools for AI agents—using AI agents | Anthropic

- A Practical Guide to Building Agents | OpenAI

- Choose a design pattern for your agentic AI system | Cloud Architecture Center

Happy learning!

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she's working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more. Bala also creates engaging resource overviews and coding tutorials.