5 Machine Learning Projects You Can No Longer Overlook

We all know the big machine learning projects out there: Scikit-learn, TensorFlow, Theano, etc. But what about the smaller niche projects that are actively developed, providing useful services to users? Here are 5 such projects.

The popular machine learning projects, in general, are popular because they either provide a wide range of needed services or they were the first (or possibly best) to provide a particular niche service to users. These popular projects include Scikit-learn, TensorFlow, Theano, MXNet (maybe?), Weka (formerly), and so on. Depending on the particular ecosystem(s) you work in, and on your machine learning goals, the projects which you consider popular may differ slightly; however, they all share the similarity that they provide services to a large base of users.

But there are all sorts of smaller machine learning projects out there that people are building and using: pipelines, wrappers, high-level APIs, cleaners, etc. They provide both niche and flexible services, usually for smaller numbers of users, for all sorts of reasons. This post will present 5 such smaller projects for readers to familiarize themselves with.

I'm not necessarily suggesting you go out and try all (or any) of these; if there is some particular requirement you are looking to fill which you happen to find a corresponding tool for in this list, then by all means give it a try. The real value here, however, at least in my view, is checking out what the projects offer, how they are implemented, and what others are building to fit into their ecosystems. You might get some good ideas of where to take your own projects. But best case: something here fills a need perfectly and you solve a problem, thanks to the work the developers listed herein are doing.

There are no real criteria for these items, I'm sorry to report. They are simply a collection of interesting projects I have noted over the past few months and thought were promising enough to share with readers. Also note that I am firmly invested in the Python ecosystem, and so these tools have been discovered accordingly. I don't have any bias against any of the projects that R or C++ or any other particular environment has to offer (and may discover and share such projects in future posts); this list came together generically, however, based on my internet wanderings as I searched for useful tools.

So here they are: 5 machine learning projects you should definitely have a look at, in no particular order (but numbered like they are in order, because I like numbering things):

1. Deepy

Deepy is an extensible deep learning framework based on Theano. It provides a clean, high-level interface for components such as LSTMs, Batch Normalization, and Auto Encoders. Deepy clearly aims for simplicity, and its documentation and examples aim for the same. It also has a sister project, which uses Deepy to implement Deep Recurrent Attentive Writer (DRAW) generative models.

To illustrate Deepy's simplicity and cleanliness, here's a multi-layer model with dropout, from the project's Github:

    # A multi-layer model with dropout for MNIST task.
    from deepy import *

    model = NeuralClassifier(input_dim=28*28)
    model.stack(Dense(256, 'relu'),
                Dropout(0.2),
                Dense(256, 'relu'),
                Dropout(0.2),
                Dense(10, 'linear'),
                Softmax())

    trainer = MomentumTrainer(model)
    annealer = LearningRateAnnealer(trainer)

    mnist = MiniBatches(MnistDataset(), batch_size=20)
    trainer.run(mnist, controllers=[annealer])

You may have even heard of Deepy already; its Github repo has 305 stars and has been forked 51 times, as of this writing. The project is a decent exemplar of high-level deep learning APIs and wrappers that are becoming widespread (or seem to be). Deepy is authored by Raphael Shu.

2. MLxtend

Sebastian Raschka has put together MLxtend, something he is quick to point out is a work in progress, but is also something which attempts to tick a number of different boxes. MLxtend is a collection of useful tools and extensions for machine learning tasks. As he explains:

Essentially, it's just a collection of useful tools and reference implementations related to ML and data science in general. Why did I come up with it? There are a couple of reasons:

- Implementations of algorithms that I couldn't find anywhere else (e.g., the Sequential Feature Selection algorithms, the Majority Voting Classifier, the Stacking estimators, plotting decision regions, ...)

- Implementations for teaching purposes (logistic regression, softmax regression, multi-layer perceptron, PCA, kernel PCA...); these impl. focus on code readability rather than pure efficiency

- Wrappers for convenience: tensorflow softmax regression and multi-layer perceptrons, column-wise standardization for pandas data frames

This is essentially a library of commonly-used general machine learning functions that Sebastian has written and frequently uses. Additionally, Sebastian really likes to code, and figured that offering this "zoo" of different things (as he refers to it) to others would push him to keep the code "tidier" than usual.

Many of the implemented functions share similarities with scikit-learn's API, but future additional functionality will not necessarily be restricted by this. The big takeaway here: Sebastian promises that there is much more to come... so stay tuned. There's a good chance that any feature or novel algorithm that Sebastian plays with will end up being packaged in MLxtend.
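To give a feel for one of the ideas named in that list, here is a minimal sketch of hard majority voting across several classifiers. It is not MLxtend's code or API; the function name `majority_vote` and the toy predictions are made up for illustration, and only the standard library is used.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-classifier label lists into one label per sample.

    predictions: list of equal-length label sequences, one per classifier.
    (Hypothetical helper for illustration; not part of MLxtend.)
    """
    if not predictions:
        raise ValueError("need at least one classifier's predictions")
    n_samples = len(predictions[0])
    if any(len(p) != n_samples for p in predictions):
        raise ValueError("all classifiers must predict the same number of samples")

    combined = []
    # zip(*predictions) yields, for each sample, the votes of all classifiers
    for votes in zip(*predictions):
        label, _count = Counter(votes).most_common(1)[0]
        combined.append(label)
    return combined


# Toy predictions from three hypothetical classifiers on four samples
clf_a = ["cat", "dog", "dog", "cat"]
clf_b = ["cat", "cat", "dog", "dog"]
clf_c = ["dog", "cat", "dog", "cat"]

print(majority_vote([clf_a, clf_b, clf_c]))
```

Each sample simply receives the label that most classifiers agree on. A real implementation would typically also support weighted votes or averaging predicted probabilities.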

3. datacleaner

datacleaner is the work of researcher Randal Olson, who is also responsible for the fantastic TPOT machine learning pipeline project. Olson bills datacleaner as a "Python tool that automatically cleans data sets and readies them for analysis." He is quick to declare that it is not magic, but also points out what it can do:

What datacleaner will do is save you a ton of time encoding and cleaning your data once it's already in a format that pandas DataFrames can handle.

datacleaner is a work in progress, but is currently capable of handling the following regular (and time-consuming) data cleaning operations: optionally drops rows with missing values; replaces missing values with either mode or median, on a column by column basis; encodes non-numerical variables with numerical equivalents. Randal tells us that he is looking for contributors, especially those with ideas about which other data cleaning operations datacleaner could perform in an automated fashion.
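The three operations listed above can be sketched in a few lines. This is not datacleaner's code or API, and it deliberately avoids pandas so the logic stays visible: the table is a plain list of dicts, `None` marks a missing value, and the function name `clean_rows` is hypothetical. The choice of median for numeric columns and mode for everything else is an assumption made for this sketch.

```python
from statistics import median, mode


def clean_rows(rows, drop_missing=False):
    """Clean a table given as a list of dicts; None marks a missing value.

    Assumed policy (illustration only): numeric columns are filled with the
    column median, all other columns with the column mode, and non-numeric
    columns are then replaced by integer codes.
    """
    if drop_missing:
        rows = [r for r in rows if all(v is not None for v in r.values())]
    if not rows:
        return []

    cleaned = [dict(r) for r in rows]  # copy so the input is left untouched
    for col in list(cleaned[0].keys()):
        present = [r[col] for r in cleaned if r[col] is not None]
        if not present:
            continue  # nothing to infer a fill value from; leave column as-is

        numeric = all(
            isinstance(v, (int, float)) and not isinstance(v, bool)
            for v in present
        )
        fill = median(present) if numeric else mode(present)
        for r in cleaned:
            if r[col] is None:
                r[col] = fill

        if not numeric:
            # Integer codes assigned in sorted order so results are repeatable
            codes = {v: i for i, v in enumerate(sorted(set(present), key=str))}
            for r in cleaned:
                r[col] = codes[r[col]]
    return cleaned


rows = [
    {"age": 31, "city": "Oslo"},
    {"age": None, "city": "Lima"},
    {"age": 45, "city": None},
    {"age": 27, "city": "Lima"},
]
for row in clean_rows(rows):
    print(row)
```

With pandas the same steps would be a handful of DataFrame calls; the point here is only to show what "fill, then encode, column by column" amounts to.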

Randal has an attention to detail that anyone who reads his blog or his Github repos already knows, and the concise documentation for this project is no exception. I have been using datacleaner recently, and so far it delivers on its promises.

4. auto-sklearn

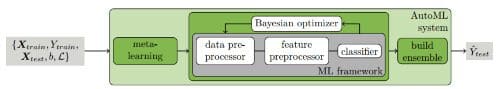

auto-sklearn is automated machine learning for the Scikit-learn environment.

auto-sklearn frees a machine learning user from algorithm selection and hyperparameter tuning. It leverages recent advances in Bayesian optimization, meta-learning and ensemble construction. Learn more about the technology behind auto-sklearn by reading this paper published at NIPS 2015.

Its documentation is quite thorough, and the repo includes a few concise examples. I have, admittedly, not used it yet, but many others have; it has collected nearly 400 stars on Github. Given my propensity for Scikit-learn, I imagine I will try this out in the near future.

auto-sklearn is developed mainly by the Machine Learning for Automated Algorithm Design group at the University of Freiburg.

5. Deep Mining

Deep Mining is a machine learning pipeline auto-tuner, coming to us from Sebastien Dubois of the CSAIL lab at MIT. From the repo:

This software will test iteratively, and smartly, some hyperparameter sets in order to find as quickly as possible the best ones to achieve the best classification accuracy that a pipeline can offer.

Deep Mining does not seem to be a well-known project, given its relatively modest number of repo stars; however, given that it comes out of CSAIL, and has development activity within the past month, it may be worth benchmarking this against other similar automated pipeline tools. It comes with a few examples, and its usage seems to be straightforward.

More on the methods used:

The folder GCP-HPO contains all the code implementing the Gaussian Copula Process (GCP) and a hyperparameter optimization (HPO) technique based on it. Gaussian Copula Process can be seen as an improved version of the Gaussian Process, that does not assume a Gaussian prior for the marginal distributions but lies on a more complex prior. This new technique is proved to outperform GP-based hyperparameter optimization, which is already far better than the randomized search.

A paper on the GCP approach is forthcoming.
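For context, the randomized search that the quote above uses as its baseline can be sketched as follows. This is not Deep Mining's code and it does not implement GCP or any model-based optimizer; `random_search`, `toy_accuracy`, and the search space are hypothetical names invented for this illustration, with the toy function standing in for "train the pipeline and return its validation accuracy."

```python
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample hyperparameter sets uniformly at random and keep the best one.

    space maps each hyperparameter name to a (low, high) range of floats.
    objective takes a dict of hyperparameters and returns a score to maximize.
    """
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {
            name: rng.uniform(low, high) for name, (low, high) in space.items()
        }
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_accuracy(params):
    # Hypothetical stand-in for a real pipeline evaluation;
    # peaks at 1.0 when lr == 0.1 and dropout == 0.3.
    return 1.0 - (params["lr"] - 0.1) ** 2 - (params["dropout"] - 0.3) ** 2


space = {"lr": (0.0, 1.0), "dropout": (0.0, 0.9)}
best_params, best_score = random_search(toy_accuracy, space, n_trials=200)
print(best_params, round(best_score, 4))
```

Every trial here is independent of the previous ones. Model-based approaches such as GP- or GCP-based optimization instead use the scores seen so far to decide which hyperparameter set to try next, which is where the claimed speed-up comes from.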
