AI and Deep Learning, Explained Simply

AI can now see, hear, and even bluff better than most people. We look into what is new and real about AI and Deep Learning, and what is hype or misinformation.

Sci-fi-level Artificial Intelligence (AI) like HAL 9000 has been promised since the 1960s, but PCs and robots stayed dumb until recently. Now tech giants and startups are announcing the AI revolution: self-driving cars, robo-doctors, robo-investors, etc. PwC recently estimated that AI will contribute $15.7 trillion to the world economy by 2030. “AI” is the buzzword of 2017, as “dot com” was in 1999, and everyone claims to be into AI. Don’t be confused by the AI hype. Is this a bubble, or is it real? What is different from older AI flops?

AI is neither easy nor fast to apply. The most exciting AI examples come from universities or the tech giants. Self-appointed AI experts who promise to revolutionize any company with the latest AI in a short time are spreading misinformation, and some are just rebranding old tech as AI. Everyone is already using the latest AI through services from Google, Microsoft, Amazon, etc. But most businesses will not master “deep learning” for custom in-house projects any time soon, because most have too little relevant digital data to train an AI reliably. As a result, AI will not kill all jobs, especially because humans will be needed to train and test each AI.

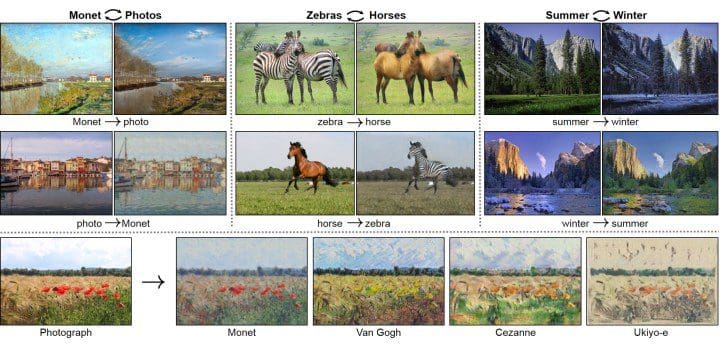

AI can now “see” and master vision jobs. For example, it can identify cancer and other diseases in medical images statistically better than human radiologists, ophthalmologists, dermatologists, etc. AI can drive cars, read lips, etc. AI can paint in any style learned from samples (for example, Picasso’s or yours) and apply that style to photos. It can also do the inverse: guess a realistic photo from a painting, hallucinating the missing details. AIs that look at screenshots of web pages or apps can write code that produces similar pages or apps.

Fig.: Style transfer, learning a style from one image and applying it to another. Credits: Andrej Karpathy

AI can now “hear”, and not only to understand your voice. It can compose music in the style of the Beatles (or in yours), imitate the voice of any person after listening to it for a while, and so on. The average person cannot tell whether a painting or a piece of music was made by a human or a machine, or whether a voice belongs to a human or to an AI impersonator.

An AI trained to win at poker learned to bluff, handling missing and potentially fake or misleading information. Bots trained to negotiate and find compromises learned to deceive too: they guess when you are not telling them the truth, and they lie as needed. A Google Translate AI trained only on Japanese-English and Korean-English examples also translated Korean-Japanese, a language pair it was never trained on. It appears to have built an intermediate language on its own that represents any sentence regardless of language.

Machine learning (ML), a subset of AI, makes machines learn from experience, that is, from examples of the real world. The more data there is, the more it learns. A machine is said to learn from experience with respect to a task if its performance at that task improves with experience. Most AIs are still made of fixed rules and do not learn. From now on I will use “ML” to refer to “AI that learns from data”, to underline the difference.
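
To make “performance improves with experience” concrete, here is a minimal sketch. It assumes a toy task with a hidden rule, “x > 0.3 means positive”, which is made up for illustration. The learner only sees labeled examples and places its boundary halfway between the largest negative and the smallest positive example. The names `make_examples`, `learn_threshold` and `error_rate` are hypothetical helpers, not a library API.

```python
import random

TRUE_THRESHOLD = 0.3  # hypothetical hidden rule: x > 0.3 means "positive"

def make_examples(n, rng):
    """Draw n labeled examples (x, label) from the hidden rule ("experience")."""
    xs = [rng.random() for _ in range(n)]
    return [(x, x > TRUE_THRESHOLD) for x in xs]

def learn_threshold(examples):
    """Place the boundary midway between the largest negative and smallest positive example."""
    negatives = [x for x, label in examples if not label]
    positives = [x for x, label in examples if label]
    lo = max(negatives, default=0.0)
    hi = min(positives, default=1.0)
    return (lo + hi) / 2

def error_rate(threshold):
    """Fraction of uniformly distributed inputs in [0, 1) that the learned boundary gets wrong."""
    return abs(threshold - TRUE_THRESHOLD)

rng = random.Random(0)
for n in (5, 50, 500, 5000):
    t = learn_threshold(make_examples(n, rng))
    print(f"{n:>5} examples -> learned threshold {t:.4f}, error {error_rate(t):.4f}")
```

Nobody writes the rule `x > 0.3` into the learner. The error shrinks as the examples crowd in around the true boundary, which is what “learning from experience” means here.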

Artificial Neural Networks (ANNs) are only one approach to ML; other approaches include decision trees, support vector machines, etc. Deep learning uses ANNs with many layers, that is, many levels of abstraction. Despite the “deep” hype, many ML methods are “shallow”. Winning MLs are often a mix: an ensemble of methods (for example trees + deep learning + others) that are trained independently and then combined. Each method may make different errors, so averaging their results can sometimes beat any single method.
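
Here is a small worked sketch of why averaging can win. The numbers are hypothetical predictions from three independently trained models. Each model makes mistakes, but in different places and directions, so the mistakes partly cancel when the predictions are averaged. If all the models made the same mistake, averaging would not help.

```python
# Hypothetical numbers: true values and three models' predictions for them.
truth   = [10, 20, 30, 40]
model_a = [12, 20, 27, 40]
model_b = [9, 23, 30, 38]
model_c = [10, 17, 32, 42]

def mean_absolute_error(predictions, targets):
    """Average size of the mistakes, ignoring their direction."""
    return sum(abs(p - t) for p, t in zip(predictions, targets)) / len(targets)

def ensemble_average(*models):
    """Combine independently trained models by averaging their predictions point by point."""
    return [sum(preds) / len(preds) for preds in zip(*models)]

combined = ensemble_average(model_a, model_b, model_c)
for name, preds in [("a", model_a), ("b", model_b), ("c", model_c), ("ensemble", combined)]:
    print(f"{name}: MAE {mean_absolute_error(preds, truth):.3f}")
# a: 1.250, b: 1.500, c: 1.750, ensemble: 0.167
```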

The old AI did not learn. It was rule-based: many “if this then that” rules written by humans. This can count as AI, since it solves problems, but not as ML, since it does not learn from data. Most current AI and automation systems are still rule-based code. ML has been known since the 1960s, but like the human brain, it needs billions of computations over lots of data. Training an ML on 1980s PCs took months, and digital data was rare. Handcrafted rule-based code solved most problems fast, so ML was largely forgotten. With today’s hardware (NVIDIA GPUs, Google TPUs, etc.), however, you can train an ML in minutes. Good training settings are now known, and far more digital data is available. So after 2010, MLs mastered one AI field after another (vision, speech, language translation, game playing, etc.), beating rule-based AIs and often humans too.

Why did AI beat humans at chess in 1997, but at Go only in 2016? Some problems can be mastered by humans as a limited, well-defined rule set, for example beating Kasparov (then world champion) at chess. For those, it is enough (and best) to write rule-based code the old way. Chess is played on an 8 x 8 board where each piece moves in limited ways. Once obviously bad lines are discarded, the positions to examine a dozen moves ahead number in the billions, and in 1997 computers simply became fast enough to search them. Go is played on a 19 x 19 board where a stone can be placed on any free point, and it has more possible positions than there are atoms in the universe. No machine could try them all in a billion years. It is like trying random letter combinations until you get this article, or random paint strokes until you get a Picasso: it will never happen. The only known hope is to train an ML on the task. But ML is approximate, not exact. Use it only for intuitive tasks that you cannot reduce to deterministic “if this then that” logic in a reasonably small number of steps. ML is “stochastic”: it handles patterns that you can analyse statistically but cannot predict precisely.
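
Python’s arbitrary-size integers are enough to check the Go arithmetic. This sketch uses a simple upper bound: each of the 361 points is empty, black or white, giving 3^361 configurations. The number of legal positions is smaller, but still astronomically large. The speed of a billion positions per second is an assumption chosen for illustration, and 10^80 is the commonly quoted rough estimate of atoms in the observable universe.

```python
import math

BOARD_POINTS = 19 * 19        # intersections on a Go board
ATOMS_ESTIMATE = 10 ** 80     # commonly quoted rough estimate for the observable universe
CHECKS_PER_SECOND = 10 ** 9   # assumption: a machine checking a billion positions per second
SECONDS_PER_YEAR = 365 * 24 * 3600

# Each point can be empty, black or white, so 3**361 is an upper bound on board
# configurations (the legal ones are fewer, but still astronomically many).
upper_bound = 3 ** BOARD_POINTS
years = upper_bound // (CHECKS_PER_SECOND * SECONDS_PER_YEAR)

print(f"configurations: about 10^{math.log10(upper_bound):.0f}")
print(f"more than atoms in the universe: {upper_bound > ATOMS_ESTIMATE}")
print(f"years to enumerate them all: about 10^{math.log10(years):.0f}")
```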

ML automates automation, as long as you have prepared the training data correctly. That is unlike manual automation, where humans come up with the rules to automate a task. Those rules are lots of “if this then that” describing, for example, which e-mails are likely to be spam, or whether a medical photo shows cancer. In ML, we instead only feed in data samples of the problem to solve: lots (thousands or more) of spam and non-spam e-mails, cancer and non-cancer photos, etc., all first sorted, cleaned, and labeled by humans. The ML then figures out (learns) the rules by itself, as if by magic, but it does not explain those rules. You show it a photo of a cat and the ML says it is a cat, but it gives no indication why.
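
The contrast with hand-written spam rules can be sketched with a tiny naive Bayes classifier. Naive Bayes is one classic technique among many, and nothing here claims any particular spam filter uses it. Nobody writes “free means spam”. Instead, the word statistics are learned from labeled e-mails. The four training e-mails are hypothetical, and real filters need far more data.

```python
import math
from collections import Counter

# Hypothetical labeled data: humans sorted these into "spam" and "ham" (not spam).
TRAINING = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch on monday with the team", "ham"),
]

def train(examples):
    """Count how often each word appears in each class: these counts are the learned 'rules'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(text.split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, class_counts, vocabulary

def classify(text, model):
    """Pick the class with the highest naive Bayes log-probability (add-one smoothing)."""
    word_counts, class_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in class_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_docs)
        for word in text.split():
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train(TRAINING)
print(classify("claim your free prize", model))   # spam
print(classify("team meeting on monday", model))  # ham
```

The learned counts are inspectable. With thousands of e-mails and words, however, they stop being a rule that a person can read as an explanation.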

(Bidirectional AI transforms: horse to zebra and back, summer to winter and back, photo to Monet and back, etc. Credits: Jun-Yan Zhu, Taesung Park et al.)

Most ML is Supervised Learning, where the training examples are given to the ML along with labels: a description or transcription of each example. A human first has to separate the photos of cats from those of dogs, spam from legitimate e-mails, etc. If you label the data incorrectly, the ML results will be incorrect; this is very important, as will be discussed later. Feeding unlabeled data to an ML is Unsupervised Learning. Here the ML discovers patterns and clusters in the data, which is useful for exploration but unlikely to solve your problems on its own.
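
The sketch below shows both ideas on hypothetical data, with animal weights in kg as the only feature. The supervised part is a 1-nearest-neighbor classifier, which copies the label of the closest labeled example. A single mislabeled example is enough to make it wrong. The unsupervised part is a 1-D k-means with two clusters. It finds the two groups without any labels, but it cannot name them “cat” or “dog”.

```python
# Supervised: labeled examples (weight in kg, label). All data here is hypothetical.
labeled = [(3.5, "cat"), (4.0, "cat"), (4.5, "cat"), (25.0, "dog"), (30.0, "dog")]
mislabeled = [(3.5, "cat"), (4.0, "dog"), (4.5, "cat"), (25.0, "dog"), (30.0, "dog")]

def nearest_neighbor(train, x):
    """Copy the label of the closest labeled training example."""
    return min(train, key=lambda pair: abs(pair[0] - x))[1]

print(nearest_neighbor(labeled, 4.1))     # cat
print(nearest_neighbor(mislabeled, 4.1))  # dog: the human labeling mistake is learned too

# Unsupervised: the same weights without labels, split into two clusters.
def two_means(xs, iterations=10):
    """1-D k-means with k=2: alternate assigning points and moving the two centers."""
    centers = [min(xs), max(xs)]
    groups = ([], [])
    for _ in range(iterations):
        groups = ([], [])
        for x in xs:
            groups[0 if abs(x - centers[0]) <= abs(x - centers[1]) else 1].append(x)
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups

centers, groups = two_means([3.5, 4.0, 4.5, 25.0, 30.0])
print(groups)  # ([3.5, 4.0, 4.5], [25.0, 30.0]): clusters found, but no names attached
```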

In Anomaly Detection you identify unusual things that differ from the norm, for example fraud or cyber intrusions. An ML trained only on old frauds would miss the ever-new fraud ideas. Instead, you can teach it what normal activity looks like and ask the ML to warn you about any suspicious difference. Governments already rely on ML to detect tax evasion.
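
“Teach the normal activity, warn on any difference” can be sketched with a simple statistical baseline. The sketch learns the mean and standard deviation of normal amounts and flags anything more than three standard deviations away (a z-score). This is a deliberately simple stand-in, not the method any government or bank actually uses. The spending data and the 3.0 cut-off are assumptions.

```python
import statistics

def fit_normal_profile(normal_amounts):
    """Learn what 'normal' looks like: mean and standard deviation of past activity."""
    return statistics.mean(normal_amounts), statistics.stdev(normal_amounts)

def is_anomaly(amount, profile, max_z=3.0):
    """Flag anything more than max_z standard deviations away from normal."""
    mean, std = profile
    return abs(amount - mean) / std > max_z

# Hypothetical daily card spending; no fraud examples are needed for training.
normal = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 44.1, 40.8]
profile = fit_normal_profile(normal)
print(is_anomaly(46.0, profile))   # False: looks like usual activity
print(is_anomaly(950.0, profile))  # True: never seen as fraud, but far from normal
```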

Reinforcement Learning is shown in the 1983 movie WarGames, where a computer decides not to start World War III. It plays out every scenario at light speed and finds that all of them would cause world destruction. Through millions of trials and errors within the rules of a game or an environment, the AI discovers which actions yield the greatest rewards. AlphaGo was trained this way: it played against itself millions of times, reaching superhuman skill. It made surprising moves, never seen before, that humans considered mistakes. Later, these moves proved to be brilliantly innovative tactics. The ML became more creative than humans at Go. At poker and other games with hidden cards, MLs learn to bluff and deceive too: they do whatever is best to win.
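
Trial and error toward the greatest reward can be sketched with the simplest reinforcement-learning setting, a multi-armed bandit solved with the epsilon-greedy rule. AlphaGo used far more elaborate methods. The three actions and their hidden win rates are hypothetical, and `epsilon=0.1` (explore 10% of the time) is an assumed setting.

```python
import random

def epsilon_greedy(reward_probs, steps=10_000, epsilon=0.1, seed=0):
    """Learn by trial and error which action pays off most, seeing only the rewards received."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)
    values = [0.0] * len(reward_probs)  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))                 # explore: random try
        else:
            action = max(range(len(values)), key=values.__getitem__)  # exploit: best so far
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]  # update the average
    return values, counts

# Hidden win rates of three hypothetical moves; the learner never sees these numbers.
values, counts = epsilon_greedy([0.2, 0.5, 0.8])
print(counts)  # the third action (index 2) should end up chosen far more often than the others
```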

The “AI effect” is when people argue that an AI is not real intelligence. Humans subconsciously need to believe that they have a magical spirit and a unique role in the universe. Every time a machine outperforms humans at a new piece of intelligence, such as playing chess, recognizing images, or translating, people say: “That’s just brute-force computation, not intelligence”. Lots of AI is built into many apps, but once it is widely used, it is no longer labeled “intelligence”. Suppose “intelligence” is only what AI has not done yet (what is still unique to the brain). Then dictionaries should be updated every year with entries like: “math was considered intelligence until the 1950s, but no longer, since computers can do it”. That is quite strange. As for “brute force”, a human brain has about 100 trillion neuronal connections, far more than any computer on earth. ML cannot do “brute force”, because trying all the combinations would take billions of years. Instead, ML makes “educated guesses” using fewer computations than a brain. So it should be the “smaller” AI that claims the human brain is not real intelligence, only brute-force computation.

ML is not a human brain simulator: real neurons are very different. ML is an alternative way to reach brain-like results, similar to a brain the way a car is similar to a horse. What matters is that both the car and the horse can take you from point A to point B. The car does it faster, but it consumes more energy and lacks most of a horse’s features. Both the brain and ML run statistics (probability) to approximate complex functions: they give results that are a bit wrong, but usable. MLs and brains give different results on the same task, because they approximate in different ways. Everyone knows that the brain forgets things and is limited at explicit math, while machines are perfect at memory and math. But the old idea that machines either give exact results or are broken is wrong and outdated. Humans make many mistakes, but instead of “this brain is broken!”, you hear “study more!”. MLs that make mistakes are not “broken” either: they must study more data, or different data. MLs trained on biased (human-generated) data will end up racist, sexist, and unfair: human in the worst way. AI should not be compared only with our brain. AI is different, and that is an opportunity. We train MLs on our data to imitate only human jobs, activities, and brains. But the same MLs, if trained in other galaxies, could imitate different (perhaps better) alien brains. Let’s try to think in alien ways too.

AI is becoming as mysterious as humans. The idea that computers cannot be creative, liars, wrong, or human-like comes from the old rule-based AI, which was indeed predictable, but that seems to have changed with ML. The arguments that dismiss each new capability mastered by AI as “not real intelligence” are running out. The real issue left is general versus narrow AI.

(Please forget the general AI seen in movies. But the “narrow AI” is smart too!)

Unlike in some other sciences, you cannot verify whether an ML is correct using a logical theory. To judge whether an ML is correct, you can only test its results (errors) on new, unseen data. The ML is not a black box: you can see the “if this then that” list it produces and runs, but that list is often too big and complex for any human to follow. ML is a practical science that tries to reproduce the real world’s chaos and human intuition, without giving a simple or theoretical explanation. What it gives you is the linear algebra that produces the results, and that linear algebra is too big to understand. It is like having an idea that works without being able to explain exactly how you came up with it. For the brain, that is called inspiration, intuition, or the subconscious; in computers, it is called ML. If you could get the complete list of neuron signals that caused a decision in a human brain, could you understand why and how the brain really made that decision? Maybe, but it would be complex.
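
Testing on unseen data usually means holding out part of the labeled data before training, as sketched below. The data is hypothetical: the true pattern is “x > 50”, with 10% of the labels flipped to mimic noise. The 25% test fraction is an assumed choice. A “model” that just memorizes its training answers scores perfectly on the data it has seen, and only the held-out test set reveals that it has not learned the pattern. The helper names are illustrative, not a library API.

```python
import random

def split(examples, test_fraction=0.25, seed=0):
    """Shuffle, then hold out a test set that the model never sees during training."""
    rng = random.Random(seed)
    shuffled = list(examples)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def accuracy(model, examples):
    """Fraction of (x, label) pairs the model gets right."""
    return sum(model(x) == label for x, label in examples) / len(examples)

def train_memorizer(train):
    """A 'model' that memorizes every training answer and says False for anything new."""
    memory = dict(train)
    return lambda x: memory.get(x, False)

def train_threshold(train):
    """Pick the cut-off t for the rule 'x > t' that makes the fewest training mistakes."""
    best_t = min(range(101), key=lambda t: sum((x > t) != label for x, label in train))
    return lambda x: x > best_t

# Hypothetical data: the true pattern is "x > 50", but about 10% of labels are flipped.
rng = random.Random(42)
data = [(x, (x > 50) != (rng.random() < 0.1)) for x in range(100)]
train, test = split(data)

for name, model in [("memorizer", train_memorizer(train)), ("threshold", train_threshold(train))]:
    print(f"{name}: train {accuracy(model, train):.2f}, test {accuracy(model, test):.2f}")
```

Only the test column says anything about how a model will behave on new data.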

Everyone can intuitively imagine (and some can even draw) a person’s face, both as it is and in Picasso’s style. They can also imagine (and some can even play) sounds or musical styles. But no one can describe the face, the sound, or the style change with a complete, working formula. Humans can visualize only up to 3 dimensions: even Einstein could not consciously conceive ML-like math with, say, 500 dimensions. Yet our brains solve such 500-D math all the time, intuitively, as if by magic. Why don’t we solve it consciously? Imagine if, for each idea, the brain also gave us the formulas it used, with thousands of variables. That extra information would confuse us and slow us down a lot, and for what? No human could use pages-long math; we did not evolve with a USB cable on our heads.