Are physicians worried about computers machine learning their jobs?

We review a JAMA article on “Unintended Consequences of Machine Learning in Medicine” and argue that a number of alarming opinions in this piece are not supported by evidence.

Pradeep Raamana, Cross Invalidated.

The Journal of the American Medical Association (JAMA) published a viewpoint titled “Unintended Consequences of Machine Learning in Medicine” [Cabitza2017JAMA]. The title is eye-catching, and it is an interesting read touching upon several important points of concern to those working at the crossroads of machine learning (ML) and decision support systems (DSS). This viewpoint is timely, arriving at a time when others are also expressing concern about inflated expectations of machine learning and its fundamental limitations [Chen2017NEJM]. However, several points put forth as alarming in this piece are, in my opinion, unsupported. In this quick take, I hope to convince you that the reports of unintended consequences specifically due to ML have been greatly exaggerated.

TL;DR:

Q: Have there been unintended consequences due to ML-DSS in the past? A. Yes.

Q: Were they all due to limitations specific to ML and ML models? A. No.

Q: Where do the troubles arise from? A. Failures in design and validation of DSS.

Q: So what’s the eye-catching title about? A. An unnecessary alarm.

Q: But, does it raise any relevant issues? A. Yes, but it does not deliver on them.

Q: Do I need to read the original piece? A. Yes. This discussion is important.

Comments

The viewpoint is timely and raises several points of concern, ranging from uncertainty in medical data and difficulties in integrating contextual information to possible negative consequences. Although I agree with the viewpoint that these are important issues to be resolved, I do not share its implication (intended or not) that the use of ML in medicine is causing them. Some of the keywords used in the viewpoint, like overreliance, deskilling and black-box models, over-generalize the scope of the limitations of ML models. To my understanding of the Viewpoint, the concerns raised are mostly due to clinical workflow management and failures therein, not due to the ML part per se, which, it needs to be stressed, is only one component of the clinical DSS [Pusic2004BCMJ, see below].

Image from: Martin Pusic, MD, Dr J. Mark Ansermino, FFA, MMed, MSc, FRCPC. Clinical decision support systems. BCMJ, Vol. 46, No. 5, June, 2004, page(s) 236-239.

Moreover, the viewpoint completely skipped discussing the effectiveness and strengths of ML-DSS, which would put their limitations in perspective. Did you know that the annual cost of human and medical errors in healthcare is over $17 billion and over 250,000 American deaths [Donaldson2000NAP,Andel2012JHCF]?

I suggest you read the original viewpoint [Cabitza2017JAMA] and then my response below to get a better perspective. I do not agree with some of the points the viewpoint makes, mostly because they are either exaggerated, not sufficiently backed up, or unnecessarily lay the blame on ML. I quote a few statements from the Viewpoint below (organized by sections in their piece; emphases mine) and offer a point-by-point rebuttal in the indented bullet points:

Deskilling

- The Viewpoint defines deskilling as follows: “the reduction of the level of skill required to complete a task when some or all components of the task are partly automated, and which may cause serious disruptions of performance or inefficiencies whenever technology fails or breaks down”

- The deskilling point being made is similar to the general decline in our ability to multiply numbers in our head or by hand, as calculators and computers have become commonplace. Implying that we would lose the ability to multiply numbers, with “overreliance” on calculators and computers, is unnecessarily alarming. We may be slower to multiply nowadays compared to the pre-calculator era, or have forgotten a few speed tricks, but I doubt we will totally forget how to multiply numbers. Using cars or motor vehicles as an example, even though automated transport has become commonplace, we never lost the ability to walk or run.

- The study cited [Hoff2011HCMR] to support this point was based on interviews with 78 U.S. primary care physicians. A survey! Based on views and experiences of primary care physicians, not objective measurements of effectiveness in a large-scale and formal study. Recent surveys and polls have brought us to the brink of a nuclear war now!

- And the two things they studied in [Hoff2011HCMR] were the use of electronic medical records and electronic clinical guidelines. They were not even based on ML.

- The supporting study concludes “Primary care physicians perceive and experience deskilling as a tangible outcome of using particular health care innovations. However, such deskilling is, in part, a function of physicians’ own actions as well as extant pressures in the surrounding work context.” Perhaps I am missing something, but this does not imply ML or DSS to be the cause of deskilling.

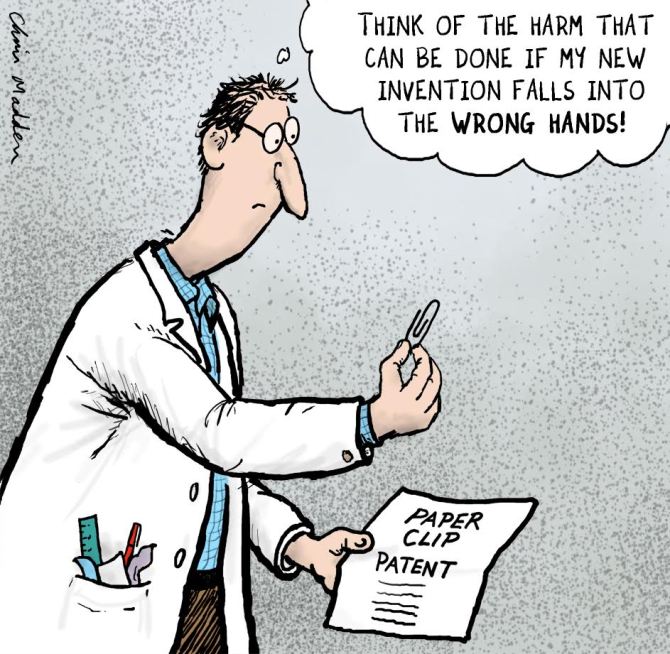

- At the risk of exaggerating it, this deskilling argument sounds to me like some physicians are worried that the “robots” are going to take their jobs!

- Another example provided to support the deskilling point is: “For example, in a study of 50 mammogram readers, there was a 14% decrease in diagnostic sensitivity when more discriminating readers were presented with challenging images marked by computer-aided detection”

- This is a selective presentation of the results in the cited study [Povyakalo2013MDM]. The study also notes “We found a positive association between computer prompts and improved sensitivity of the less discriminating readers for comparatively easy cases, mostly screen-detected cancers. This is the intended effect of correct computer prompts.” This must be noted, however small the increase may have been. Check the bottom of the post for more details.

Uncertainty in Medical Data

- In trying to show that ML-DSS are negatively affected by observer variability as well as inherent uncertainties in medical data, the Viewpoint says:

“inter-observer variability in the identification and enumeration of fluorescently stained circulating tumor cells was observed to undermine the performance of ML-DSS supporting this classification task”

- The study cited to support this statement [Svensson2015JIR] clearly notes “The random forest classifier turned out to be resilient to uncertainty in the training data while the support vector machine’s performance is highly dependent on the amount of uncertainty in the training data”. This does not support the above statement, and does not imply that all ML models (and hence ML-DSS) are seriously affected by uncertainties in input data.

- I agree with the authors on the existence of bias, uncertainties and variability in medical data in various forms and at different stages, and these are important factors to be considered. With the emergence of wearable tech and patient monitoring leading to unobtrusive collection of larger amounts of higher-quality patient data, I think the future of healthcare is looking bright [Hiremath2014Mobihealth].
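A rough way to probe the claim that classifiers differ in how much label uncertainty hurts them is to flip a fraction of the training labels and then score on clean test labels. The sketch below is a toy under stated assumptions: the data are synthetic 2-D points (not medical data), and the two classifiers (nearest-centroid and 1-nearest-neighbour) are stand-ins chosen for brevity. It uses neither the random forest nor the support vector machine from [Svensson2015JIR], and all function names are hypothetical.

```python
import random

def make_data(n, rng):
    """Two synthetic, well-separated 2-D Gaussian classes (illustrative only)."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 2.0 if label == 1 else -2.0
        point = (rng.gauss(center, 1.0), rng.gauss(center, 1.0))
        data.append((point, label))
    return data

def flip_labels(data, rate, rng):
    """Mimic annotator disagreement: flip each label with probability `rate`."""
    return [(x, 1 - y if rng.random() < rate else y) for x, y in data]

def sq_dist(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def fit_centroid(train):
    """Nearest-centroid classifier: averages over all points of each class."""
    centroids = {}
    for label in (0, 1):
        pts = [x for x, y in train if y == label]
        centroids[label] = (sum(p[0] for p in pts) / len(pts),
                            sum(p[1] for p in pts) / len(pts))
    return lambda x: min(centroids, key=lambda c: sq_dist(x, centroids[c]))

def fit_1nn(train):
    """1-nearest-neighbour classifier: copies the label of the closest point."""
    return lambda x: min(train, key=lambda item: sq_dist(x, item[0]))[1]

def accuracy(predict, test):
    return sum(predict(x) == y for x, y in test) / len(test)

def noise_experiment(rate, seed=0):
    """Train on noisy labels, evaluate on clean labels."""
    rng = random.Random(seed)
    train = flip_labels(make_data(200, rng), rate, rng)
    test = make_data(200, rng)  # test labels are left clean
    return {"centroid": accuracy(fit_centroid(train), test),
            "1nn": accuracy(fit_1nn(train), test)}

for rate in (0.0, 0.1, 0.3):
    print(rate, noise_experiment(rate))
```

On toy data like this, one would typically expect the averaging classifier to degrade far less than 1-NN as `rate` grows. That is the general point: sensitivity to label noise is a property of the specific model and setup, not of ML as a whole.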

Importance of Context

In trying to show how ML-DSS made mistakes in the past by not using explicit rules, the Viewpoint makes the following statements:

- “However, machine learning models do not apply explicit rules to the data they are provided, but rather identify subtle patterns within those data.”

- While most ML models were originally designed to learn existing patterns in data, they can certainly assist in automatically learning the rules [Kavsek2006AAI]. Moreover, learning patterns in data and applying explicit rules are not mutually exclusive tasks in ML. And it is possible to encode explicit knowledge-based rules into ML models such as decision trees.

- If ML models were incompletely trained (not providing enough samples for the known conditions, or not providing enough variety of conditions to reflect real-world scenarios), or insufficiently validated (against known and validated truths, e.g. asthma is not a protective factor for pneumonia, as was noted in another example), algorithms are not to be blamed for recommending what they were trained on (patients with asthma exhibited lower risk for pneumonia, as was observed in the particular dataset they were trained on).

- “this contextual information could not be included in the ML-DSS”

- This is simply wrong. At the risk of making a broad statement, I can say almost all types of information could be included in an ML model. If you can write it down or say it out loud, that information can be digitally represented and incorporated into an ML model. Whether a particular ML-DSS included context info, and why, is another discussion, and not incorporating context info into an ML-DSS is not the fault of ML models.
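To make the point about combining explicit rules with learned patterns concrete, here is a minimal sketch: a tiny decision tree whose root is a hand-written, knowledge-based rule and whose remaining split is a threshold learned from data. Everything in it is an assumption made for illustration: the records are made up, the feature names (`age`, `asthma`) and function names are hypothetical, and the rule is a stand-in for expert knowledge, not clinical guidance.

```python
from typing import Callable, Dict, List, Tuple

Patient = Dict[str, int]
Record = Tuple[Patient, int]  # (features, outcome: 1 = high risk)

def learn_stump(records: List[Record], feature: str) -> int:
    """Learn one split: the cut-off t for which 'feature >= t' best matches outcomes."""
    best_t, best_correct = None, -1
    for t in sorted({p[feature] for p, _ in records}):
        correct = sum((p[feature] >= t) == bool(y) for p, y in records)
        if correct > best_correct:
            best_t, best_correct = t, correct
    return best_t

def make_model(records: List[Record]) -> Callable[[Patient], int]:
    age_cut = learn_stump(records, "age")  # the learned part of the tree

    def predict(p: Patient) -> int:
        # Root node: explicit expert rule, applied before anything learned.
        # It stops the model from treating asthma as protective, whatever
        # the training data happen to suggest.
        if p["asthma"] == 1:
            return 1
        # Leaf split learned from data.
        return int(p["age"] >= age_cut)

    return predict

# Made-up records in which asthma patients happen to show good outcomes
# (for example, because they received more intensive care).
records: List[Record] = [
    ({"age": 30, "asthma": 0}, 0), ({"age": 42, "asthma": 0}, 0),
    ({"age": 55, "asthma": 0}, 0), ({"age": 67, "asthma": 0}, 1),
    ({"age": 71, "asthma": 0}, 1), ({"age": 80, "asthma": 0}, 1),
    ({"age": 35, "asthma": 1}, 0), ({"age": 75, "asthma": 1}, 0),
]

model = make_model(records)
print(model({"age": 40, "asthma": 1}))  # flagged by the explicit rule
print(model({"age": 40, "asthma": 0}))  # decided by the learned age split
```

The design choice worth noting is that the contextual knowledge lives in the model itself rather than in a separate manual check, so validation can exercise both parts together.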

Conclusions

- Viewpoint concludes: “Use of ML-DSS could create problems in contemporary medicine and lead to misuse.”

- This is hilarious and such a lazy argument. This sounds like “use of cars could create problems in modern transport and lead to misuse”. Did people misuse cars to do bad things? Sure. Did that prevent motor vehicles from revolutionizing human mobility? No. We are almost at the entry door for self-driving cars, thanks to ML and AI, to try to reduce human stress and accidents!

Where are the weaknesses in ML-DSS then?

For a general overview of challenges in designing a clinical DSS, refer to [Sittig2008JBI,Bright2012AIM]. A paper the Viewpoint cites to support the point on unintended consequences is titled “Some unintended consequences of information technology in health care” [Ash2004JAMIA], which notes that “many of these errors are the result of highly specific failures in patient care information systems (PCIS) design and/or implementation.” And that’s exactly where the blame should lie. The supporting paper goes on to say: “The errors fall into two main categories: those in the process of entering and retrieving information, and those in the communication and coordination process that the PCIS is supposed to support. The authors believe that with a heightened awareness of these issues, informaticians can educate, design systems, implement, and conduct research in such a way that they might be able to avoid the unintended consequences of these subtle silent errors”. The issues identified have nothing to do with the ML part per se; they have to do with data entry, access and communication! Hence, it is unfair to blame it all on machine learning, as the current title implies.

How would you do it?

Based on the main point the viewpoint is trying to make (which, to my reading of it, is garbage in, garbage out), a better title for this piece could be one of the following:

- “Contextual and Clinical information must be part of the design, training and validation of a machine learning-based decision support system”.

- “Insufficient validation of the clinical decision support systems could have unintended consequences”

- or if the authors really wish to highlight the unintended part, they could go for “unintended consequences due to insufficient validation of decision support systems”

Given the broad nature of the issues discussed, and the vast reach of JAMA publications (over 50K views in a few days with an altmetric over 570), it is important we do not exaggerate the concerns beyond what can be supported with current evidence. With great reach comes great responsibility.

Again, the issues raised by the viewpoint are important and we must discuss, evaluate and resolve them. We could certainly use more validation of ML-DSS, but exaggerated concerns and attributing the past failures specifically to ML are not well supported. I understand the authors’ limitations in writing a JAMA Viewpoint (very short: 1200 words, few references, etc.). Hence, I recommend that they publish a longer piece (various options are available on the internet) and build a better case. I’ll look forward to reading it and learning more.

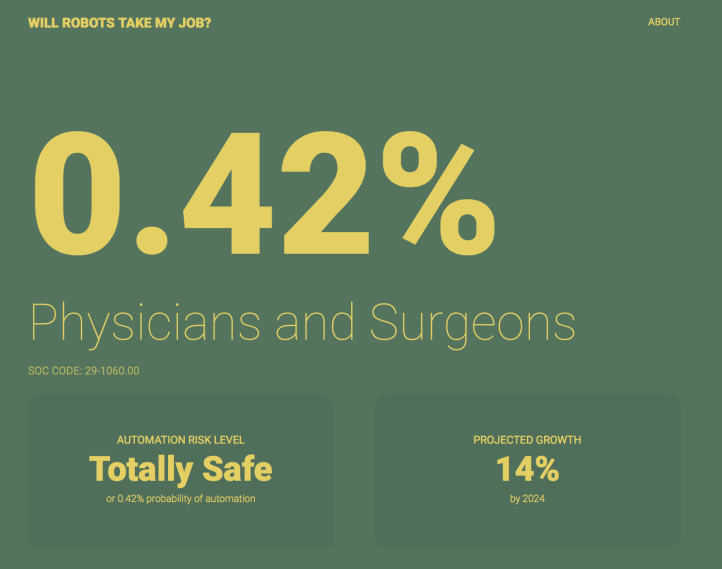

As for whether robots will take physicians’ jobs in the near future? Seems unlikely, with chances < 0.5%.

Conflict of interest: None.

Financial Disclosures: None.

Physician experience: None

Machine learning experience: Lots.

Disclaimer

Opinions expressed here are my own. They do not reflect the opinions or policy of any of my current, former or future employers or friends! Moreover, these comments are intended to continue the discussion on important issues, and are not intended to be in any way personal, or attack the credibility of any person or organization.

More details

- The mammogram study [Povyakalo2013MDM] notes the following in its abstract: “Use of computer-aided detection (CAD) was associated with a 0.016 increase in sensitivity (95% confidence interval [CI], 0.003–0.028) for the 44 least discriminating radiologists for 45 relatively easy, mostly CAD-detected cancers. However, for the 6 most discriminating radiologists, with CAD, sensitivity decreased by 0.145 (95% CI, 0.034–0.257) for the 15 relatively difficult cancers.”

- While it is certainly important to understand the causes of the decrease in sensitivity for the most discriminating readers with the assistance of CAD (as it is larger than the increase for the least discriminating readers), it is important to bear in mind that reader sensitivity is only one of a multitude of factors to be considered in evaluating the effectiveness of an ML-DSS [Pusic2004BCMJ]. The authors themselves recommend this at the end: “The quality of any ML-DSS and subsequent regulatory decisions about its adoption should not be grounded only in performance metrics, but rather should be subject to proof of clinically important improvements in relevant outcomes compared with usual care, along with the satisfaction of patients and physicians.” Hence, the alarm about the use of ML-DSS leading to deskilling is weak at best, unless we see many large studies demonstrating this in a variety of DSS workflows.
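For readers less used to sensitivity figures, the reported changes can be restated as cancers detected per 100 cancers read. The sketch below only restates the numbers quoted from the abstract above; the helper names are hypothetical, and the confidence bounds for the decrease are written with a negative sign so both groups use the same convention.

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of actual cancers that were detected."""
    return true_positives / (true_positives + false_negatives)

def per_100_cancers(delta_sensitivity):
    """Change in detected cancers per 100 cancers read, for a given
    absolute change in sensitivity."""
    return round(delta_sensitivity * 100, 1)

# (change, lower 95% bound, upper 95% bound), as quoted from the abstract.
reported = {
    "44 least discriminating readers, 45 easier cancers": (0.016, 0.003, 0.028),
    "6 most discriminating readers, 15 harder cancers": (-0.145, -0.257, -0.034),
}

for group, (delta, low, high) in reported.items():
    print(f"{group}: {per_100_cancers(delta):+} per 100 "
          f"(95% CI {per_100_cancers(low):+} to {per_100_cancers(high):+})")
```

The two figures apply to different readers and different sets of cases, so they should be read side by side rather than added together.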

References

- Andel2012JHCF: Andel, C., Davidow, S. L., Hollander, M., & Moreno, D. A. (2012). The economics of health care quality and medical errors. Journal of health care finance, 39(1), 39.

- Ash2004JAMIA: Ash, J. S., Berg, M., & Coiera, E. (2004). Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. Journal of the American Medical Informatics Association, 11(2), 104-112.

- Bright2012AIM: Bright, T. J., Wong, A., Dhurjati, R., Bristow, E., Bastian, L., Coeytaux, R. R., … & Wing, L. (2012). Effect of clinical decision-support systems: a systematic review. Annals of internal medicine, 157(1), 29-43.

- Cabitza2017JAMA: Cabitza F, Rasoini R, Gensini GF. Unintended Consequences of Machine Learning in Medicine. JAMA. 2017;318(6):517–518. doi:10.1001/jama.2017.7797

- Chen2017NEJM: Chen, J. H., & Asch, S. M. (2017). Machine Learning and Prediction in Medicine-Beyond the Peak of Inflated Expectations. The New England journal of medicine, 376(26), 2507.

- Donaldson2000NAP: Donaldson, M. S., Corrigan, J. M., & Kohn, L. T. (Eds.). (2000). To err is human: building a safer health system (Vol. 6). National Academies Press.

- Hiremath2014Mobihealth: Hiremath, S., Yang, G., & Mankodiya, K. (2014, November). Wearable Internet of Things: Concept, architectural components and promises for person-centered healthcare. In Wireless Mobile Communication and Healthcare (Mobihealth), 2014 EAI 4th International Conference on (pp. 304-307). IEEE.

- Hoff2011HCMR: Hoff T. Deskilling and adaptation among primary care physicians using two work innovations. Health Care Manage Rev. 2011;36(4):338-348.

- Kavsek2006AAI: Kavšek, B., & Lavrač, N. (2006). APRIORI-SD: Adapting association rule learning to subgroup discovery. Applied Artificial Intelligence, 20(7), 543-583.

- Povyakalo2013MDM: Povyakalo AA, Alberdi E, Strigini L, Ayton P. How to discriminate between computer-aided and computer-hindered decisions. Med Decis Making. 2013;33(1):98-107.

- Pusic2004BCMJ: Martin Pusic, MD, Dr J. Mark Ansermino, FFA, MMed, MSc, FRCPC. Clinical decision support systems. BCMJ, Vol. 46, No. 5, June, 2004, page(s) 236-239.

- Sittig2008JBI: Sittig, D. F., Wright, A., Osheroff, J. A., Middleton, B., Teich, J. M., Ash, J. S., … & Bates, D. W. (2008). Grand challenges in clinical decision support. Journal of biomedical informatics, 41(2), 387-392.

- Svensson2015JIR: Svensson CM, Hübler R, Figge MT. Automated classification of circulating tumor cells and the impact of interobsever variability on classifier training and performance. J Immunol Res. 2015;2015:573165.

Update: This post has been updated to change the style of citation from numeric to author-year-journal format to improve accuracy and maintenance.

Original. Reposted with permission.

Bio: Pradeep Raamana is a Neuroimager. Machine learner. Yuuuuge data cruncher :). Photographer. Road tripper. Shuttler. Hiker. Nature lover. He tweets at @raamana_.
