New Standard Methodology for Analytical Models

Traditional methodologies for analytical modeling, such as CRISP-DM, have several shortcomings. Here we describe the friction points they cause and introduce a new approach, the Standard Methodology for Analytical Models (SMAM), which overcomes them.

By Olav Laudy (IBM Analytics, Asia-Pacific).

Summary

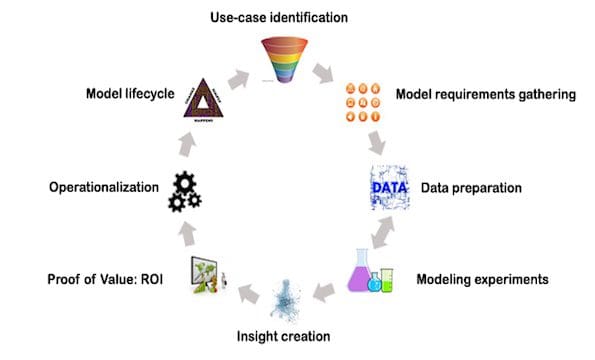

This document describes the Standard Methodology for Analytical Models (SMAM). A short overview of the SMAM phases can be found in Table 1. The most frequently used methodology is the Cross-Industry Standard Process for Data Mining (CRISP-DM)[1]. It has several shortcomings that translate into frequent friction points with the business when practitioners start building analytical models.

The document starts with a discussion of the CRISP-DM phases and highlights their shortcomings. The SMAM phases are then described. A set of tables is provided that can serve as guidance when defining the creation of a new analytical model.

Table 1

| Phase | Description |
|---|---|
| Use-case identification | Selection of the ideal approach from a list of candidates |
| Model requirements gathering | Understanding the conditions required for the model to function |
| Data preparation | Getting the data ready for modeling |
| Modeling experiments | Scientific experimentation to solve the business question |
| Insight creation | Visualization and dashboarding to provide insight |
| Proof of Value: ROI | Running the model in a small-scale setting to prove its value |
| Operationalization | Embedding the analytical model in operational systems |
| Model lifecycle | Governance around model lifetime and refresh |

Introduction

In recent decades, the creation and use of analytical models has become commonplace in every industry. Analytical models have evolved greatly, both in the depth of the mathematical techniques and in the widespread application of the results. The methodology for creating analytical models, however, is not well described. This is evident from the fact that the work of analytics practitioners (currently called data scientists; older names are statistician, data analyst and data miner) involves a lot of tacit knowledge: practical knowledge that is not easily reducible to articulated rules[2]. This informality can be seen in many areas of analytical modeling, ranging from project methodology and the creation of modeling and validation data to model-building approaches and model reporting. The focus of this document is project methodology.

The best-known project methodology for analytical processes is the Cross-Industry Standard Process for Data Mining (CRISP-DM)[1]. This methodology describes six phases that form an iterative approach to the development of analytical models. Although it describes the general approach to analytical model creation (Business understanding, Data understanding, Data preparation, Modeling, Evaluation, Deployment), it lacks the nuances needed to describe how analytical model building in a business context actually flows as an end-to-end process. These shortcomings translate into multiple friction points with the business when practitioners start building analytical models.

Shortcomings of the current methodology

Data scientists will readily recognize examples of these friction points. Friction points for the various phases include, but are not limited to, the following:

CRISP-DM: Business Understanding

Phase definition: this initial phase focuses on understanding the project objectives and requirements from a business perspective, and then converting this knowledge into a data mining problem definition, and a preliminary plan designed to achieve the objectives[3].

Issues: this phase is often interpreted as if only the data scientist needs to understand the business issues, while the business knows exactly what it wants. In reality, the business often intends to ‘make smarter decisions by using data’, but lacks an understanding of what analytical models are, how they can or should be used, and what realistic expectations around model effectiveness are. As such, the business itself needs to transform in order to work with analytical models.

Another issue with the Business Understanding phase is that project objectives and project requirements usually originate from different parts of the organization. The objectives typically come from a higher management level than the requirements. Ignoring this fact frequently leads to a situation where, after the model has been developed, its end-users are required to post-rationalize the model, which causes a lot of dissatisfaction.

CRISP-DM: Modeling

Phase definition: in this phase, various modeling techniques are selected and applied, and their parameters are calibrated to optimal values[3].

Issues: although this definition leaves room for trying out different techniques, it greatly underestimates the amount of real experimentation needed to arrive at viable results, especially if the use-case is not a common, well-known one. True experimentation may require switching to an entirely different data format, or even a different interpretation or adjustment of the business question.

CRISP-DM: Evaluation

Phase definition: at this stage in the project, you have built a model that appears to have high quality, from a data analysis perspective. Before proceeding to final deployment of the model, it is important to more thoroughly evaluate the model, and review the steps executed to construct the model, to be certain it properly achieves the business objectives[3].

Issue: thorough evaluation is indeed needed, yet CRISP-DM does not prescribe how to do it. As a result, evaluation is done on historic data: in the worst case on a hold-out partition of the training data, in a slightly better case on an out-of-time validation sample. As a model typically impacts an important part of the business, it is good practice to devise a proper experiment in which the model is tested in a limited fashion on new data. A proper experiment also includes an ROI calculation, which can be used to decide whether the model is good enough to be implemented. Model evaluations often lead to difficult discussions with the business, which invariably expects analytical models to be at least 90% accurate, irrespective of, and unconcerned with, the specific accuracy measure used. A limited experimental setting that results in an ROI computation can help turn this into a more productive discussion.
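To make the difference between these evaluation options concrete, here is a minimal Python sketch. It shows an out-of-time split (train on records observed before a cutoff date, validate on records observed after it) and computes the ROI of a limited experiment by comparing a group handled using the model with a comparable control group. The `Record` type, its field names and the ROI formula (incremental value minus cost, divided by cost) are illustrative assumptions, not part of SMAM or CRISP-DM.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Record:
    """Hypothetical historic record: observation date and observed outcome."""
    observed_on: date
    outcome: int


def out_of_time_split(records, cutoff):
    """Split records by time instead of at random.

    Records observed before `cutoff` are used for training. Records observed on or
    after `cutoff` form the out-of-time validation sample, so the model is judged
    on a period it has never seen.
    """
    train = [r for r in records if r.observed_on < cutoff]
    validate = [r for r in records if r.observed_on >= cutoff]
    return train, validate


def experiment_roi(treated_value, treated_n, control_value, control_n, cost):
    """ROI of a limited experiment (assumed formula: net gain / cost).

    treated_value / treated_n: total business value and size of the group handled
        using the model.
    control_value / control_n: the same for a comparable group handled as usual.
    cost: cost of building and running the model during the experiment.
    """
    # Compare value per member so that groups of different sizes can be used.
    uplift_per_member = treated_value / treated_n - control_value / control_n
    incremental_value = uplift_per_member * treated_n
    return (incremental_value - cost) / cost


records = [
    Record(date(2023, 1, 15), 0),
    Record(date(2023, 6, 1), 1),
    Record(date(2024, 2, 1), 1),
]
train, validate = out_of_time_split(records, cutoff=date(2024, 1, 1))
print(len(train), len(validate))  # → 2 1
print(experiment_roi(150_000, 1_000, 100_000, 1_000, 20_000))  # → 1.5
```

In the usage example, both groups have 1,000 members. The model-driven group yields 50 more per member than the control group. An incremental value of 50,000 against a cost of 20,000 gives an ROI of 1.5. A figure in these terms is often easier to discuss with the business than an abstract accuracy percentage.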

Another common issue with the evaluation phase is that the analytical model is not made sufficiently transparent to the parties involved. One of the 9 laws of data mining states that ‘Data mining amplifies perception in the business domain’[4]. This suggests that (lasting) visualization and dashboard reporting need to be an integral part of the model-building output. This concerns both what the model does (analytical reporting) and how the model impacts the business (operational reporting).

CRISP-DM: Deployment

Phase definition: depending on the requirements, the deployment phase can be as simple as generating a report or as complex as implementing a repeatable data scoring process[3].

Issues: model deployment needs to be discussed as early as use-case identification, considering the availability and timing of scoring data and the costs of integration. Beyond that, the view of a single deployment phase is too simplistic. Deployment itself involves multiple phases and needs to be defined with its own hand-over process. This is the moment when the data scientists hand over the resulting model to IT or to an operational team that will ensure continuous execution of the model. In addition, the model lifecycle needs to be taken into account, from both a model effectiveness and a model evolution perspective.
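One way to make the model effectiveness side of the lifecycle concrete is a periodic check that compares recent performance with the performance measured at validation time. The sketch below is a hypothetical illustration. The function name `needs_refresh`, the averaging over recent periods and the 10% tolerance are assumptions that would have to be agreed with the business, not prescribed values.

```python
from statistics import mean


def needs_refresh(baseline_score, recent_scores, max_relative_drop=0.10):
    """Return True when recent effectiveness has dropped too far below baseline.

    baseline_score: metric measured at validation or Proof of Value time
        (higher is better, e.g. precision or lift).
    recent_scores: the same metric measured on recent production periods.
    max_relative_drop: hypothetical tolerance; 0.10 means "more than 10% below baseline".
    """
    if not recent_scores:
        # Nothing observed in production yet: no evidence of decay.
        return False
    recent = mean(recent_scores)
    return recent < baseline_score * (1 - max_relative_drop)


# Baseline 0.80, so the refresh threshold is 0.72.
print(needs_refresh(0.80, [0.78, 0.79]))  # → False
print(needs_refresh(0.80, [0.65, 0.70]))  # → True
```

Averaging several recent periods keeps a single noisy period from triggering a refresh. The model evolution perspective, for example adding new data sources, would still require a separate decision process.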