The Artificial ‘Artificial Intelligence’ Bubble and the Future of Cybersecurity

What’s going on now in the field of ‘AI’ resembles a soap bubble. And we all know what happens to soap bubbles eventually if they keep getting blown up by the circus clowns (no pun intended!): they burst.

By Eugene Kaspersky, CEO and Co-Founder at Kaspersky Lab.

I think the recent article in the New York Times about the boom in ‘artificial intelligence’ in Silicon Valley made many people think hard about the future of cybersecurity – both the near and distant future.

I reckon questions like these have been pondered:

- Where is the maniacal preoccupation with ‘AI’, which for now exists only in the fantasies of futurologists, going to lead?

- How many more billions will investors put into ventures which, at best, will ‘invent’ what was invented decades ago and, at worst, will turn out to be nothing more than inflated marketing… dummies?

- What are the real opportunities for the development of machine learning cybersecurity technologies?

- And what will be the role of human experts in this brave new world?

“Sometimes when I hang around with A.I. enthusiasts here in the Valley, I feel like an atheist at a convention of evangelicals.”

–Jerry Kaplan, computer scientist, author, futurist and serial entrepreneur (including co-founder of Symantec)

Now, of course, without bold steps and risky investments a fantastical future will never become a reality. But the problem today is that, riding this wave of widespread enthusiasm for ‘AI’ (remember, AI doesn’t exist yet, hence the inverted commas), startup shell companies have started to appear.

A few startups? What’s the big deal, you might ask.

The big deal is that these shell startups are attracting not millions but billions of dollars in investment by riding the new wave of euphoria surrounding ‘AI’, which in practice means machine learning. Thing is, machine learning has been around for decades: it was first defined in 1959, got going in the 70s, flourished in the 90s, and is still flourishing! Fast forward to today, and this ‘new’ technology is rebranded as ‘artificial intelligence’: it adopts an aura of cutting-edge science, it gets the glossiest brochures, it gets the most glamorously sophisticated marketing campaigns. And all of that is aimed at the ever-present human weakness for believing in miracles, and in conspiracy theories about so-called ‘traditional’ technologies. Sadly, the cybersecurity field hasn’t escaped this new ‘AI’ bubble…

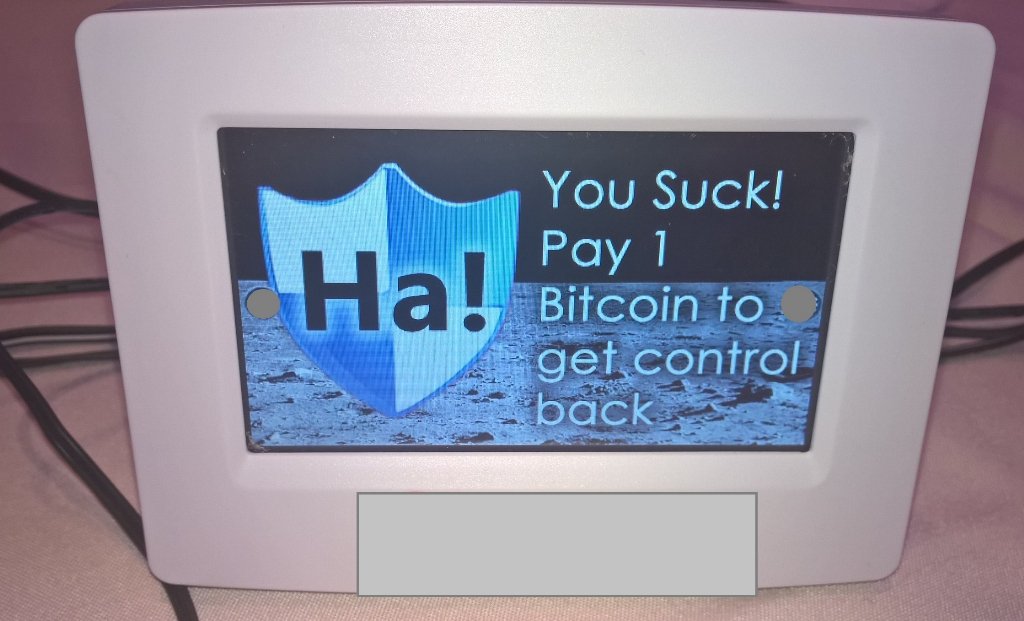

Here in cybersecurity, new ‘revolutionary’ products are rolled out which, as if by magic, at last solve all security issues and protect everyone and everything from every single cyberthreat out there in one fell swoop. Or so we’re told: the shell startups stop at nothing to present information in a way that manipulates public opinion and ensures widespread misunderstanding of the real situation.

In actual fact, under the hood of some of these ‘revolutionary’ products there’s no ‘latest technology’ at all. Instead there’s technology as dated as, say, the steam engine! But hey, who’s looking anyway? And who’ll allow anyone to take a look? I’ve written more about this not-looking situation elsewhere.

Some of these startups have even gotten as far as an IPO. Some of the initial venture capitalists may do well, if they resell quickly; but in the longer term the stock market is left thoroughly disappointed. After all, a loss-making business founded on BS marketing doesn’t pay dividends, and the sharp decline in its share price after the peak is unstoppable. Meanwhile, that shiny marketing pyramid machine needs constant funding, and it doesn’t come cheap.

Let’s back up a bit here.

Venture capitalism is called ‘venture’ capitalism because it involves risk. A good venture capitalist is one who knows where best to place funds, and how much, so as to come out with a good profit in n years. However, there’s a nuance when it comes to ‘AI’: not one significant AI-cybersecurity venture has made a profit yet! Er, so, like, why would any venture capitalist worth his silicon invest in an AI venture? Good question.

The main objective of the business model of most Silicon Valley startups (not all; there are some happy exceptions) is not to undertake serious, expensive research and come up with useful products/technologies that will sell well. Instead, they want to create bubbles: attract investors, sell up quickly at a share price based on the ‘valuation of future profit’, and then… well, then the mess (the massive losses) will already be someone else’s problem. But wait. It gets better: revenue actually only HAMPERS such a model!

“It’s not about how much you earn. It’s about what you’re worth. And who’s worth the most? Companies that lose money.”

Here’s a typical business model, as compressed into 75 seconds by the slick media type in the TV series Silicon Valley (outrageous and funny, but based on reality!).

AI bubbles are somewhat reminiscent of the 2008 US real estate bubble, which swiftly snowballed into the global financial crisis. The pyramid of investment products built on subprime mortgages suited a lot of people: millions of folks did very nicely from it, thank you very much; and tens of thousands of them did amazingly from it (thanks a million:). And then, boom: the pyramid came tumbling down, the world economy began to sway, and the holes were plugged with taxpayers’ money to avoid financial Armageddon, the taxpayers of course being those same folks and companies that had made the money in the first place. Not aware of the scandal of the decade? Then watch the film The Big Short.

As if that parallel weren’t bad enough, the AI bubble carries another danger: it could discredit machine learning, one of the most promising sub-fields in cybersecurity.

What’s so ‘dangerous’ about that? Well, it’s only thanks to machine learning that humanity hasn’t drowned in a gigantic mass of data, which over recent decades has increased a zillion-fold.

For example, in 10 years the number of malicious programs has risen roughly a thousandfold: at the start of the century we analyzed ~300 different pieces of malware each day; now that figure has had three zeros tacked onto the end of it. And by what factor has the number of our analysts grown? Four. So how did we keep up? Two words: machine learning.

Fast forward to today, and 99.9% of the attacks we detect are detected by our automated systems. So the analysts must be laughing, with so little to do? Anything but. They concentrate on fine-tuning these systems to make them more effective, and on developing new ones.
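To put the figures in this paragraph side by side, here is a back-of-the-envelope calculation that uses only the numbers quoted (~300 samples a day, three extra zeros, four times as many analysts). The variable names are illustrative, and the ‘per-analyst load’ is a hypothetical what-if that assumes no automation at all.

```python
# Figures quoted in the text; "per-analyst load" is a hypothetical what-if.
samples_per_day_then = 300                          # ~300 new samples a day, early 2000s
samples_per_day_now = samples_per_day_then * 1000   # "three zeros tacked on"
analyst_growth = 4                                  # analyst headcount grew fourfold

malware_growth = samples_per_day_now / samples_per_day_then
# With no automation, each analyst would face this many times the old workload.
load_per_analyst_growth = malware_growth / analyst_growth

print(f"Daily samples now: {samples_per_day_now:,}")
print(f"Malware growth: {malware_growth:.0f}x")
print(f"Per-analyst load without automation: {load_per_analyst_growth:.0f}x")
```

In other words, without automation each analyst would be facing roughly 250 times the early-2000s workload; that is the gap the paragraph credits machine learning with closing.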

For example, we have experts who do nothing but uncover reeeaaal complex cyberattacks, who then transfer their new knowledge and skills to our automated systems. Then there are data science experts who experiment with different models and methods of machine learning.

Unlike the shell startups, we protect users via a gigantic cloud infrastructure that can solve much more complex tasks quickly and effectively. And it’s precisely for that reason that we apply many different machine learning models.

The only cyberattacks we investigate manually are the occasional most complex ones we come across. Yet even with machines having taken over almost completely, we still struggle to find enough specialist staff. What’s more, the list of specific requirements we place on potential KL analysts keeps growing.

Sooner or later the ‘artificial intellect saves the world’ circus act will come to an end. Experts will finally be allowed to test the BS ‘AI’ products, customers will realize they’ve been ripped off, and investors will lose interest. But how will machine learning develop further?

“Silicon Valley has faced false starts with A.I. before. During the 1980s, an earlier generation of entrepreneurs also believed that artificial intelligence was the wave of the future, leading to a flurry of start-ups. Their products offered little business value at the time, and so the commercial enthusiasm ended in disappointment, leading to a period now referred to as the ‘A.I. winter.’”

–John Markoff, New York Times

In the aftermath of the AI bubble popping, all related fields will inevitably suffer. “Machine learning? Neural networks? Behavioral detection? Cognitive analysis? Arrrgh – more buzzwords à la artificial intelligence? No thanks: don’t wanna know. Actually, wouldn’t touch any of that with a barge pole!”

It gets worse: the curse of the AI-bubble will dull interest in promising technologies for many years, just like in the 80s.

Still, established vendors will continue to invest in smart technologies. For example, we’ve introduced boosting and decision tree learning technologies for detecting sophisticated targeted attacks and for proactive protection against future threats (yep, threats that don’t exist yet!).
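The post doesn’t say how these boosting and decision-tree models are built. To make the two terms concrete, here is a minimal textbook sketch of one classic boosting algorithm, AdaBoost, over one-level decision trees (‘stumps’), in plain Python. The features, data and function names are hypothetical toy choices; this illustrates the general technique and is not Kaspersky’s technology.

```python
import math

def fit_stump(X, y, w):
    """Return (weighted_error, feature, threshold, polarity) of the best one-level tree."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            for polarity in (1, -1):
                # Weighted error: total weight of the samples this split gets wrong
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if (polarity if xi[j] > thr else -polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, polarity)
    return best

def stump_predict(stump, x):
    _, j, thr, polarity = stump
    return polarity if x[j] > thr else -polarity

def adaboost(X, y, rounds=10):
    n = len(X)
    w = [1.0 / n] * n                    # start with uniform sample weights
    ensemble = []                        # list of (alpha, stump) pairs
    for _ in range(rounds):
        stump = fit_stump(X, y, w)
        err = max(stump[0], 1e-10)       # guard against division by zero for a perfect stump
        if err >= 0.5:                   # no better than chance: stop boosting
            break
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        if stump[0] == 0:                # training data already fit perfectly
            break
        # Misclassified samples gain weight, correctly classified ones lose it
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical toy "files": [byte entropy, number of suspicious API calls]
X = [[7.5, 9], [7.2, 12], [7.8, 2], [3.0, 15], [2.8, 1], [4.0, 2]]
y = [1, 1, -1, -1, -1, -1]              # +1 = malicious, -1 = benign (made-up labels)

model = adaboost(X, y, rounds=3)
print([predict(model, x) for x in X])
```

On this toy data no single stump classifies all six samples correctly (the best one misses one file), but after three rounds the weighted vote gets every sample right. That is the idea behind boosting: combining weak decision trees into a stronger classifier. A real system would use far richer features and a mature library.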

One particularly promising area of development is building an ever richer picture of how events correlate across all levels of an infrastructure, then analyzing that data landscape by machine to detect the most complex cyberattacks out there accurately and reliably. We’ve already implemented these features in our KATA Platform and are looking forward to developing more.

But how will honest machine learning startups fare? Alas, today’s abuse of the term ‘AI’ will only slow their development.

Note, however, that progress won’t stop: it will continue, albeit at a slower tempo.

Mankind will still slowly but surely move towards automation of everything under the sun – right down to the tiniest and most trivial of everyday processes. And it won’t be just automation, but adaptive interaction between man and machine – built on super-advanced machine learning algorithms. We’re seeing this adaptive interaction already, and the speed at which it is spreading into more and more different applications is sometimes scary.

The cybersecurity field will see ever-increasing automation too.

For example, we already have a solution for embedding security in the ‘smart cities’ paradigm (including various robotic aspects like automatic road traffic management) for safe control of critical infrastructure.

Meanwhile, the shortage of experts will become all the more acute, not so much because of the spread of technologies as because of the ever-increasing demands on personnel’s skills. Machine learning systems for cybersecurity require encyclopedic knowledge and specific honed skills from a broad range of fields (including big data, computer crime forensics and investigations, and system and applications programming). Getting all that broad and specific knowledge and experience into one human being is a rare feat: it’s what makes such people very exclusive/high-class/world-beating specialists. And teaching all that knowledge isn’t easy either. But it still needs doing if we want to see true development of smart technologies. There’s no other way.

So who’s gonna be in charge in this brave new world of the future? Will man still control the robots, or might the robots control man?

In 1999 Raymond Kurzweil put forward a theory regarding symbiotic intellect (though others had similar ideas before): the merging of man and machine – a cybernetic organism combining the intelligence of humans and the gigantic computing power of supercomputers (“humachine intelligence”). But this isn’t science fiction – it’s already happening. And its continued development is, IMHO, not only the most likely, but also the most beneficial route forward for the development of mankind.

But will that merging of man and machine go so far as to reach the point of technological singularity? Will man lose the ability to keep abreast of what’s going on, with the machines eventually seizing full and irreversible control over the world?

The key feature of purely machine AI is the ability to forever improve and perfect itself without human intervention, an ability that may grow and grow until it eventually steps outside the bounds of its algorithms. In other words, purely machine AI is a new form of intellect. And the distant, but theoretically likely, day of that ‘stepping outside the algorithm’ will signal the beginning of the end of our world as we know it. For the benefit of mankind, as per the laws of robotics, the machines could one day rid us of mental suffering and the burden of existence.

Or... maybe a programmer, as is so often the case, will leave a few bugs in the code? Let’s wait and see...

Bio: Eugene Kaspersky is CEO and Co-Founder of Kaspersky Lab. He has been fighting cyberattacks for 27 years, first as an anti-virus researcher, then as a businessman.

Original. Reposted with permission.
