Top 20 Deep Learning Papers, 2018 Edition

Deep Learning is evolving quickly. New techniques, tools and implementations are changing the field of Machine Learning and delivering excellent results.

Deep Learning, one of the subfields of Machine Learning and Statistical Learning, has advanced at an impressive pace in recent years. Cloud computing, robust open source tools and vast amounts of available data have been some of the levers for these breakthroughs. The top 20 papers were selected by citation count from academic.microsoft.com. These metrics change rapidly, so the citation counts shown should be read as the values at the time this article was published.

More than 75% of the papers in this list refer to deep learning and neural networks, specifically Convolutional Neural Networks (CNNs). Almost 50% of them refer to pattern recognition applications in the field of computer vision. I believe tools like TensorFlow and Theano, together with advances in the use of GPUs, have paved the way for data scientists and machine learning engineers to extend the field.

1. Deep Learning, by Yann L., Yoshua B. & Geoffrey H. (2015) (Cited: 5,716)

Deep learning allows computational models that are composed of multiple processing layers to learn representations of data with multiple levels of abstraction. These methods have dramatically improved the state-of-the-art in speech recognition, visual object recognition, object detection and many other domains such as drug discovery and genomics.

2. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems, by Martín A., Ashish A. B., Eugene B. C., et al. (2015) (Cited: 2,423)

The system is flexible and can be used to express a wide variety of algorithms, including training and inference algorithms for deep neural network models, and it has been used for conducting research and for deploying machine learning systems into production across more than a dozen areas of computer science and other fields, including speech recognition, computer vision, robotics, information retrieval, natural language processing, geographic information extraction, and computational drug discovery.

3. TensorFlow: a system for large-scale machine learning, by Martín A., Paul B., Jianmin C., Zhifeng C., Andy D. et al. (2016) (Cited: 2,227)

TensorFlow supports a variety of applications, with a focus on training and inference on deep neural networks. Several Google services use TensorFlow in production, we have released it as an open-source project, and it has become widely used for machine learning research.

4. Deep learning in neural networks, by Juergen Schmidhuber (2015) (Cited: 2,196)

This historical survey compactly summarises relevant work, much of it from the previous millennium. Shallow and deep learners are distinguished by the depth of their credit assignment paths, which are chains of possibly learnable, causal links between actions and effects. I review deep supervised learning (also recapitulating the history of backpropagation), unsupervised learning, reinforcement learning & evolutionary computation, and indirect search for short programs encoding deep and large networks.

5. Human-level control through deep reinforcement learning, by Volodymyr M., Koray K., David S., Andrei A. R., Joel V. et al. (2015) (Cited: 2,086)

Here we use recent advances in training deep neural networks to develop a novel artificial agent, termed a deep Q-network, that can learn successful policies directly from high-dimensional sensory inputs using end-to-end reinforcement learning. We tested this agent on the challenging domain of classic Atari 2600 games.
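
The learning signal behind a Q-network can be illustrated with the standard one-step Q-learning target. The sketch below is a minimal illustration, not the paper's agent: the function names and the discount value are assumptions chosen for this example, and the Q-values are passed in as plain numbers rather than produced by a neural network.

```python
from typing import Sequence


def q_learning_target(reward: float,
                      next_q_values: Sequence[float],
                      done: bool,
                      gamma: float = 0.99) -> float:
    """One-step Q-learning target: r + gamma * max_a' Q(s', a').

    `gamma` is an illustrative default for this sketch. When the episode
    has ended there is no next state, so the target is the reward alone.
    """
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def td_error(current_q: float, target: float) -> float:
    """Temporal-difference error that training would try to shrink."""
    return target - current_q


# Hypothetical numbers: reward 1.0, two possible actions in the next state.
target = q_learning_target(1.0, [0.5, 2.0], done=False, gamma=0.9)
error = td_error(current_q=2.0, target=target)
```

In a full agent, a network would estimate `next_q_values` from raw game frames and the error would drive gradient updates; this sketch covers only the arithmetic of the target.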

6. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks, by Shaoqing R., Kaiming H., Ross B. G. & Jian S. (2015) (Cited: 1,421)

In this work, we introduce a Region Proposal Network (RPN) that shares full-image convolutional features with the detection network, thus enabling nearly cost-free region proposals. An RPN is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position.

7. Long-term recurrent convolutional networks for visual recognition and description, by Jeff D., Lisa Anne H., Sergio G., Marcus R., Subhashini V. et al. (2015) (Cited: 1,285)

In contrast to current models which assume a fixed spatio-temporal receptive field or simple temporal averaging for sequential processing, recurrent convolutional models are “doubly deep” in that they can be compositional in spatial and temporal “layers”.

8. MatConvNet: Convolutional Neural Networks for MATLAB, by Andrea Vedaldi & Karel Lenc (2015) (Cited: 1,148)

It exposes the building blocks of CNNs as easy-to-use MATLAB functions, providing routines for computing linear convolutions with filter banks, feature pooling, and many more. This document provides an overview of CNNs and how they are implemented in MatConvNet and gives the technical details of each computational block in the toolbox.

9. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks, by Alec R., Luke M. & Soumith C. (2015) (Cited: 1,054)

In this work, we hope to help bridge the gap between the success of CNNs for supervised learning and unsupervised learning. We introduce a class of CNNs called deep convolutional generative adversarial networks (DCGANs), that have certain architectural constraints, and demonstrate that they are a strong candidate for unsupervised learning.

10. U-Net: Convolutional Networks for Biomedical Image Segmentation, by Olaf R., Philipp F. & Thomas B. (2015) (Cited: 975)

There is large consent that successful training of deep networks requires many thousand annotated training samples. In this paper, we present a network and training strategy that relies on the strong use of data augmentation to use the available annotated samples more efficiently.
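
To make the idea of data augmentation for segmentation concrete, here is a small sketch that applies the same random flip and rotation to an image and its annotation mask. It is an assumption-laden illustration: the transforms shown (horizontal flips and 90-degree rotations) are simple stand-ins chosen for this example and are not claimed to be the augmentations used in the paper, and `augment_pair` is a hypothetical helper name.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]  # a tiny 2D image or mask as nested lists


def hflip(grid: Grid) -> Grid:
    """Mirror each row left to right."""
    return [row[::-1] for row in grid]


def rot90(grid: Grid) -> Grid:
    """Rotate 90 degrees clockwise: reverse the row order, then transpose."""
    return [list(col) for col in zip(*grid[::-1])]


def augment_pair(image: Grid, mask: Grid,
                 rng: random.Random) -> Tuple[Grid, Grid]:
    """Apply one random transform to image and mask together.

    The mask must receive exactly the same transform as the image,
    otherwise the pixel-wise labels no longer line up.
    """
    if rng.random() < 0.5:
        image, mask = hflip(image), hflip(mask)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        image, mask = rot90(image), rot90(mask)
    return image, mask


rng = random.Random(0)  # seeded so the example is repeatable
image = [[1, 2], [3, 4]]
mask = [[0, 1], [1, 0]]
aug_image, aug_mask = augment_pair(image, mask, rng)
```

Each call yields another valid training pair from the same annotated sample, which is the sense in which augmentation uses the available annotations more efficiently.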

11. Conditional Random Fields as Recurrent Neural Networks, by Shuai Z., Sadeep J., Bernardino R., Vibhav V. et al. (2015) (Cited: 760)

We introduce a new form of convolutional neural network that combines the strengths of Convolutional Neural Networks (CNNs) and Conditional Random Fields (CRFs)-based probabilistic graphical modelling. To this end, we formulate mean-field approximate inference for the Conditional Random Fields with Gaussian pairwise potentials as Recurrent Neural Networks.

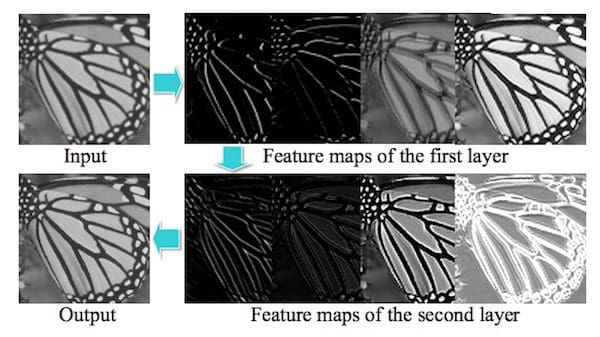

12. Image Super-Resolution Using Deep Convolutional Networks, by Chao D., Chen C., Kaiming H. & Xiaoou T. (2014) (Cited: 591)

Our method directly learns an end-to-end mapping between the low/high-resolution images. The mapping is represented as a deep convolutional neural network (CNN) that takes the low-resolution image as the input and outputs the high-resolution one.

13. Beyond short snippets: Deep networks for video classification, by Joe Y. Ng, Matthew J. H., Sudheendra V., Oriol V., Rajat M. & George T. (2015) (Cited: 533)

In this work, we propose and evaluate several deep neural network architectures to combine image information across a video over longer time periods than previously attempted.

14. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning, by Christian S., Sergey I., Vincent V. & Alexander A. A. (2017) (Cited: 520)

Very deep convolutional networks have been central to the largest advances in image recognition performance in recent years. With an ensemble of three residual and one Inception-v4, we achieve 3.08% top-5 error on the test set of the ImageNet classification (CLS) challenge.
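
For readers unfamiliar with the metric, the sketch below shows how a top-k error can be computed for an ensemble. It assumes the ensemble is formed by averaging each model's class probabilities, which is a common choice but an assumption here rather than a description of the paper's procedure. The function names and the toy numbers are hypothetical.

```python
from typing import List, Sequence


def average_predictions(
        model_probs: Sequence[Sequence[Sequence[float]]]) -> List[List[float]]:
    """Average class probabilities across models.

    model_probs[m][i][c] is the probability model m gives class c
    for example i.
    """
    n_models = len(model_probs)
    n_examples = len(model_probs[0])
    n_classes = len(model_probs[0][0])
    return [
        [sum(model_probs[m][i][c] for m in range(n_models)) / n_models
         for c in range(n_classes)]
        for i in range(n_examples)
    ]


def top_k_error(probs: Sequence[Sequence[float]],
                labels: Sequence[int],
                k: int = 5) -> float:
    """Fraction of examples whose true label is not among the k
    highest-scoring classes."""
    misses = 0
    for p, label in zip(probs, labels):
        ranked = sorted(range(len(p)), key=lambda c: p[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Toy data: two models, two examples, three classes.
model_a = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]
model_b = [[0.2, 0.7, 0.1], [0.1, 0.2, 0.7]]
ensemble = average_predictions([model_a, model_b])
error_top1 = top_k_error(ensemble, labels=[0, 2], k=1)
error_top2 = top_k_error(ensemble, labels=[0, 2], k=2)
```

With 1,000 ImageNet classes the same function with `k=5` gives the top-5 error quoted in the abstract.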

15. Salient Object Detection: A Discriminative Regional Feature Integration Approach, by Huaizu J., Jingdong W., Zejian Y., Yang W., Nanning Z. & Shipeng Li. (2013) (Cited: 518)

In this paper, we formulate saliency map computation as a regression problem. Our method, which is based on multi-level image segmentation, utilizes the supervised learning approach to map the regional feature vector to a saliency score.

16. Visual Madlibs: Fill in the Blank Description Generation and Question Answering, by Licheng Y., Eunbyung P., Alexander C. B. & Tamara L. B. (2015) (Cited: 510)

In this paper, we introduce a new dataset consisting of 360,001 focused natural language descriptions for 10,738 images. This dataset, the Visual Madlibs dataset, is collected using automatically produced fill-in-the-blank templates designed to gather targeted descriptions about: people and objects, their appearances, activities, and interactions, as well as inferences about the general scene or its broader context.

17. Asynchronous methods for deep reinforcement learning, by Volodymyr M., Adrià P. B., Mehdi M., Alex G., Tim H. et al. (2016) (Cited: 472)

The best performing method, an asynchronous variant of actor-critic, surpasses the current state-of-the-art on the Atari domain while training for half the time on a single multi-core CPU instead of a GPU. Furthermore, we show that asynchronous actor-critic succeeds on a wide variety of continuous motor control problems as well as on a new task of navigating random 3D mazes using a visual input.

18. Theano: A Python framework for fast computation of mathematical expressions, by Rami A., Guillaume A., Amjad A., Christof A. et al. (2016) (Cited: 451)

Theano is a Python library that allows to define, optimize, and evaluate mathematical expressions involving multi-dimensional arrays efficiently. Since its introduction, it has been one of the most used CPU and GPU mathematical compilers especially in the machine learning community and has shown steady performance improvements.

19. Deep Learning Face Attributes in the Wild, by Ziwei L., Ping L., Xiaogang W. & Xiaoou T. (2015) (Cited: 401)

This framework not only outperforms the state-of-the-art with a large margin, but also reveals valuable facts on learning face representation. (1) It shows how the performances of face localization (LNet) and attribute prediction (ANet) can be improved by different pre-training strategies. (2) It reveals that although the filters of LNet are fine-tuned only with image-level attribute tags, their response maps over entire images have strong indication of face locations.

20. Character-level convolutional networks for text classification, by Xiang Z., Junbo Jake Z. & Yann L. (2015) (Cited: 401)

This article offers an empirical exploration on the use of character-level convolutional networks (ConvNets) for text classification. We constructed several large-scale datasets to show that character-level convolutional networks could achieve state-of-the-art or competitive results.
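
A character-level network takes text as a sequence of one-hot character vectors rather than word tokens. The sketch below shows one way such an input could be built. The alphabet, the fixed length and the name `encode_text` are assumptions made for this illustration and are not the paper's settings.

```python
import string
from typing import List

# Illustrative alphabet (an assumption for this sketch).
ALPHABET = string.ascii_lowercase + string.digits + " "
CHAR_INDEX = {ch: i for i, ch in enumerate(ALPHABET)}


def encode_text(text: str, max_len: int = 16) -> List[List[int]]:
    """Turn text into a max_len x len(ALPHABET) one-hot matrix.

    Text is lowercased and truncated to max_len characters. Characters
    outside the alphabet, and padding positions past the end of the
    text, become all-zero rows.
    """
    rows = []
    for ch in text.lower()[:max_len]:
        row = [0] * len(ALPHABET)
        index = CHAR_INDEX.get(ch)
        if index is not None:
            row[index] = 1
        rows.append(row)
    while len(rows) < max_len:
        rows.append([0] * len(ALPHABET))
    return rows


matrix = encode_text("Deep nets!", max_len=16)
```

The resulting matrix has a fixed shape regardless of the input text, so a one-dimensional convolution can slide along the character axis.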
