Using Neural Networks to Design Neural Networks: The Definitive Guide to Understanding Neural Architecture Search

A recent survey outlined the main neural architecture search methods used to automate the design of deep learning systems.

Designing deep learning systems is hard and highly subjective. Even a midsize neural network can contain millions of nodes and hundreds of hidden layers. Given a specific deep learning problem, a large number of possible neural network architectures can serve as a solution. Typically, we rely on the expertise or subjective preferences of data scientists to settle on a specific approach, but that is highly impractical. Recently, neural architecture search (NAS) has emerged as an alternative by turning the design of deep learning systems into a machine learning problem in its own right. NAS is rapidly gaining popularity as an active area of research in the deep learning space. A few days ago, researchers from IBM published a survey of some of the most popular NAS methods that help us design more effective neural network architectures.

What is Neural Architecture Search?

The principles behind NAS are as simple as its implementation is complex. Conceptually, NAS methods use machine learning to find suitable architectures for deep learning models. At a high level, the design of a deep learning model's architecture is cast as a search problem over a set of decisions that define the different components of a neural network. The rising popularity of NAS has caused an explosion in the number of techniques in this area, which makes it increasingly hard to keep track of them. However, most NAS methods are based on two fundamental components:

- What to search for?: A search space that constrains the options available for the design of a specific neural network.

- How to search?: A search algorithm defined by an optimizer that interacts with the search space.

Those two components, the search space and the optimizer, help us understand the core NAS methods available today.
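To make the split between the two components concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the decisions in `SEARCH_SPACE`, the made-up `evaluate` score that stands in for training and validating a network, and the use of plain random search as the optimizer (a simple baseline, not one of the methods covered by the survey).

```python
import random

# Hypothetical search space: each decision maps to its allowed options.
SEARCH_SPACE = {
    "num_layers": [2, 4, 8],
    "operation": ["conv3x3", "conv5x5", "max_pool"],
    "width": [16, 32, 64],
}

def sample_architecture(space, rng):
    """Pick one option per decision: a single point in the search space."""
    return {name: rng.choice(options) for name, options in space.items()}

def evaluate(arch):
    """Stand-in for training and validating the network (a made-up score)."""
    score = 0.1 * arch["num_layers"] + 0.01 * arch["width"]
    if arch["operation"] == "conv3x3":
        score += 0.5
    return score

def random_search(space, n_trials, seed=0):
    """The simplest possible optimizer: sample, evaluate, keep the best."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(n_trials):
        arch = sample_architecture(space, rng)
        score = evaluate(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score
```

Calling `random_search(SEARCH_SPACE, 50)` returns the best sampled architecture and its score. The search space only says what is allowed; the optimizer decides which candidates to try, which is why the two can be swapped independently.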

Search Space

Let’s define a neural network as a function that transforms a series of inputs into a series of outputs using operations such as convolutions, pooling, activations, etc. From that perspective, the search space of a NAS model constrains the combinations of operations that can be applied to a given problem. In simpler terms, the search space refers to the set of feasible solutions of a NAS method. Given a deep learning problem, there are two fundamental groups of search spaces:

- Global Search Space: This space covers graphs that represent an entire neural architecture.

- Cell Search Space: This space focuses on discovering the architecture of specific cells that can be combined to assemble the entire neural network.

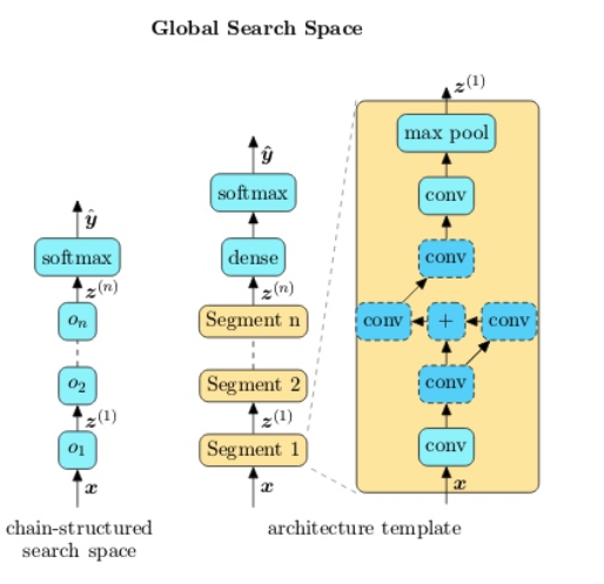

Global Search Space

The global search space is, by definition, the one that offers the most degrees of freedom in how the different operations in a neural network can be combined. An architecture template may be assumed, which limits the admissible structural choices within an architecture definition. This template often fixes certain aspects of the network graph. For instance, it may divide the architecture graph into several segments or enforce specific constraints on operations and connections both within and across these segments, thereby limiting the type of architectures that belong to a search space.

In principle, the global search space can be based on the order of operations applied in a neural network or based on higher level templates for a neural network architecture. Using those ideas, IBM identified three main types of global search spaces:

1) Chain-Structured: This search space consists of architectures that can be represented by an arbitrary sequence of ordered nodes such that, for any node, the previous node is its only parent.

2) Chain-Structured with Skips: A variation of the previous model that allows arbitrary skip connections between the ordered nodes of a chain-structured architecture. Members of this search space exhibit a wider variety of designs.

3) Architecture Template: This search space is based on architecture templates that separate neural network architectures into segments connected in a non-sequential form.
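The first two global search spaces can be sketched as parent lists over ordered nodes. This is only an illustrative encoding; the function names `chain_parents` and `add_skips` are hypothetical and do not come from the survey.

```python
def chain_parents(num_nodes):
    """Chain-structured: the only parent of node i is node i - 1."""
    return {i: ([i - 1] if i > 0 else []) for i in range(num_nodes)}

def add_skips(parents, skips):
    """Chain with skips: add extra edges (src, dst) that jump over nodes."""
    result = {node: list(p) for node, p in parents.items()}
    for src, dst in skips:
        # A skip must point forward and bypass at least one node.
        if not (0 <= src < dst - 1 and dst in result):
            raise ValueError(f"invalid skip connection {src} -> {dst}")
        if src not in result[dst]:
            result[dst].append(src)
    return result

chain = chain_parents(4)
with_skips = add_skips(chain, [(0, 2), (1, 3)])
```

Here `chain` gives every node a single parent, while `with_skips` lets nodes 2 and 3 also receive input from earlier nodes. Each extra set of skip edges is a new member of the search space, which is why the second space is so much larger than the first.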

Cell Search Space

A cell-based search space builds upon the observation that many effective handcrafted architectures are designed with repetitions of fixed structures. Such architectures often consist of smaller graphs that are stacked to form a larger architecture. Those smaller graphs are often referred to as cells. The main benefit of the cell-based search space is that it yields architectures that are smaller and more effective but can also be composed into larger architectures.

In the cell-based search space, a network is constructed by repeating a structure called a cell in a prespecified arrangement determined by a template. A cell is often a small directed acyclic graph representing a feature transformation.
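A rough sketch of that construction follows. The cell contents, the slot names in the template, and the `build_network` helper are all assumptions chosen for illustration; in a real NAS method the search would look for the edges of `CELL`, while the template stays fixed.

```python
# Hypothetical cell: a small DAG given as (source, target, operation) edges.
CELL = [
    (0, 1, "conv3x3"),
    (0, 2, "max_pool"),
    (1, 2, "identity"),
]

def edges_point_forward(edges):
    """With nodes numbered in order, forward-only edges rule out cycles."""
    return all(src < dst for src, dst, _ in edges)

def build_network(cell, template):
    """Repeat the cell wherever the template has a "cell" slot."""
    layers = []
    for i, slot in enumerate(template):
        if slot == "cell":
            layers.append({"name": f"cell_{i}", "edges": list(cell)})
        else:
            # Any other slot (e.g. "reduce") is a fixed, non-searched layer.
            layers.append({"name": f"{slot}_{i}", "edges": []})
    return layers

network = build_network(CELL, ["cell", "cell", "reduce", "cell"])
```

The network ends up with three copies of the same cell, so the search only has to decide on three edges rather than on the whole graph.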

Optimization Methods

After we define the search space, the next step is to choose the optimization method that explores it to find a neural network architecture. In recent years, researchers have explored a wide range of optimization paradigms, including reinforcement learning and evolutionary algorithms, for devising novel NAS methods. While the former learns a policy for creating networks that yield high-performing models, the latter explores a pool of candidates and modifies them with the objective of improving performance.

The optimizer in a NAS method faces a black-box optimization problem, which essentially means that it queries the target model to evaluate its performance during the search. At present, there are three main optimization models for NAS.

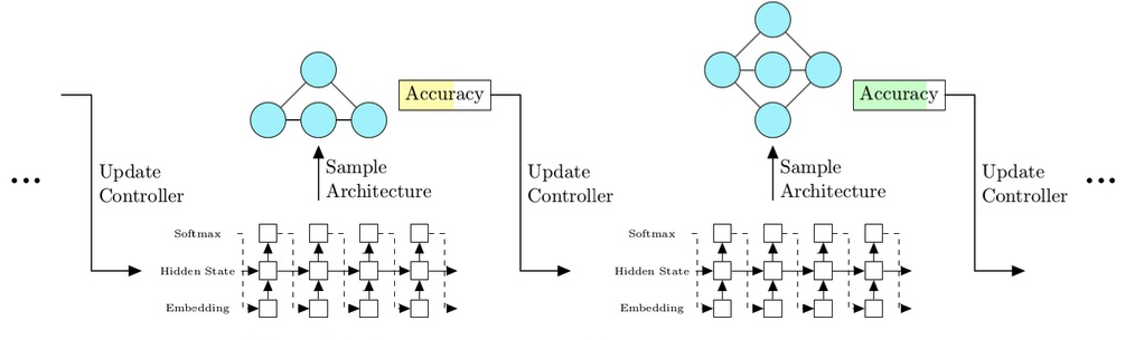

Reinforcement Learning

These optimization models leverage the action-reward duality of reinforcement learning: an agent modifies the architecture of a neural network and receives a reward based on its performance. In that model, a controller regularly updates a series of criteria in the neural network and evaluates the resulting accuracy.
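As a hedged illustration of the action-reward loop, the sketch below uses a tabular softmax policy trained with the REINFORCE update. The choice of REINFORCE, the `DECISIONS` table, and the made-up `reward` function (a stand-in for the accuracy of a trained network) are assumptions; real controllers are typically neural networks and the reward comes from actually training each candidate.

```python
import math
import random

# Hypothetical decisions the controller makes, one categorical choice each.
DECISIONS = {
    "operation": ["conv3x3", "conv5x5", "max_pool"],
    "width": [16, 32, 64],
}

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reward(arch):
    """Stand-in for the validation accuracy of the trained network (made up)."""
    return (1.0 if arch["operation"] == "conv3x3" else 0.0) + arch["width"] / 64

def train_controller(steps=500, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = {name: [0.0] * len(opts) for name, opts in DECISIONS.items()}
    baseline = 0.0
    for step in range(1, steps + 1):
        # Action: sample one option index per decision from the current policy.
        probs = {name: softmax(values) for name, values in logits.items()}
        picks = {name: rng.choices(range(len(p)), weights=p)[0]
                 for name, p in probs.items()}
        arch = {name: DECISIONS[name][i] for name, i in picks.items()}
        # Reward: how well the sampled architecture performs.
        r = reward(arch)
        advantage = r - baseline
        baseline += (r - baseline) / step  # running mean of rewards seen so far
        # REINFORCE: the gradient of the log-probability is one_hot - probs.
        for name, i in picks.items():
            for k, p in enumerate(probs[name]):
                logits[name][k] += lr * advantage * ((1.0 if k == i else 0.0) - p)
    return {name: softmax(values) for name, values in logits.items()}

policy = train_controller()
```

The returned `policy` holds one probability distribution per decision. Over many steps the update tends to shift probability toward choices that earned above-average rewards, which is the whole mechanism: no gradient ever flows through the evaluated network itself.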

Evolutionary Algorithms

Evolutionary algorithms are population-based global optimizers for black-box functions that consist of the following essential components: initialization, parent selection, recombination and mutation, and survivor selection. In the context of neural architecture search, the population consists of a pool of network architectures. A parent architecture or a pair of architectures is chosen in the parent selection step for mutation or recombination, respectively. Mutation and recombination are operations that lead to novel architectures in the search space. These are evaluated for fitness, and the process is repeated until termination.

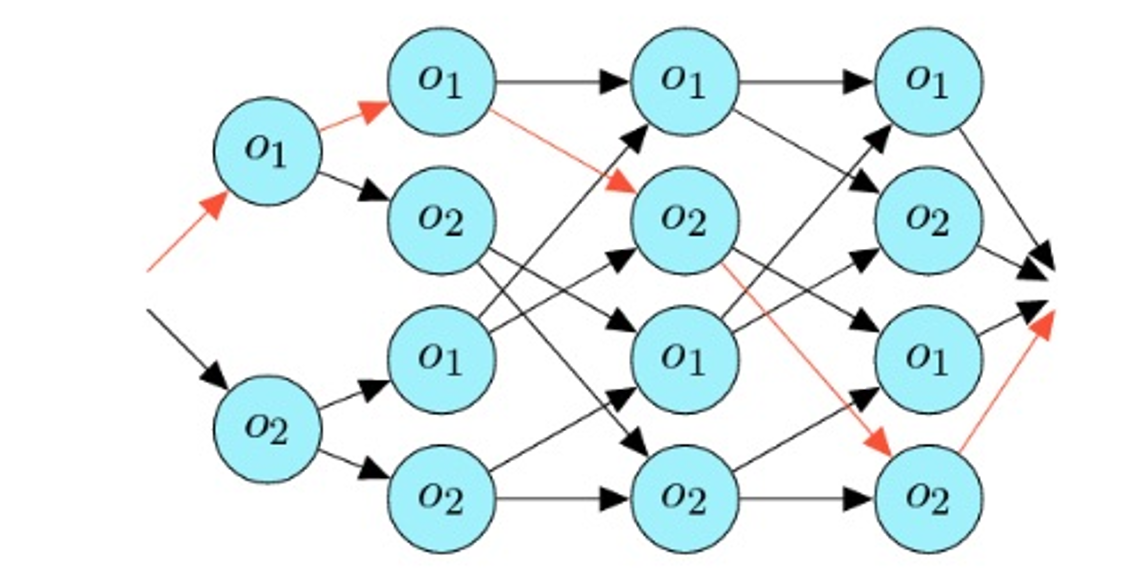
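The components of an evolutionary search can be sketched in a few lines. The encoding of an architecture as a fixed-length list of operations, the toy `fitness` function, tournament selection, and one-point crossover are all assumptions picked to keep the example short; they are not prescribed by the survey.

```python
import random

OPS = ["conv3x3", "conv5x5", "max_pool", "identity"]
ARCH_LENGTH = 6

def fitness(arch):
    """Stand-in for validation accuracy: counts conv3x3 layers (made up)."""
    return arch.count("conv3x3")

def mutate(arch, rng):
    """Replace the operation at one random position."""
    child = list(arch)
    child[rng.randrange(len(child))] = rng.choice(OPS)
    return child

def recombine(a, b, rng):
    """One-point crossover: prefix of one parent, suffix of the other."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def evolve(pop_size=8, generations=20, seed=0):
    rng = random.Random(seed)
    # Initialization: a pool of random architectures.
    population = [[rng.choice(OPS) for _ in range(ARCH_LENGTH)]
                  for _ in range(pop_size)]
    best_per_generation = [max(map(fitness, population))]
    for _ in range(generations):
        # Parent selection: two tournaments of size 3.
        parents = [max(rng.sample(population, 3), key=fitness) for _ in range(2)]
        # Recombination and mutation produce a novel architecture.
        child = mutate(recombine(parents[0], parents[1], rng), rng)
        # Survivor selection: keep only the pop_size fittest architectures.
        population = sorted(population + [child], key=fitness, reverse=True)[:pop_size]
        best_per_generation.append(fitness(population[0]))
    return population[0], best_per_generation

best, history = evolve()
```

Because survivor selection here always keeps the fittest architectures, the best fitness in `history` can never decrease from one generation to the next. Other survivor-selection schemes, such as removing the oldest member, trade that guarantee for more exploration.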

One-Shot Models

We define an architecture search method as one-shot if it trains a single neural network during the search process. This neural network is then used to derive architectures throughout the search space as candidate solutions to the optimization problem. This model uses an interconnected graph of possible components, in which each path represents a potential architecture.
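The sketch below shows only the graph-and-paths idea, not the training itself. The `SUPERNET` layout and the placeholder strings in `SHARED_WEIGHTS` are assumptions; in a real one-shot model those entries would be tensors trained once as part of the single network.

```python
import itertools

# Hypothetical one-shot graph: every layer holds all of its candidate operations.
SUPERNET = [
    ["conv3x3", "conv5x5"],           # layer 0 candidates
    ["conv3x3", "max_pool", "skip"],  # layer 1 candidates
    ["conv3x3", "skip"],              # layer 2 candidates
]

# One entry per (layer, operation), shared by every path that uses it.
# The strings are placeholders for weights trained once in the one-shot model.
SHARED_WEIGHTS = {
    (layer, op): f"weights[{layer}][{op}]"
    for layer, ops in enumerate(SUPERNET)
    for op in ops
}

def all_paths(supernet):
    """Each path (one operation per layer) is a candidate architecture."""
    return list(itertools.product(*supernet))

def weights_for(path):
    """A candidate reuses the shared weights instead of training from scratch."""
    return [SHARED_WEIGHTS[(layer, op)] for layer, op in enumerate(path)]

paths = all_paths(SUPERNET)
```

In this toy graph, 12 candidate architectures are covered by only 7 weight entries. That sharing is what lets a one-shot method score many candidates after training just one network.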

NAS is one of the most fascinating disciplines in the deep learning space. However, its popularity is also making the space incredibly crowded. The taxonomy based on search spaces and optimization models is a good starting point for understanding NAS methods, one of the most effective approaches to designing neural networks.

Original. Reposted with permission.
