Hugging Face Transformers Package – What Is It and How To Use It

The rapid development of Transformers has brought a new wave of powerful tools to natural language processing. These models are large and very expensive to train, so pre-trained versions are shared and leveraged by researchers and practitioners. Hugging Face offers a wide variety of pre-trained transformers as open-source libraries, and you can incorporate these with only one line of code.

Transformers

The Transformer in NLP is a novel architecture that aims to solve sequence-to-sequence tasks while handling long-range dependencies with ease. The Transformer was proposed in the paper Attention Is All You Need. It is recommended reading for anyone interested in NLP.

NLP-focused startup Hugging Face recently released a major update to their popular “PyTorch Transformers” library, which establishes compatibility between PyTorch and TensorFlow 2.0, enabling users to easily move from one framework to another during the life of a model for training and evaluation purposes.

The Transformers package contains over 30 pre-trained models covering 100 languages, along with eight major architectures for natural language understanding (NLU) and natural language generation (NLG):

- BERT (from Google);

- GPT (from OpenAI);

- GPT-2 (from OpenAI);

- Transformer-XL (from Google/CMU);

- XLNet (from Google/CMU);

- XLM (from Facebook);

- RoBERTa (from Facebook);

- DistilBERT (from Hugging Face).

The Transformers library no longer requires PyTorch to load models, is capable of training SOTA models in only three lines of code, and can pre-process a dataset with fewer than 10 lines of code. Sharing trained models also lowers computation costs and carbon emissions.

I am assuming that you are familiar with Transformers and the attention mechanism. The main aim of this article is to show how to use Hugging Face’s transformer library with TF 2.0.

Installation (You don't explicitly need PyTorch)

!pip install transformers

Getting started on a task with a pipeline

The easiest way to use a pre-trained model on a given task is to use pipeline(). Transformers provides the following tasks out of the box:

- Sentiment analysis: is a text positive or negative?

- Text generation (in English): provide a prompt, and the model will generate what follows.

- Named entity recognition (NER): in an input sentence, label each word with the entity it represents (person, place, etc.)

- Question answering: provide the model with some context and a question, extract the answer from the context.

- Filling masked text: given a text with masked words (e.g., replaced by [MASK]), fill the blanks.

- Summarization: generate a summary of a long text.

- Language Translation: translate a text into another language.

- Feature extraction: return a tensor representation of the text.

Pipelines encapsulate the overall flow of every NLP task:

- Tokenization: Split the initial input into multiple sub-entities with … properties (i.e., tokens).

- Inference: Maps every token into a more meaningful representation.

- Decoding: Use the above representation to generate and/or extract the final output for the underlying task.
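To make the three stages concrete, here is a minimal toy sketch in plain Python. It is not how Transformers implements them: the word-weight table and the function names (tokenize, infer, decode, toy_pipeline) are hypothetical and exist only to show how the stages chain together behind one call.

```python
# Toy illustration of the three pipeline stages; not the Transformers implementation.

# Hypothetical word-level "model": each known word carries a sentiment weight.
TOY_WEIGHTS = {"good": 1.0, "great": 2.0, "bad": -1.0, "awful": -2.0}


def tokenize(text):
    """Tokenization: split raw text into lowercase word tokens."""
    return text.lower().replace(".", " ").replace(",", " ").split()


def infer(tokens):
    """Inference: map every token to a numeric representation (here, one weight)."""
    return [TOY_WEIGHTS.get(token, 0.0) for token in tokens]


def decode(representations):
    """Decoding: turn the representations into the final task output."""
    total = sum(representations)
    label = "POSITIVE" if total >= 0 else "NEGATIVE"
    return {"label": label, "score": total}


def toy_pipeline(text):
    """Chain the three stages behind a single call."""
    return decode(infer(tokenize(text)))


result = toy_pipeline("The food was great, the service was bad.")
print(result)
```

A real pipeline swaps each stage for a learned component (a subword tokenizer, a Transformer model, and a task-specific head), but the flow of data is the same.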

The overall API is exposed to the end-user through the pipeline() method with the following structure.

GPT-2

GPT-2 is a large transformer-based language model with 1.5 billion parameters, trained on a dataset of 8 million web pages. GPT-2 is trained with a simple objective: predict the next word, given all of the previous words within some text.

Since the goal of GPT-2 is to make predictions, only the decoder mechanism is used. So GPT-2 is just transformer decoders stacked above each other.
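The "predict the next word, then repeat" idea can be sketched without any model at all. In the toy below, a hand-written lookup table stands in for GPT-2; the table and the generate function are hypothetical and only illustrate the autoregressive loop, in which each predicted word is appended to the input before the next prediction.

```python
# Toy next-word predictor: a bigram lookup table stands in for GPT-2.
# The table and function names are hypothetical, for illustration only.
NEXT_WORD = {
    "i": "like",
    "like": "to",
    "to": "play",
    "play": "cricket",
}


def generate(prompt, max_new_words=10):
    """Greedy autoregressive generation: append one predicted word at a time."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        # A real decoder conditions on all previous words; this toy only looks at the last one.
        next_word = NEXT_WORD.get(words[-1])
        if next_word is None:  # no known continuation: stop generating
            break
        words.append(next_word)
    return " ".join(words)


text = generate("I like")
print(text)
```

GPT-2 replaces the lookup table with a probability distribution over its whole vocabulary, which is why sampling from it can return several different continuations for the same prompt.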

GPT-2 displays a broad set of capabilities, including the ability to generate conditional synthetic text samples of unprecedented quality, where the model is comfortable with large input and can generate lengthy output.

Text generation

from transformers import pipeline, set_seed

generator = pipeline('text-generation', model='gpt2')

generator("Hello, I like to play cricket,", max_length=60, num_return_sequences=7)

Output:

[{'generated_text': "Hello, I like to play cricket, but I'd rather play football! So I've decided to create a new game, The Super Bombers, on the Xbox One.\n\nAnd as a reward, you will hear the official announcement for this game!\n\nHere is what you can expect"},

{'generated_text': 'Hello, I like to play cricket, but sometimes it\'s like being a bad sportsman," he says. "Sometimes I try and make cricket harder but sometimes I am just very happy and I always try to enjoy my cricket."\n\nWhile at Middlesex, Hautek was inspired by the'},

{'generated_text': 'Hello, I like to play cricket, but I can\'t really understand what "good" and "bad" is. Do you have a definition of "good" and "bad"?\n\nYes, I think so. I mean, people who are well trained probably don\'t have that problem with'},

{'generated_text': 'Hello, I like to play cricket, I play the game of cricket." The next day, he joined the family tour with his friends. It might have been a brief break for them both that he was so involved. A few days later they met at his cricket training centre, at which the pair'},

{'generated_text': "Hello, I like to play cricket, so I wanted to play English cricket... so I called up a friend of mine and, I remember, it wasn't really English, but it actually has lots of good stuff about it.\n\n\nDUPY: It's very interesting, especially as you"},

{'generated_text': 'Hello, I like to play cricket, but I don\'t really like playing cricket in a stadium full of tourists; there\'s not really any point in playing. We played that game almost three years ago for cricket.\n\n"My favourite time about being here was last year in England. It was'},

{'generated_text': 'Hello, I like to play cricket, too. The kids of the city always play a good match, I mean, the cricket team is always very young."'}]

generator("The Indian man worked as a", max_length=10, num_return_sequences=5)

Output:

[{'generated_text': 'The Indian man worked as a waiter in Delhi.'},

{'generated_text': 'The Indian man worked as a security guard for the'},

{'generated_text': 'The Indian man worked as a waiter for around ten'},

{'generated_text': 'The Indian man worked as a waiter on a Sunday'},

{'generated_text': 'The Indian man worked as a barista in the'}]

Sentiment analysis

# Allocate a pipeline for sentiment-analysis

classifier = pipeline('sentiment-analysis')

classifier('The secret of getting ahead is getting started.')

Output:

[{'label': 'POSITIVE', 'score': 0.9970657229423523}]

Question Answering

# Allocate a pipeline for question-answering

question_answerer = pipeline('question-answering')

question_answerer(

question="What is Newton's third law of motion?",

context='Newton\'s third law of motion states that, "For every action there is equal and opposite reaction"')

Output:

{'score': 0.6062518954277039,

'start': 42,

'end': 96,

'answer': '"For every action there is equal and opposite reaction"'}

nlp = pipeline("question-answering")

context = r"""

Microsoft was founded by Bill Gates and Paul Allen in 1975.

The property of being prime (or not) is called primality.

A simple but slow method of verifying the primality of a given number n is known as trial division.

It consists of testing whether n is a multiple of any integer between 2 and itself.

Algorithms much more efficient than trial division have been devised to test the primality of large numbers.

These include the Miller-Rabin primality test, which is fast but has a small probability of error, and the AKS primality test, which always produces the correct answer in polynomial time but is too slow to be practical.

Particularly fast methods are available for numbers of special forms, such as Mersenne numbers.

As of January 2016, the largest known prime number has 22,338,618 decimal digits.

"""

#Question 1

result = nlp(question="What is a simple method to verify primality?", context=context)

print(f"Answer 1: '{result['answer']}'")

#Question 2

result = nlp(question="When did Bill gates founded Microsoft?", context=context)

print(f"Answer 2: '{result['answer']}'")

Output:

Answer 1: 'trial division.'

Answer 2: '1975.'

BERT

BERT (Bidirectional Encoder Representations from Transformers) makes use of a Transformer, which learns contextual relations between words in a text. In its vanilla form, Transformer includes two separate mechanisms — an encoder that reads the text input and a decoder that produces a prediction for the task. Since BERT’s goal is to generate a language model, only the encoder mechanism is used. So BERT is just transformer encoders stacked above each other.

Text prediction

unmasker = pipeline('fill-mask', model='bert-base-cased')

unmasker("Hello, My name is [MASK].")

Output:

[{'sequence': '[CLS] Hello, My name is David. [SEP]',

'score': 0.007879073731601238,

'token': 1681,

'token_str': 'David'},

{'sequence': '[CLS] Hello, My name is Kate. [SEP]',

'score': 0.007307342253625393,

'token': 5036,

'token_str': 'Kate'},

{'sequence': '[CLS] Hello, My name is Sam. [SEP]',

'score': 0.007054011803120375,

'token': 2687,

'token_str': 'Sam'},

{'sequence': '[CLS] Hello, My name is James. [SEP]',

'score': 0.006197025533765554,

'token': 1600,

'token_str': 'James'},

{'sequence': '[CLS] Hello, My name is Charlie. [SEP]',

'score': 0.006146721541881561,

'token': 4117,

'token_str': 'Charlie'}]
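The score fields above look small because, as far as I understand the fill-mask pipeline, they are probabilities spread over the model's entire vocabulary. The sketch below shows that ranking step on a made-up four-word vocabulary: the logits are hypothetical values, and softmax and top_k are helper names introduced here, not library functions.

```python
import math

# Hypothetical raw scores (logits) for the masked position over a tiny vocabulary.
logits = {"David": 2.0, "Kate": 1.5, "Sam": 1.0, "table": -1.0}


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    max_score = max(scores.values())  # subtract the max for numerical stability
    exps = {token: math.exp(value - max_score) for token, value in scores.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def top_k(probabilities, k):
    """Return the k most probable tokens in a shape similar to the pipeline output."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    return [{"token_str": token, "score": score} for token, score in ranked[:k]]


predictions = top_k(softmax(logits), k=3)
for prediction in predictions:
    print(prediction)
```

With a vocabulary of tens of thousands of tokens and a prompt as open-ended as a person's name, even the best candidate receives only a small share of the probability mass.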

Text Summarization

#Summarization is currently supported by Bart and T5.

summarizer = pipeline("summarization")

ARTICLE = """The Apollo program, also known as Project Apollo, was the third United States human spaceflight program carried out by the National Aeronautics and Space Administration (NASA), which accomplished landing the first humans on the Moon from 1969 to 1972.

First conceived during Dwight D. Eisenhower's administration as a three-man spacecraft to follow the one-man Project Mercury which put the first Americans in space,

Apollo was later dedicated to President John F. Kennedy's national goal of "landing a man on the Moon and returning him safely to the Earth" by the end of the 1960s, which he proposed in a May 25, 1961, address to Congress.

Project Mercury was followed by the two-man Project Gemini (1962-66).

The first manned flight of Apollo was in 1968.

Apollo ran from 1961 to 1972, and was supported by the two-man Gemini program which ran concurrently with it from 1962 to 1966.

Gemini missions developed some of the space travel techniques that were necessary for the success of the Apollo missions.

Apollo used Saturn family rockets as launch vehicles.

Apollo/Saturn vehicles were also used for an Apollo Applications Program, which consisted of Skylab, a space station that supported three manned missions in 1973-74, and the Apollo-Soyuz Test Project, a joint Earth orbit mission with the Soviet Union in 1975.

"""

summary=summarizer(ARTICLE, max_length=130, min_length=30, do_sample=False)[0]

print(summary['summary_text'])

Output:

The first manned flight of Apollo ran from 1961 to 1972 . The Apollo program was followed by the two-man ProjectGemini . It was the third mission to land on the Moon .

Language translation

# English to German

translator_ger = pipeline("translation_en_to_de")

print("German: ",translator_ger("Joe Biden became the 46th president of U.S.A.", max_length=40)[0]['translation_text'])

# English to French

translator_fr = pipeline('translation_en_to_fr')

print("French: ",translator_fr("Joe Biden became the 46th president of U.S.A", max_length=40)[0]['translation_text'])

Output:

German: Joe Biden wurde der 46. Präsident der USA.

French: Joe Biden est devenu le 46e président des États-Unis

Conversation

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")

model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")

# Let's chat for 5 lines

for step in range(5):

    # encode the new user input, add the eos_token and return a PyTorch tensor

    new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

    # append the new user input tokens to the chat history

    bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

    # generate a response while limiting the total chat history to 1000 tokens

    chat_history_ids = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id)

    # pretty print the last output tokens from the bot

    print("DialoGPT: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))

Output:

>> User:Hi

DialoGPT: Hi! :D

>> User:How are you doing?

DialoGPT: I'm doing well! How are you?

>> User:I'm not that good.

DialoGPT: I'm sorry.

>> User:Thank you

DialoGPT: No problem. I'm glad you're doing well.

>> User:bye

DialoGPT: Bye! :D

Named Entity Recognition

from transformers import pipeline, set_seed

nlp_token_class = pipeline('ner')

nlp_token_class('Ronaldo was born in 1985, he plays for Juventus and Portugal. ')

Output:

[{'word': 'Ronald',

'score': 0.9978647828102112,

'entity': 'I-PER',

'index': 1},

{'word': '##o', 'score': 0.99903804063797, 'entity': 'I-PER', 'index': 2},

{'word': 'Juventus',

'score': 0.9977495670318604,

'entity': 'I-ORG',

'index': 11},

{'word': 'Portugal',

'score': 0.9991246461868286,

'entity': 'I-LOC',

'index': 13}]
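Notice that "Ronaldo" comes back as two pieces, 'Ronald' and '##o'. The '##' prefix is how BERT's WordPiece tokenizer marks a piece that continues the previous token. Below is a minimal sketch of gluing such pieces back together; merge_word_pieces is a hypothetical helper written for this article, not a library function, and it assumes that continuation pieces carry consecutive index values.

```python
# Entities copied from the output above.
entities = [
    {"word": "Ronald", "score": 0.9978647828102112, "entity": "I-PER", "index": 1},
    {"word": "##o", "score": 0.99903804063797, "entity": "I-PER", "index": 2},
    {"word": "Juventus", "score": 0.9977495670318604, "entity": "I-ORG", "index": 11},
    {"word": "Portugal", "score": 0.9991246461868286, "entity": "I-LOC", "index": 13},
]


def merge_word_pieces(items):
    """Glue '##' continuation pieces onto the token that precedes them."""
    merged = []
    for item in items:
        is_continuation = (
            item["word"].startswith("##")
            and merged
            and merged[-1]["last_index"] + 1 == item["index"]
        )
        if is_continuation:
            merged[-1]["word"] += item["word"][2:]  # drop the '##' marker
            merged[-1]["last_index"] = item["index"]
        else:
            merged.append(
                {"word": item["word"], "entity": item["entity"], "last_index": item["index"]}
            )
    return [(entry["word"], entry["entity"]) for entry in merged]


print(merge_word_pieces(entities))
```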

Feature Extraction

import numpy as np

nlp_features = pipeline('feature-extraction')

output = nlp_features('Deep learning is a branch of Machine learning')

np.array(output).shape # (Samples, Tokens, Vector Size)

Output:

(1, 10, 768)
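The pipeline returns one vector per token, so a common follow-up step is to pool the token vectors into a single fixed-size vector per sentence. The sketch below uses mean pooling on a random array with the same shape as the output above. The random data is a stand-in so the snippet runs without downloading a model, and mean pooling is one possible choice rather than something the pipeline does for you.

```python
import numpy as np

# Random stand-in for the pipeline output, with the shape reported above.
rng = np.random.default_rng(seed=0)
features = rng.normal(size=(1, 10, 768))  # (samples, tokens, vector size)

# Average over the token axis to get one fixed-size vector per sample.
sentence_vectors = features.mean(axis=1)

print(sentence_vectors.shape)  # (1, 768)
```

In practice you would replace the random array with np.array(output) from the feature-extraction pipeline.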

Zero-shot Learning

Zero-shot learning aims to solve a task without seeing any example of that task during training. Recognizing an object in an image when no example images of that object were available during training is one example of a zero-shot learning task.

classifier_zsl = pipeline("zero-shot-classification")

sequence_to_classify = "Bill gates founded a company called Microsoft in the year 1975"

candidate_labels = ["Europe", "Sports",'Leadership','business', "politics","startup"]

classifier_zsl(sequence_to_classify, candidate_labels)

Output:

{'sequence': 'Bill gates founded a company called Microsoft in the year 1975',

'labels': ['business',

'startup',

'Leadership',

'Europe',

'Sports',

'politics'],

'scores': [0.6144810318946838,

0.1874515861272812,

0.18227894604206085,

0.006684561725705862,

0.0063185556791722775,

0.0027852619532495737]}
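The result is a plain dictionary in which labels and scores are parallel lists, sorted from most to least likely. A short sketch of working with it follows. The scores are rounded copies of the values above, and the 0.15 threshold is an arbitrary value picked for this example.

```python
# Result dictionary copied from the output above (scores rounded for brevity).
result = {
    "sequence": "Bill gates founded a company called Microsoft in the year 1975",
    "labels": ["business", "startup", "Leadership", "Europe", "Sports", "politics"],
    "scores": [0.6145, 0.1875, 0.1823, 0.0067, 0.0063, 0.0028],
}

THRESHOLD = 0.15  # hypothetical cut-off, chosen only for this example

# Pair each label with its score and keep those above the threshold.
ranked = list(zip(result["labels"], result["scores"]))
kept = [label for label, score in ranked if score >= THRESHOLD]
best_label, best_score = max(ranked, key=lambda pair: pair[1])

print(best_label, best_score)
print(kept)
```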

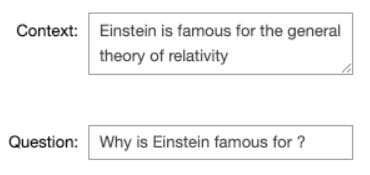

Using transformers in Widgets

import ipywidgets as widgets

from IPython.display import display

nlp_qaA = pipeline('question-answering')

# Text area holding the passage the model reads

context = widgets.Textarea(

    value='Einstein is famous for the general theory of relativity',

    placeholder='Enter something',

    description='Context:',

    disabled=False

)

# Single-line field holding the question

query = widgets.Text(

    value='What is Einstein famous for?',

    placeholder='Enter something',

    description='Question:',

    disabled=False

)

def forward(_):

    # Run the model only when both fields contain text

    if len(context.value) > 0 and len(query.value) > 0:

        output = nlp_qaA(question=query.value, context=context.value)

        print(output)

# Call forward() when the user presses Enter in the question field

query.on_submit(forward)

display(context, query)

Want a lighter model?

“Distillation comes into the picture”

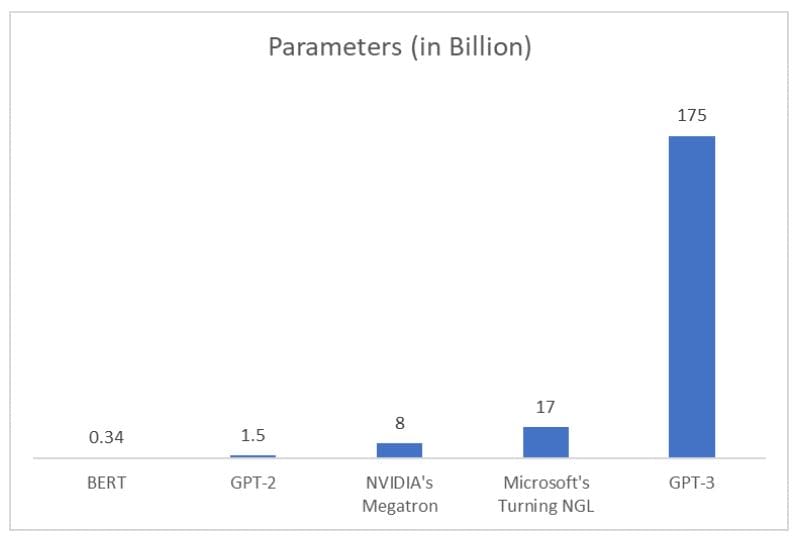

One of the main concerns when using Transformer-based models is the computational power they require. Throughout this article, we have used models such as BERT that can run on common machines, but that is not the case for all models.

For example, a few months ago Google released T5, an encoder/decoder architecture based on the Transformer and available in transformers, with up to 11 billion parameters. Microsoft also recently entered the game with Turing-NLG, which uses 17 billion parameters. Models of this kind require tens of gigabytes to store the weights and a tremendous compute infrastructure to run, which makes them impractical for most people!

With the goal of making Transformer-based NLP accessible to everyone, Hugging Face developed models that take advantage of a training process called distillation, which allows us to drastically reduce the resources needed to run such models with almost no drop in performance.
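To give a feel for what distillation optimizes, here is a minimal sketch of a generic knowledge-distillation loss: the small "student" model is trained to match the softened output distribution of the large "teacher". This is the textbook formulation and not necessarily the exact recipe used for DistilBERT. The logits, the temperature value, and the function names are assumptions made for this example.

```python
import numpy as np


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over the last axis."""
    scaled = np.asarray(logits, dtype=float) / temperature
    scaled = scaled - scaled.max(axis=-1, keepdims=True)  # numerical stability
    exps = np.exp(scaled)
    return exps / exps.sum(axis=-1, keepdims=True)


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's softened distribution and the student's."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return float(np.sum(teacher * (np.log(teacher) - np.log(student))))


# Hypothetical logits for one example over three classes.
teacher_logits = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]

print(distillation_loss(teacher_logits, close_student))
print(distillation_loss(teacher_logits, far_student))
```

The loss is near zero when the student's predictions track the teacher's and grows as they diverge, which is the signal that pushes the smaller model toward the larger model's behavior.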

Classifying text with DistilBERT and TensorFlow

You can find Classifying text with DistilBERT and TensorFlow in my Kaggle notebook.

You can also find Hugging Face Python notebooks on using transformers for solving various use cases.
