Natural Language Processing Pipelines, Explained

This article presents a beginner's view of NLP, as well as an explanation of how a typical NLP pipeline might look.

By Ram Tavva, Senior Data Scientist, Director at ExcelR Solutions

Introduction

Computers are best at dealing with structured datasets like spreadsheets and database tables. But we as humans hardly communicate in that way, most of our communications are in unstructured format - sentence, words, speech, and others, which is irrelevant to computers.

That’s unfortunate and tons of data present on the database are unstructured. But have you ever thought about how computers deal with unstructured data?

Yes, there are many solutions to this problem, but NLP is a game-changer as always.

Let’s learn more about NLP in detail...

What is NLP?

NLP stands for Natural Language Processing that automatically manipulates the natural language, like speech and text in apps and software.

Speech can be anything like text that the algorithms take as the input, measures the accuracy, runs it through self and semi-supervised models, and gives us the output that we are looking forward to either in speech or text after input data.

NLP is one of the most sought-after techniques that makes communication easier between humans and computers. If you use windows, there is Microsoft Cortana for you, and if you use macOS, Siri is your virtual assistant.

The best part is even the search engine comes with a virtual assistant. Example: Google Search Engine.

With NLP, you can type everything you would like to search, or you can click on the mic option and say, and you get the results you want to have. See how NLP is making communication easier between humans and computers. Isn’t it amazing when you see it?

Whether you want to know the weather conditions or breaking news on the internet, or roadmaps to your weekend destination NLP brings you everything you demand.

Natural Language Processing Pipelines (NLP Pipelines)

When you call NLP on a text or voice, it converts the whole data into strings, and then the prime string undergoes multiple steps (the process called processing pipeline.) It uses trained pipelines to supervise your input data and reconstruct the whole string depending on voice tone or sentence length.

For each pipeline, the component returns to the main string. Then passes on to the next components. The capabilities and efficiencies depend upon the components, their models, and training.

How NLP Makes Communication Easy Between Humans and Computers

NLP uses Language Processing Pipelines to read, decipher and understand human languages. These pipelines consist of six prime processes. That breaks the whole voice or text into small chunks, reconstructs it, analyzes, and processes it to bring us the most relevant data from the Search Engine Result Page.

Here are 6 Inside Steps in NLP Pipelines To Help Computer To Understand Human Language

Sentence Segmentation

When you have the paragraph(s) to approach, the best way to proceed is to go with one sentence at a time. It reduces the complexity and simplifies the process, even gets you the most accurate results. Computers never understand language the way humans do, but they can always do a lot if you approach them in the right way.

For example, consider the above paragraph. Then, your next step would be breaking the paragraph into single sentences.

- When you have the paragraph(s) to approach, the best way to proceed is to go with one sentence at a time.

- It reduces the complexity and simplifies the process, even gets you the most accurate results.

- Computers never understand language the way humans do, but they can always do a lot if you approach them in the right way.

# Import the nltk library for NLP processes import nltk # Variable that stores the whole paragraph text = "..." # Tokenize paragraph into sentences sentences = nltk.sent_tokenize(text) # Print out sentences for sentence in sentences: print(sentence)

When you have paragraph(s) to approach, the best way to proceed is to go with one sentence at a time.

It reduces the complexity and simplifies the process, even gets you the most accurate results.

Computers never understand language the way humans do, but they can always do a lot if you approach them in the right way.

Word Tokenization

Tokenization is the process of breaking a phrase, sentence, paragraph, or entire documents into the smallest unit, such as individual words or terms. And each of these small units is known as tokens.

These tokens could be words, numbers, or punctuation marks. Based on the word’s boundary - ending point of the word. Or the beginning of the next word. It is also the first step for stemming and lemmatization.

This process is crucial because the meaning of the word gets easily interpreted through analyzing the words present in the text.

Let’s take an example:

That dog is a husky breed.

When you tokenize the whole sentence, the answer you get is [‘That’, ‘dog’, ‘is’, a, ‘husky’, ‘breed’].

There are numerous ways you can do this, but we can use this tokenized form to:

- Count the number of words in the sentence.

- Also, you can measure the frequency of the repeated words.

Natural Language Toolkit (NLTK) is a Python library for symbolic and statistical NLP.

import nltk sentence_data = "That dog is a husky breed. They are intelligent and independent." nltk_tokens = nltk.sent_tokenize(sentence_data) print (nltk_tokens)

Output:

[‘That dog is a husky breed.’, ‘They are intelligent and independent.’]

Parts of Speech Prediction For Each Token

In a part of the speech, we have to consider each token. And then, try to figure out different parts of the speech - whether the tokens belong to nouns, pronouns, verbs, adjectives, and so on.

All these help to know which sentence we all are talking about.

Let’s knock out some quick vocabulary:

Corpus: Body of text, singular. Corpora are the plural of this.

Lexicon: Words and their meanings.

Token: Each “entity” that is a part of whatever was split up based on rules.

import nltk

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize, sent_tokenize

stop_words = set(stopwords.words('english'))

// Dummy text

txt = Everything is all about money.\

# sent_tokenize is one of the instances of

# PunktSentenceTokenizer from the nltk.tokenize.punkt module

tokenized = sent_tokenize(txt)

for i in tokenized:

# Word tokenizers is used to find the words

# and punctuation in a string

wordsList = nltk.word_tokenize(i)

# removing stop words from wordList

wordsList = [w for w in wordsList if not w in stop_words]

# Using a Tagger. Which is part-of-speech

# tagger or POS-tagger.

tagged = nltk.pos_tag(wordsList)

print(tagged)

Output:

[('Everything', 'NN'), ('is', 'VBZ'),

('all', 'DT'),('about', 'IN'),

('money', 'NN'), ('.', '.')]

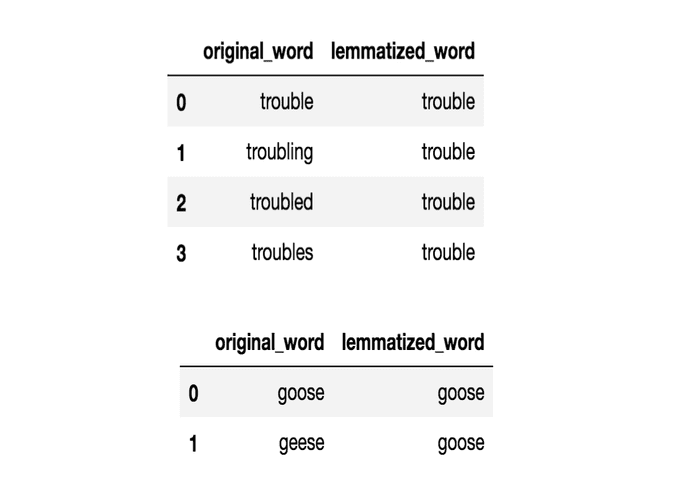

Text Lemmatization

English is also one of the languages where we can use various forms of base words. When working on the computer, it can understand that these words are used for the same concepts when there are multiple words in the sentences having the same base words. The process is what we call lemmatization in NLP.

It goes to the root level to find out the base form of all the available words. They have ordinary rules to handle the words, and most of us are unaware of them.

Identifying Stop Words

When you finish the lemmatization, the next step is to identify each word in the sentence. English has a lot of filler words that don’t add any meaning but weakens the sentence. It’s always better to omit them because they appear more frequently in the sentence.

Most data scientists remove these words before running into further analysis. The basic algorithms to identify the stop words by checking a list of known stop words as there is no standard rule for stop words.

One example that will help you understand identifying stop words better is:

# importing NLTK library stopwords

import nltk

from nltk.corpus import stopwords

nltk.download('stopwords')

nltk.download('punkt')

from nltk.tokenize import word_tokenize

## print(stopwords.words('english'))

# random sentence with lot of stop words

sample_text = "Oh man, this is pretty cool. We will do more such things."

text_tokens = word_tokenize(sample_text)

tokens_without_sw = [word for word in text_tokens if not word in stopwords.words('english')]

print(text_tokens)

print(tokens_without_sw)

Output:

Tokenize Texts With Stop Words:

[‘Oh’, ‘man’,’,’ ‘this’, ‘is’, ‘pretty’, ‘cool’, ‘.’, ‘We’, ‘will’, ‘do’, ‘more’, ‘such’, ’things’, ‘.’]

Tokenize Texts Without Stop Words:

[‘Oh’, ‘man’, ’,’ ‘pretty’, ‘cool’, ‘.’, ‘We’, ’things’, ‘.’]

Dependency Parsing

Parsing is divided into three prime categories further. And each class is different from the others. They are part of speech tagging, dependency parsing, and constituency phrasing.

The Part-Of-Speech (POS) is mainly for assigning different labels. It is what we call POS tags. These tags say about part of the speech of the words in a sentence. Whereas the dependency phrasing case: analyzes the grammatical structure of the sentence. Based on the dependencies in the words of the sentences.

Whereas in constituency parsing: the sentence breakdown into sub-phrases. And these belong to a specific category like noun phrase (NP) and verb phrase (VP).

Final Thoughts

In this blog, you learned briefly about how NLP pipelines help computers understand human languages using various NLP processes.

Starting from NLP, what are language processing pipelines, how NLP makes communication easier between humans? And six insiders involved in NLP Pipelines.

The six steps involved in NLP pipelines are - sentence segmentation, word tokenization, part of speech for each token. Text lemmatization, identifying stop words, and dependency parsing.

Bio: Ram Tavva is Senior Data Scientist, Director at ExcelR Solutions.

Related:

- 6 NLP Techniques Every Data Scientist Should Know

- Using NLP to improve your Resume

- Hugging Face Transformers Package – What Is It and How To Use It