Exclusive: Interview with Rich Sutton, the Father of Reinforcement Learning

My exclusive interview with Rich Sutton, the Father of Reinforcement Learning, on RL, Machine Learning, Neuroscience, the 2nd edition of his book, Deep Learning, Prediction Learning, AlphaGo, Artificial General Intelligence, and more.

I met Rich Sutton back in the 1980s, when he and I, both fresh PhDs, joined GTE Laboratories in the Boston area.

I was doing research into Intelligent Databases and he was working on Reinforcement Learning, but our GTE Labs projects were far from real-world deployment. We frequently played chess, where we were about evenly matched, but Rich was far ahead of me in Machine Learning. Rich is both a brilliant researcher and a very nice and modest person. He says in the interview below that the idea of "Reinforcement Learning" was obvious, but there is a huge distance between having an idea and developing it into a working, mathematically grounded theory, which is what Rich and Andrew Barto, his PhD thesis advisor, did for Reinforcement Learning. RL was a major part of the recent success of AlphaGo Zero, and if Artificial General Intelligence (AGI) is ever developed, RL is likely to play a major role in it.

Rich Sutton, Ph.D., is currently a professor of Computing Science and iCORE Chair at the University of Alberta, and a Distinguished Research Scientist at DeepMind. He is one of the founding fathers of Reinforcement Learning (RL), an increasingly important part of Machine Learning and AI. His significant contributions to RL include temporal-difference learning and policy gradient methods. He is the author (with Andrew Barto) of the widely acclaimed book "Reinforcement Learning: An Introduction", cited over 25,000 times, with a 2nd edition coming soon.

He received a BA in Psychology from Stanford (1978), and an MS (1980) and PhD (1984) in Computer Science from the University of Massachusetts Amherst. His doctoral dissertation, "Temporal Credit Assignment in Reinforcement Learning", introduced actor-critic architectures and "temporal credit assignment".

From 1985 to 1994, Sutton was a Principal Member of Technical Staff at GTE Laboratories. He then spent 3 years at UMass Amherst as a Senior Research Scientist, and after that 5 years at the AT&T Shannon Laboratory as a Principal Technical Staff Member. Since 2003 he has been Professor and iCORE Chair in the Department of Computing Science at the University of Alberta, where he leads the Reinforcement Learning and Artificial Intelligence Laboratory (RLAI). Since June 2017, Sutton has also co-led DeepMind's new Alberta office.

Rich also keeps a blog/personal page at incompleteideas.net.

Gregory Piatetsky: 1. What are the main ideas in Reinforcement Learning (RL), and how is it different from Supervised Learning?

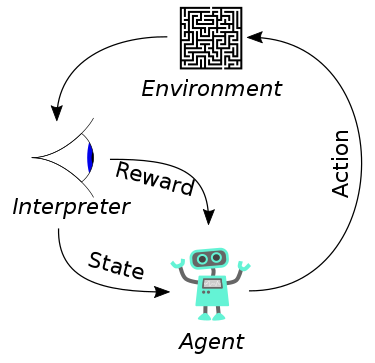

The typical RL scenario: an agent takes actions in an environment, which is interpreted into a reward and a representation of the state, which are fed back into the agent.

Source: Wikipedia

Rich Sutton:

Reinforcement learning is learning from rewards, by trial and error, during normal interaction with the world. This makes it very much like natural learning processes and unlike supervised learning, in which learning only happens during a special training phase in which a supervisory or teaching signal is available that will not be available during normal use.

For example, speech recognition is currently done by supervised learning, using large datasets of speech sounds and their correct transcriptions into words. The transcriptions are the supervisory signals that will not be available when new speech sounds come in to be recognized. Game playing, on the other hand, is often done by reinforcement learning, using the outcome of the game as a reward. Even when you play a new game you will see whether you win or lose, and can use this with reinforcement learning algorithms to improve your play. A supervised learning approach to game playing would instead require examples of "correct" moves, say from a human expert. This would be handy to have, but it is not available during normal play, and would limit the skill of the learned system to that of the human expert. In reinforcement learning you make do with less informative training information, with the advantage that that information is more plentiful and is not limited by the skill of the supervisor.
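The contrast between learning from a reward and learning from a "correct answer" can be made concrete with a minimal sketch. It assumes a hypothetical three-armed bandit with Gaussian rewards (the means below are made up for illustration) and uses epsilon-greedy action selection with sample-average value estimates, a standard textbook method rather than anything specific to this interview. The learner is never told which action is correct; it only sees the reward for the action it tried, and improves by trial and error.

```python
import random

def run_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Learn action values from reward alone (epsilon-greedy, sample averages).

    true_means is a hypothetical environment: the mean reward of each action.
    The learner never sees these means, only noisy rewards for chosen actions.
    """
    rng = random.Random(seed)
    k = len(true_means)
    q = [0.0] * k   # estimated value of each action
    n = [0] * k     # number of times each action has been tried
    total = 0.0
    for _ in range(steps):
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if rng.random() < epsilon:
            a = rng.randrange(k)
        else:
            a = max(range(k), key=lambda i: q[i])
        # The environment returns only a reward, never the "correct" action.
        r = rng.gauss(true_means[a], 1.0)
        n[a] += 1
        q[a] += (r - q[a]) / n[a]  # incremental sample-average update
        total += r
    return q, n, total / steps

if __name__ == "__main__":
    q, n, avg = run_bandit([0.2, 0.5, 1.0])
    print("estimates:", [round(v, 2) for v in q])
    print("pulls per action:", n)
```

With enough steps, the action with the highest mean reward ends up chosen most often, even though no supervisor ever labeled it as the best one.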

GP: 2. The second edition of your classic book with Andrew Barto, "Reinforcement Learning: An Introduction", is coming soon (when?). What are the main advances covered in the second edition, and can you tell us about the new chapters on the intriguing connections between reinforcement learning and psychology (Ch. 14) and neuroscience (Ch. 15)?

RS: The complete draft of the second edition is currently available on the web at richsutton.com. Andy Barto and I are putting the final touches on it: validating all the references, things like that. It will be printed in physical form early next year.

A lot has happened in reinforcement learning in the twenty years since the first edition. Perhaps the most important of these is the huge impact reinforcement learning ideas have had on neuroscience, where the now-standard theory of brain reward systems is that they are an instance of temporal-difference learning (one of the fundamental learning methods of reinforcement learning).

In particular, the theory now is that a primary role of the neurotransmitter dopamine is to carry the temporal-difference error, also called the reward-prediction error. This has been a huge development with many sources, ramifications, and tests, and our treatment in the book can only summarize them. This and other developments are covered in Chapter 15, and Chapter 14 summarizes their important precursors in psychology.
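For readers who want the quantity referred to above in symbols: in the book's standard notation for one-step TD learning (TD(0)), with reward $R_{t+1}$, state-value estimate $V$, discount rate $\gamma$, and step size $\alpha$, the temporal-difference error and the resulting value update are:

```latex
\begin{aligned}
\delta_t &= R_{t+1} + \gamma\, V(S_{t+1}) - V(S_t) \\
V(S_t) &\leftarrow V(S_t) + \alpha\, \delta_t
\end{aligned}
```

$\delta_t$ is positive when things turn out better than predicted and negative when they turn out worse; the dopamine hypothesis described above, roughly stated, identifies phasic dopamine activity with a signal of this kind.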

Overall, the second edition is about two-thirds larger than the first. There are now five chapters on function approximation instead of one. There are two new chapters on psychology and neuroscience. There is also a new chapter on the frontiers of reinforcement learning, including a section on its societal implications. And everything has been updated and extended throughout the book. For example, the new applications chapter covers Atari game playing and AlphaGo Zero.

GP: 3. What is Deep Reinforcement Learning, and how is it different from RL?

RS: Deep reinforcement learning is the combination of deep learning and reinforcement learning. These two kinds of learning address largely orthogonal issues and combine nicely. In short, reinforcement learning needs methods for approximating functions from data to implement all of its components (value functions, policies, world models, state updaters), and deep learning is the latest and most successful of recently developed function approximators. Our textbook covers mainly linear function approximators, while giving the equations for the general case. We cover neural networks in the applications chapter and in one section, but to learn fully about deep reinforcement learning one would have to complement our book with, say, the Deep Learning book by Goodfellow, Bengio, and Courville.
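To illustrate the linear case, here is a minimal sketch of semi-gradient TD(0) with a linear value function. The environment is an assumption for illustration: a hypothetical five-state random walk that starts in the middle state and gives a reward of 1 only for exiting on the right, so the true value of state s is s/6. The two-element feature vector (a bias and the scaled state index) is likewise a hand-picked, hypothetical choice. In deep RL, `v_hat` would be a neural network and the feature vector in the update would be replaced by the network's gradient with respect to its weights.

```python
import random

N_STATES = 5  # non-terminal states 1..5; states 0 and 6 are terminal

def features(s):
    # Hypothetical features: a bias term and the scaled state index.
    return [1.0, s / (N_STATES + 1)]

def v_hat(w, s):
    # Linear value estimate: dot product of weights and features.
    return sum(wi * xi for wi, xi in zip(w, features(s)))

def linear_td0(episodes=10000, alpha=0.005, gamma=1.0, seed=0):
    rng = random.Random(seed)
    w = [0.0, 0.0]
    for _ in range(episodes):
        s = (N_STATES + 1) // 2  # start in the middle state
        while True:
            s_next = s + rng.choice((-1, 1))
            reward = 1.0 if s_next == N_STATES + 1 else 0.0
            terminal = s_next in (0, N_STATES + 1)
            # Terminal states have value 0, so the target is just the reward.
            target = reward if terminal else reward + gamma * v_hat(w, s_next)
            delta = target - v_hat(w, s)
            # Semi-gradient step: for a linear v_hat the gradient is the feature vector.
            x = features(s)
            w = [wi + alpha * delta * xi for wi, xi in zip(w, x)]
            if terminal:
                break
            s = s_next
    return w

if __name__ == "__main__":
    w = linear_td0()
    print([round(v_hat(w, s), 2) for s in range(1, N_STATES + 1)])
```

Because the true values s/6 happen to be representable by these features, the learned estimates should settle close to them; with a poorer feature choice, TD would instead converge to the best approximation it can find in that feature space.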

GP: 4. RL has had great success in games, for example with AlphaGo Zero. In what other areas do you expect RL to do well?

RS: Well, of course I believe that in some sense reinforcement learning is the future of AI. Reinforcement learning is the best representative of the idea that an intelligent system must be able to learn on its own, without constant supervision. An AI has to be able to tell for itself if it is right or wrong. Only in this way can it scale to really large amounts of knowledge and general skill.

GP: 5. Yann LeCun commented that AlphaGo Zero's success is hard to generalize to other domains because it played millions of games a day, but you cannot run the real world faster than real time. Where is RL currently not successful (e.g., when feedback is sparse), and how can that be fixed?

RS: As Yann would readily agree, the key is to learn from ordinary unsupervised data. Yann and I would also agree, I think, that in the near term this will be done by focusing on "prediction learning". Prediction learning may shortly become a strong buzzword. It means just what you might think. It means predicting what will happen, and then learning based on what actually does happen. Because you learn from what happens, no supervisor is needed to tell you what you should have predicted. But because you find out what happens just by waiting, you do essentially have a supervisory signal. Prediction learning is the unsupervised supervised learning. Prediction learning is likely to be where big advances in applications will occur.

The only question is whether you want to see prediction learning more as an outgrowth of supervised learning or of reinforcement learning. Students of reinforcement learning know that reinforcement learning has a major subproblem known as the "prediction problem" and that solving it efficiently is the focus of much of the algorithmic work. In fact, the first paper discussing temporal-difference learning is entitled "Learning to predict by the methods of temporal differences".
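A minimal sketch of "predict, then learn from what actually happens", assuming a hypothetical data stream in which each value is 0.8 times the previous one plus Gaussian noise. The learner predicts the next value, waits to observe it, and uses that observation as its training target, so no labels are ever supplied. This uses a plain least-mean-squares (LMS) update, i.e. a supervised-learning rule applied to targets the world provides for free; TD methods extend the same idea to predictions about the longer-term future.

```python
import random

def prediction_learning(steps=20000, alpha=0.001, phi=0.8, seed=0):
    """Learn to predict the next value of a hypothetical AR(1) stream.

    phi is the (unknown to the learner) coefficient of the stream:
    x_next = phi * x + noise.
    """
    rng = random.Random(seed)
    w = 0.0  # learned weight: the prediction of the next value is w * x
    x = 0.0
    for _ in range(steps):
        prediction = w * x                      # predict what will happen next
        x_next = phi * x + rng.gauss(0.0, 1.0)  # wait and observe what happens
        error = x_next - prediction             # the observation is the target
        w += alpha * error * x                  # LMS (stochastic gradient) step
        x = x_next
    return w

if __name__ == "__main__":
    print(round(prediction_learning(), 3))
```

The learned weight should end up near the stream's coefficient of 0.8, recovered purely from the stream itself.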

GP: 6. When you worked on RL in the 1980s, did you think it would achieve such success?

RS: RL was definitely out of fashion in the 1980s. It essentially didn't exist as a scientific or engineering idea. But it was nevertheless an obvious idea. Obvious to psychologists and obvious to ordinary people. And so it seemed plain to me that it was the thing to work on and that it would eventually be recognized.

GP: 7. What are the next research directions for RL, and what are you working on now?

RS: Beyond prediction learning, I would say that a next big step will come when we have systems that plan with a learned model of the world. We currently have excellent planning algorithms, but only when the model is provided to them, as seen in all the game-playing systems, in which the model is provided by the rules of the game (and by self-play). But we don't have the analog of the rules of the game for the real world. We need the laws of physics, yes, but we also need to know a myriad of other things, from how to walk and see to how other people will respond to what we do. The Dyna system in Chapter 8 of our textbook describes an integrated planning and learning system, but it is limited in several ways. Chapter 17 sketches ways in which the limitations might be overcome. I will be starting from there.
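The Dyna idea mentioned above interleaves three processes: learning directly from real experience, learning a model of the world, and planning with that learned model. Below is a minimal tabular Dyna-Q sketch. It assumes a hypothetical six-state corridor with deterministic moves (start at state 0, reward 1 on reaching the right end), and its parameter values are illustrative, not taken from the book. Unlike the game-playing case, the model here is not given. It is built up from the agent's own transitions.

```python
import random

def dyna_q(n_states=6, episodes=30, planning_steps=10,
           alpha=0.5, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Dyna-Q on a hypothetical deterministic corridor."""
    rng = random.Random(seed)
    actions = (0, 1)  # 0 = move left, 1 = move right
    goal = n_states - 1
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    model = {}  # learned model: (state, action) -> (reward, next_state)

    def env_step(s, a):
        # The real environment, which the agent can only sample.
        s2 = max(0, s - 1) if a == 0 else min(goal, s + 1)
        return (1.0 if s2 == goal else 0.0), s2

    def greedy(s):
        best = max(q[(s, a)] for a in actions)
        return rng.choice([a for a in actions if q[(s, a)] == best])  # random tie-break

    def q_update(s, a, r, s2):
        # One-step Q-learning update; the goal state is terminal (value 0).
        target = r if s2 == goal else r + gamma * max(q[(s2, b)] for b in actions)
        q[(s, a)] += alpha * (target - q[(s, a)])

    steps_per_episode = []
    for _ in range(episodes):
        s, steps = 0, 0
        while s != goal:
            a = rng.choice(actions) if rng.random() < epsilon else greedy(s)
            r, s2 = env_step(s, a)   # real experience
            q_update(s, a, r, s2)    # direct RL
            model[(s, a)] = (r, s2)  # model learning (deterministic world)
            for _ in range(planning_steps):  # planning with simulated experience
                ps, pa = rng.choice(list(model))
                pr, ps2 = model[(ps, pa)]
                q_update(ps, pa, pr, ps2)
            s, steps = s2, steps + 1
        steps_per_episode.append(steps)
    return q, steps_per_episode

if __name__ == "__main__":
    q, steps = dyna_q()
    print("steps per episode:", steps)
```

Setting `planning_steps=0` reduces this to plain one-step Q-learning, which is a simple way to see how much planning with the learned model speeds up learning. The limitations the interview alludes to (for example, deterministic tabular models) are visible here too.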

GP: 8. RL may be central to the development of Artificial General Intelligence (AGI). What is your opinion: will researchers develop AGI in the foreseeable future? If so, will it be a great benefit to humanity or an existential threat, as Elon Musk warns?

RS: I view artificial intelligence as the attempt to understand the human mind by making things like it. As Feynman said, "What I cannot create, I do not understand." In my view, the main event is that we are about to genuinely understand minds for the first time. This understanding alone will have enormous consequences. It will be the greatest scientific achievement of our time and, really, of any time. It will also be the greatest achievement of the humanities of all time: to understand ourselves at a deep level. When viewed in this way it is impossible to see it as a bad thing. Challenging yes, but not bad. We will reveal what is true. Those who don't want it to be true will see our work as bad, just as when science dispensed with notions of soul and spirit it was seen as bad by those who held those ideas dear. Undoubtedly some of the ideas we hold dear today will be similarly challenged when we understand more deeply how minds work.

For more on the impact of AI on society, I would refer your readers to the final section of our textbook and to my comments in this video (https://www.youtube.com/watch?v=QqLcniN2VAk).

GP: 9. What do you like to do when you are away from computers and smartphones? What recent books have you read and liked?

RS: I am a lover of nature and a student of speculative ideas in philosophy, economics, and science. I recently read and enjoyed "Seveneves" by Neal Stephenson, "Sapiens" by Yuval Harari, and "The Creature from Jekyll Island" by G. Edward Griffin.

Related:

- Machine Learning Algorithms: Which One to Choose for Your Problem

- 3 different types of machine learning

- AlphaGo Zero: The Most Significant Research Advance in AI