Getting Started with spaCy for Natural Language Processing

spaCy is a Python natural language processing library specifically designed with the goal of being a useful library for implementing production-ready systems. It is particularly fast and intuitive, making it a top contender for NLP tasks.

In a series of previous posts, we have looked at some general ideas related to textual data science tasks, be they natural language processing, text mining, or something different yet closely related. The most recent of these posts was a text data preprocessing walkthrough using Python and NLTK. While we did not go any further than data preprocessing with NLTK, the toolkit could, in principle, be used for further analysis tasks.

While NLTK is a great natural language... well, toolkit (hence the name), it is not optimized for building production systems. This may or may not be of consequence if using NLTK only to preprocess your data, but if you are planning an end-to-end NLP solution and are selecting an appropriate tool to build said system, it may make sense to preprocess your data with the same.

While NLTK was built with learning NLP in mind, spaCy is specifically designed with the goal of being a useful library for implementing production-ready systems.

spaCy is designed to help you do real work — to build real products, or gather real insights. The library respects your time, and tries to avoid wasting it. It's easy to install, and its API is simple and productive. We like to think of spaCy as the Ruby on Rails of Natural Language Processing.

spaCy is opinionated, in that it does not allow for as much mixing and matching of what could be considered NLP pipeline modules, the argument being that particular lemmatizers, for example, are not optimized to play well with particular tokenizers. While the tradeoff is less flexibility in some aspects of your NLP pipeline, the result should be increased performance.

You can get a quick overview of spaCy and some of its design philosophy and choices in this video, a talk given by spaCy developers Matthew Honnibal and Ines Montani, from a SF Machine Learning meetup co-hosted with the Data Institute at USF.

spaCy bills itself as "the best way to prepare text for deep learning." Much of the previous walkthrough did not use NLTK at all (the task-dependent noise removal as well as a few steps in the normalization process), so we won't repeat the entire post here with spaCy swapped in for NLTK in particular spots. Instead, we will investigate how some of the functionality we employed NLTK for could be accomplished with spaCy, and then move on to some additional tasks which could be considered preprocessing, depending on your end goal, but which may also bleed into the subsequent steps of textual data science tasks.

Getting Started

Before doing anything, you need to have spaCy installed, as well as its English language model.

$ pip3 install spacy
$ python3 -m spacy download en

We need a sample of text to use:

sample = u"I can't imagine spending $3000 for a single bedroom apartment in N.Y.C."

Now let's import spaCy, along with displaCy (for visualizing some of spaCy's modeling) and a list of English stop words. We also load the English language model as a Language object (we will call it 'nlp', by spaCy convention), and then call the nlp object on our sample text, which returns a processed Doc object (which we cleverly call 'doc').

import spacy

from spacy import displacy

from spacy.lang.en.stop_words import STOP_WORDS

nlp = spacy.load('en')

doc = nlp(sample)

And there you have it. From the spaCy documentation:

Even though a Doc is processed – e.g. split into individual words and annotated – it still holds all information of the original text, like whitespace characters. You can always get the offset of a token into the original string, or reconstruct the original by joining the tokens and their trailing whitespace. This way, you'll never lose any information when processing text with spaCy.

This is core to spaCy's design philosophy; I encourage you to watch this video.
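To make the idea in that quote concrete, here is a minimal sketch in plain Python. It is not spaCy's tokenizer: it splits on whitespace only, and the names SimpleToken and simple_tokenize are made up for illustration. Each token remembers its character offset and its trailing whitespace, loosely mirroring what spaCy exposes as token.idx and token.whitespace_, so the original string can be rebuilt exactly.

```python
import re
from typing import List, NamedTuple


class SimpleToken(NamedTuple):
    """Hypothetical stand-in for a token; not a spaCy class."""
    text: str        # the token's characters
    whitespace: str  # whitespace that followed the token in the source
    idx: int         # character offset of the token in the source


def simple_tokenize(text: str) -> List[SimpleToken]:
    # Assumption: the text does not start with whitespace, since this
    # pattern would skip it. Each match is a run of non-space characters
    # plus whatever whitespace trails it.
    return [
        SimpleToken(m.group(1), m.group(2), m.start(1))
        for m in re.finditer(r"(\S+)(\s*)", text)
    ]


sample = "I can't imagine spending $3000 for a single bedroom apartment in N.Y.C."
tokens = simple_tokenize(sample)

# Joining every token with its trailing whitespace gives back the input.
reconstructed = "".join(t.text + t.whitespace for t in tokens)
print(reconstructed == sample)

# Each offset points back into the original string.
last = tokens[-1]
print(last.text, last.idx, sample[last.idx:last.idx + len(last.text)])
```

The design point is that nothing is thrown away at tokenization time: the whitespace is stored next to each token rather than discarded, which is what makes the round trip possible.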

Now let's try a few things. Note how little code is required to perform said things.

Tokenization

Printing out tokens of our sample is straightforward:

# Print out tokens
for token in doc:
    print(token)

I ca n't imagine spending $ 3000 for a single bedroom apartment in N.Y.C.

Recall from above that the text is processed as soon as it is passed to the Language object, which returns a Doc, and so tokenization has already been completed at this point; we are just accessing those existing tokens. From the documentation:

A Doc is a sequence of Token objects. Access sentences and named entities, export annotations to numpy arrays, losslessly serialize to compressed binary strings. The Doc object holds an array of TokenC structs. The Python-level Token and Span objects are views of this array, i.e. they don't own the data themselves.

We didn't discuss the specifics of spaCy's implementation, but getting to know what its underlying code looks like -- specifically how it was written (and why) -- gives you the insight needed to understand why it's so very fast. For a third time, I encourage you to watch this video.

Though it is not necessary, given differences in design, you can pull these tokens out into a list of their own (similar to how we did in the NLTK walkthrough):

# Store tokens as list, print out
tokens = [token for token in doc]
print(tokens)

[I, ca, n't, imagine, spending, $, 3000, for, a, single, bedroom, apartment, in, N.Y.C.]

Identifying Stop Words

Let's identify stop words. We imported the stop word list above, but each Token already carries an is_stop attribute, so it's just a matter of iterating through the tokens stored in the Doc object and checking that flag:

# Print out stop words
for word in doc:
    if word.is_stop:
        print(word)

ca for a in

Note that we did not touch the tokens list created above, and rely only on the pre-processed Doc object.
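Conceptually, flagging stop words is a membership test against a word list. Below is a minimal sketch of that comparison in plain Python, using the token strings printed earlier. The five-word set is a hypothetical, heavily abbreviated stand-in for the much larger imported list, and including "i" in it is an assumption made for the sake of the example.

```python
# Hypothetical, abbreviated stop list for illustration only; the list
# imported from spaCy is far larger.
STOP_WORDS_SUBSET = {"a", "ca", "for", "i", "in"}

# Token strings as printed in the tokenization section above.
token_texts = ["I", "ca", "n't", "imagine", "spending", "$", "3000",
               "for", "a", "single", "bedroom", "apartment", "in", "N.Y.C."]

# Exact comparison: "I" is missed because the list holds lowercase "i".
exact_matches = [t for t in token_texts if t in STOP_WORDS_SUBSET]

# Case-insensitive comparison: lowercase each token before the lookup.
lowercase_matches = [t for t in token_texts if t.lower() in STOP_WORDS_SUBSET]

print(exact_matches)
print(lowercase_matches)
```

Whether to lowercase before the lookup is a design choice worth making deliberately, since it changes which capitalized tokens get filtered out.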

Part-of-speech Tagging

A Doc object holds Token objects, and you can read the Token documentation for an idea of what data each token already possesses before we even ask for it, and for specific info on what is being shown below.

for token in doc:
    print(token.text, token.lemma_, token.pos_, token.tag_, token.dep_,
          token.shape_, token.is_alpha, token.is_stop)

I -PRON- PRON PRP nsubj X True False
ca can VERB MD aux xx True True
n't not ADV RB neg x'x False False
imagine imagine VERB VB ROOT xxxx True False
spending spend VERB VBG xcomp xxxx True False
$ $ SYM $ nmod $ False False
3000 3000 NUM CD dobj dddd False False
for for ADP IN prep xxx True True
a a DET DT det x True True
single single ADJ JJ amod xxxx True False
bedroom bedroom NOUN NN compound xxxx True False
apartment apartment NOUN NN pobj xxxx True False
in in ADP IN prep xx True True
N.Y.C. n.y.c. PROPN NNP pobj X.X.X. False False

While this is a lot of info as is, and could be useful in all sorts of ways for a given NLP goal, let's simply visualize it using displaCy for a more concise view:

displacy.render(doc, style='dep', jupyter=True, options={'distance': 70})
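Of the attributes printed above, the shape column (X, xx, x'x, xxxx, dddd, X.X.X.) is probably the least self-explanatory. The sketch below is an approximation written for this post, not spaCy's source code, and the name approximate_shape is made up. It assumes the rule is: uppercase letters become X, lowercase letters become x, digits become d, other characters are kept, and runs of the same symbol are capped at four.

```python
def approximate_shape(text: str, max_run: int = 4) -> str:
    """Approximate a word-shape feature (hypothetical helper, not spaCy's API)."""
    shape = []
    last = ""   # previous shape symbol
    run = 0     # length of the current run of identical symbols
    for ch in text:
        if ch.isalpha():
            symbol = "X" if ch.isupper() else "x"
        elif ch.isdigit():
            symbol = "d"
        else:
            symbol = ch  # punctuation and symbols pass through unchanged
        run = run + 1 if symbol == last else 1
        last = symbol
        if run <= max_run:  # drop symbols beyond the cap
            shape.append(symbol)
    return "".join(shape)


for word in ["I", "n't", "imagine", "3000", "N.Y.C."]:
    print(word, approximate_shape(word))
```

Under those assumptions the sketch reproduces the shapes in the output above, which is why "imagine" and "spending" both collapse to xxxx: the feature captures orthographic pattern, not length.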

Named Entity Recognition

The Doc object already holds the recognized named entities as well. Access them with:

# Print out named entities
for ent in doc.ents:
    print(ent.text, ent.start_char, ent.end_char, ent.label_)

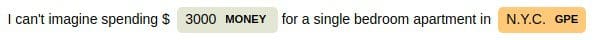

3000 26 30 MONEY
N.Y.C. 65 71 GPE

As can be determined from the code above, first the named entity is output, followed by its starting character index in the document, then its ending character index, and finally its entity type (label).
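Those character indices can be checked against the original string with ordinary slicing. A minimal sketch in plain Python follows, with the two entity rows copied by hand from the output above rather than recomputed. Note that the ending index is exclusive, just like a Python slice.

```python
sample = "I can't imagine spending $3000 for a single bedroom apartment in N.Y.C."

# (text, start_char, end_char, label), copied from the printed output above.
entities = [
    ("3000", 26, 30, "MONEY"),
    ("N.Y.C.", 65, 71, "GPE"),
]

for text, start_char, end_char, label in entities:
    # Slicing the source with the reported offsets recovers the entity text.
    recovered = sample[start_char:end_char]
    print(label, repr(recovered), recovered == text)
```

This is handy when entity spans need to be highlighted in, or aligned with, the raw text later on, since no re-tokenization is needed.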

displaCy's visualization is handy again, here:

displacy.render(doc, style='ent', jupyter=True)

I reiterate: scroll back over this post and look at how little code was required to accomplish the tasks we undertook.

This only scratches the surface of what we may want to accomplish with NLP or a tool as powerful as spaCy. As should be obvious, NLP preprocessing tasks, as well as those of other data forms (along with text and string manipulation), can often be accomplished with a variety of strategies, tools, and libraries. In my view, spaCy excels due to its ease of use and API simplicity. I encourage anyone who has been following these NLP-related posts I have been writing to check spaCy out if they are serious about natural language processing.

To gain more of an introduction to spaCy, I recommend these resources:

- spaCy 101: Everything you need to know

- Language Processing Pipelines

- Increasing data science productivity; founders of spaCy & Prodigy (SF Machine Learning meetup talk)

- Matthew Honnibal - Designing spaCy: Industrial-strength NLP (PyData Berlin 2016 talk)

Related:

- Text Data Preprocessing: A Walkthrough in Python

- A Framework for Approaching Textual Data Science Tasks

- A General Approach to Preprocessing Text Data