Reinforcement Learning: The Business Use Case, Part 2

By Aishwarya Srinivasan, Deep Learning Researcher

In my previous post, I focused on the computational and mathematical perspective of reinforcement learning and on the challenges we face when applying the algorithm to business use cases.

In this post, I will explore the implementation of reinforcement learning in trading. The financial industry has been exploring applications of artificial intelligence and machine learning for its use cases, but the monetary risk has prompted reluctance. Traditional algorithmic trading has evolved in recent years, and high-performance computing systems now automate the tasks, but traders still build the policies that govern choices to buy and sell. An algorithmic model for buying stocks based on a list of valuation and growth metric conditions might define a “buy” or “sell” signal that is in turn triggered by specific rules the trader has defined.

For example, an algorithmic approach might be as simple as buying the S&P index whenever it closes above the high of the past 30 days and liquidating the position whenever it closes below the low of the past 30 days. Such rules could be trend-following, counter-trend, or pattern-based in nature. Take a chart pattern such as head-and-shoulders: different technical analysts would inevitably define both the pattern and its confirmation conditions differently. For this approach to be systematic, the trader would have to specify precise mathematical conditions that unambiguously define whether or not a head-and-shoulders pattern has formed, as well as precise conditions that define a confirmation of the pattern.
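The 30-day breakout rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production strategy: the function name `breakout_signals` and its parameters are hypothetical, and the sketch assumes a plain list of daily closing prices, using the highest and lowest close in the lookback window as a stand-in for the period's high and low.

```python
from typing import List


def breakout_signals(closes: List[float], window: int = 30) -> List[str]:
    """Return one signal per day: 'buy', 'sell', or 'hold'.

    Assumption: `closes` holds daily closing prices, oldest first.
    """
    signals = []
    in_position = False
    for i, close in enumerate(closes):
        if i < window:
            # Not enough history to evaluate the rule yet.
            signals.append("hold")
            continue
        past = closes[i - window:i]  # previous `window` closes, excluding today
        if not in_position and close > max(past):
            signals.append("buy")    # close broke above the recent high
            in_position = True
        elif in_position and close < min(past):
            signals.append("sell")   # close broke below the recent low
            in_position = False
        else:
            signals.append("hold")
    return signals


# Tiny example with a 3-day window instead of 30 days.
print(breakout_signals([1, 2, 3, 2, 5, 1], window=3))
```

Every threshold here (the window length, the use of closes) is a choice the trader makes by hand, which is exactly the kind of human-built policy the paragraph describes.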

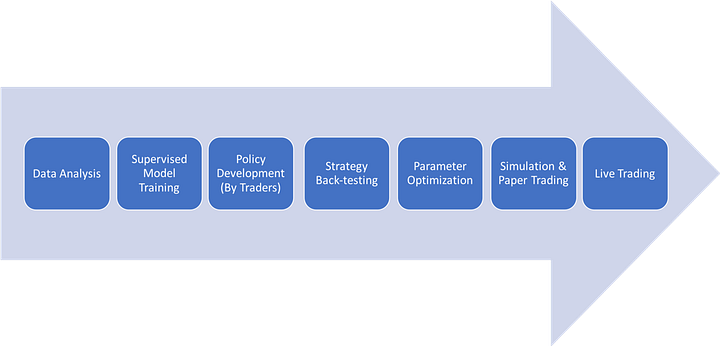

For an example of advanced machine learning in today's financial markets, we can look to the launch in October 2017 of AI-based Exchange Traded Funds (ETFs) from EquBot. EquBot automates these ETFs to compile market information from thousands of US companies, over a million market signals, quarterly news articles, and social media postings. A given ETF might select 30 to 70 companies with high opportunities for market appreciation, and it continues to learn with every trade. Another well-known market player, Horizons, launched a similar Active AI Global ETF, which it developed using supervised machine learning that includes policy building from traders. With a supervised learning approach, the human traders help to choose thresholds, account for latencies, estimate fees, and so on.

Fig 1: Pipeline for trading using supervised learning

Of course, if it’s going to be fully automated, an AI-driven trading model has to do more than predict prices. It needs a rule-based policy that takes the stock price as input and then decides whether to buy, sell, or hold.
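To make the idea of a rule-based policy on top of a price prediction concrete, here is a small sketch. It assumes a supervised model has already produced `predicted_price`; the function name `threshold_policy`, the default `threshold`, and the flat `fee_rate` are all hypothetical values of the kind a human trader would tune.

```python
def threshold_policy(current_price: float,
                     predicted_price: float,
                     threshold: float = 0.01,
                     fee_rate: float = 0.001) -> str:
    """Map a price prediction to 'buy', 'sell', or 'hold'.

    Assumptions: `threshold` is the minimum expected return that justifies
    acting, and `fee_rate` is a flat proportional trading fee.
    """
    if current_price <= 0:
        raise ValueError("current_price must be positive")
    expected_return = (predicted_price - current_price) / current_price
    if expected_return - fee_rate > threshold:
        return "buy"    # predicted gain clears the threshold after fees
    if expected_return + fee_rate < -threshold:
        return "sell"   # predicted loss exceeds the threshold after fees
    return "hold"


print(threshold_policy(100.0, 102.0))  # predicted +2%
print(threshold_policy(100.0, 100.5))  # predicted +0.5%
```

Note that the prediction and the decision are separate pieces here: the model is trained to predict prices, while the thresholds that turn predictions into trades are set by hand.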

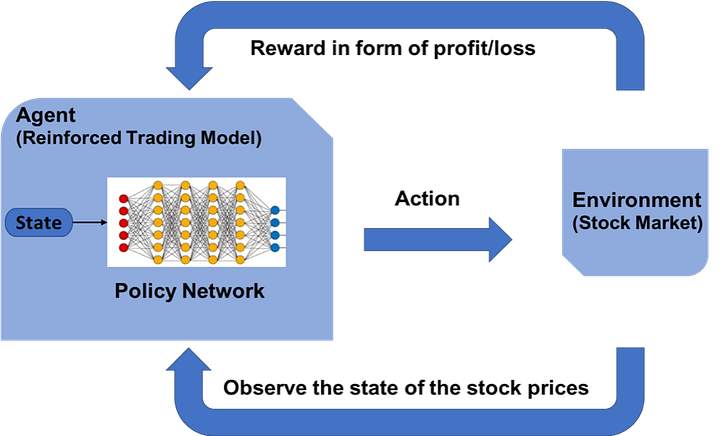

In June 2018, Morgan Stanley appointed Michael Kearns, a computer scientist from the University of Pennsylvania, in an effort to expand the use of artificial intelligence. In an interview with Bloomberg, Dr. Kearns pointed out that, “While standard machine learning models make predictions on prices, they don’t specify the best time to act, the optimal size of a trade or its impact on the market.” He added, “With reinforcement learning, you are learning to make predictions that account for what effects your actions have on the state of the market.”

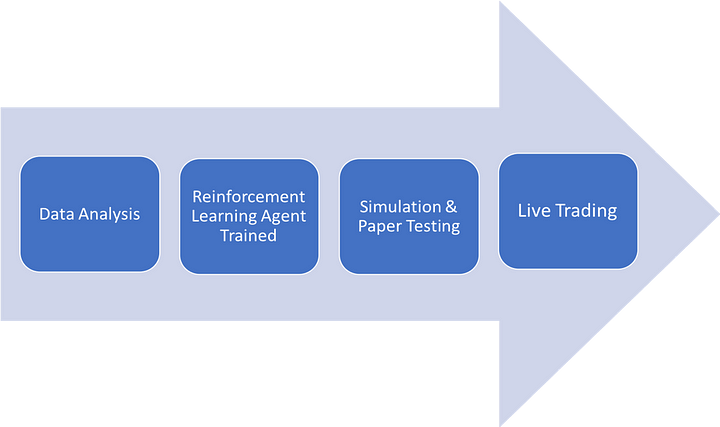

Reinforcement learning allows for end-to-end optimization that maximizes the reward. Crucially, the RL agent itself adjusts its parameters in order to zero in on the optimal result. For instance, we can impose a large negative reward whenever there’s a drawdown of more than 30%, which forces the agent to consider a different policy. We can also build simulations to improve responses in critical situations. For example, we can simulate latencies within the reinforcement learning environment so that they generate a negative reward for the agent. That negative reward in turn compels the agent to learn work-arounds for latencies. Similar strategies allow the agent to auto-tune over time, continually making it more powerful and adaptable.
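The drawdown penalty mentioned above could be expressed as a shaped reward along the following lines. This is only a sketch under stated assumptions: the names `current_drawdown` and `shaped_reward`, the per-step profit-and-loss base reward, and the `penalty` size are illustrative choices, not the reward function of any specific system.

```python
from typing import List


def current_drawdown(equity_curve: List[float]) -> float:
    """Fractional drop of the latest portfolio value from its running peak."""
    peak = max(equity_curve)
    return (peak - equity_curve[-1]) / peak


def shaped_reward(equity_curve: List[float],
                  drawdown_limit: float = 0.30,
                  penalty: float = 100.0) -> float:
    """Step profit or loss, minus a large penalty while drawdown exceeds the limit.

    Assumptions: `equity_curve` lists positive portfolio values, oldest first,
    and `penalty` is a hypothetical constant chosen to dominate ordinary P&L.
    """
    if len(equity_curve) < 2:
        raise ValueError("need at least two portfolio values")
    step_pnl = equity_curve[-1] - equity_curve[-2]
    if current_drawdown(equity_curve) > drawdown_limit:
        return step_pnl - penalty
    return step_pnl


print(shaped_reward([100.0, 110.0, 105.0]))        # small dip, no penalty
print(shaped_reward([100.0, 110.0, 120.0, 80.0]))  # drawdown above 30%
```

A simulated latency cost could be handled the same way, by subtracting a term from the reward whenever an order is filled late in the simulated environment.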

Fig 2: Pipeline for trading using a reinforcement learning model

Here at IBM, we’ve built a sophisticated system on the Data Science Experience (DSX) platform that makes financial trades using the power of reinforcement learning. The model trains on historical stock price data, taking stochastic actions at each time step, and we calculate the reward function based on the profit or loss for each trade.
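The post does not say which algorithm the IBM system uses, so the following is a generic stand-in: a toy tabular Q-learning loop with epsilon-greedy (stochastic) action selection, trained on a price history with profit or loss as the reward. The state encoding, the `train` function, and all hyperparameters are assumptions made purely for illustration.

```python
import random
from typing import Dict, List, Tuple

ACTIONS = ("hold", "buy", "sell")
State = Tuple[int, int]  # (last price move: -1, 0 or 1; position: 0 flat, 1 long)


def price_move(prices: List[float], t: int) -> int:
    """Sign of the price change from day t-1 to day t."""
    diff = prices[t] - prices[t - 1]
    return (diff > 0) - (diff < 0)


def train(prices: List[float], episodes: int = 200, alpha: float = 0.1,
          gamma: float = 0.95, epsilon: float = 0.1,
          seed: int = 0) -> Dict[State, List[float]]:
    """Learn a Q-table over the historical series (hypothetical toy setup)."""
    rng = random.Random(seed)
    q: Dict[State, List[float]] = {}
    for _ in range(episodes):
        position = 0  # start each pass over the history with no holdings
        for t in range(1, len(prices) - 1):
            state = (price_move(prices, t), position)
            values = q.setdefault(state, [0.0, 0.0, 0.0])
            # Stochastic action: explore with probability epsilon, else act greedily.
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))
            else:
                action = values.index(max(values))
            if ACTIONS[action] == "buy":
                position = 1
            elif ACTIONS[action] == "sell":
                position = 0
            # Reward: profit or loss over the next step for the position held.
            reward = position * (prices[t + 1] - prices[t])
            next_state = (price_move(prices, t + 1), position)
            next_values = q.setdefault(next_state, [0.0, 0.0, 0.0])
            # Standard Q-learning update.
            values[action] += alpha * (reward + gamma * max(next_values) - values[action])
    return q


rising = [float(p) for p in range(1, 50)]
q_table = train(rising)
flat_after_up_move = q_table[(1, 0)]
print(ACTIONS[flat_after_up_move.index(max(flat_after_up_move))])
```

On a steadily rising series like this one, the learned greedy action when flat is to buy, which is the behavior one would hope for; real price data would need a far richer state and a proper train/test split.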

“IBM Data Science Experience is an enterprise data science platform that provides teams with the broadest set of open source and data science tools for any skill set, the flexibility to build and deploy anywhere in a multicloud environment, and the ability to operationalize data science results faster.”

The following diagrams weave together the reinforcement learning methodology with the use case of financial trading.

Fig 3: Reinforcement learning trading model

We measure the performance of the reinforcement learning trading model with the alpha metric (the active return on an investment), and also evaluate the performance of the investment against a market index representing overall market movement. Finally, we assess the model against a simple Buy-&-Hold strategy and against ARIMA-GARCH. We found that the model moderated its behavior in a much more refined way according to market movements, and could even capture head-and-shoulders patterns, which are non-trivial trends that can signal reversals in the market.
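As a rough illustration of the evaluation above, active return can be computed as the strategy's total return minus the benchmark's total return over the same period. This is a simplified sketch: the function names are hypothetical, the numbers are made up for the example, and a risk-adjusted alpha (for instance, one estimated by regression against the index) would require more than this subtraction.

```python
from typing import List


def total_return(values: List[float]) -> float:
    """Fractional return from the first to the last value of a series."""
    return values[-1] / values[0] - 1.0


def active_return(strategy_values: List[float],
                  benchmark_values: List[float]) -> float:
    """Strategy return in excess of the benchmark over the same period."""
    return total_return(strategy_values) - total_return(benchmark_values)


# Illustrative numbers only, not results from the model described in this post.
strategy = [100.0, 104.0, 120.0]   # portfolio value under the trading model
index = [100.0, 103.0, 110.0]      # market index, i.e. a Buy-&-Hold benchmark
print(round(active_return(strategy, index), 4))
```

Passing the traded asset's own price series as the benchmark gives the comparison against a Buy-&-Hold strategy.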

Reinforcement learning might not apply in every business use case, but its ability to capture the subtleties of financial trading certainly demonstrates its sophistication, power, and greater potential.

Stay tuned as we test the power of reinforcement learning across more business use cases!

Bio: Aishwarya Srinivasan: MS Data Science - Columbia University || IBM - Data Science Elite || Unicorn in Data Science || Scikit-Learn Contributor || Deep Learning Researcher

Original. Reposted with permission.
