Explaining Reinforcement Learning: Active vs Passive

We examine the required elements to solve an RL problem, compare passive and active reinforcement learning, and review common active and passive RL techniques.

This post assumes that you are familiar with the basics of Reinforcement Learning(RL) and Markov Decision Processes, if not please refer to this previous post first.

In this post we would look into,

- What are required elements to solve an RL problem?

- What is meant by passive and active reinforcement learning and how do we compare the two?

- What are some common active and passive RL techniques?

What are the required elements to solve an RL problem?

Let’s consider a problem where the agent can be in various states and can choose an action from a set of actions. Such type of problems are called Sequential Decision Problems. An MDP captures a fully observable, non-deterministic environment with Markovian Transition Model and additive rewards in which such an agent acts. The solution to an MDP is an optimal policy which refers to the choice of action for every state that maximizes overall cumulative reward. Thus, the transition model that represents an agent’s environment(when the environment is known) and the optimal policy which decides what action the agent needs to perform in each state are required elements for training the agent learn a specific behavior.

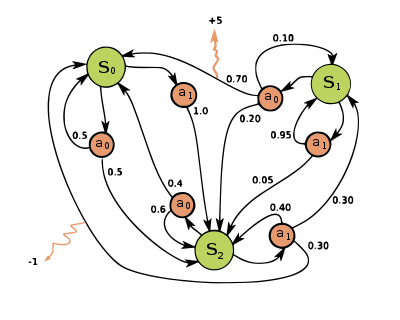

Fig. 1: Markov Decision Process (source: https://en.wikipedia.org/wiki/Markov_decision_process)

What is meant by passive and active reinforcement learning and how do we compare the two?

Both active and passive reinforcement learning are types of RL. In case of passive RL, the agent’s policy is fixed which means that it is told what to do. In contrast to this, in active RL, an agent needs to decide what to do as there’s no fixed policy that it can act on. Therefore, the goal of a passive RL agent is to execute a fixed policy (sequence of actions) and evaluate it while that of an active RL agent is to act and learn an optimal policy.

What are some common active and passive RL techniques?

Passive Learning

As the goal of the agent is to evaluate how good an optimal policy is, the agent needs to learn the expected utility Uπ(s) for each state s. This can be done in three ways.

Example: AI Playing Supermario using Deep RL

- Direct Utility Estimation:

In this method, the agent executes a sequence of trials or runs (sequences of states-actions transitions that continue until the agent reaches the terminal state). Each trial gives a sample value and the agent estimates the utility based on the samples values. Can be calculated as running averages of sample values. The main drawback is that this method makes a wrong assumption that state utilities are independent while in reality they are Markovian. Also, it is slow to converge.

Suppose we have a 4x3 grid as the environment in which the agent can move either Left, Right, Up or Down(set of available actions). An example of a run,

Total reward starting at (1,1) = 0.72

2. Adaptive Dynamic Programming(ADP)

ADP is a smarter method than Direct Utility Estimation as it runs trials to learn the model of the environment by estimating the utility of a state as a sum of reward for being in that state and the expected discounted reward of being in the next state.

Where R(s) = reward for being in state s, P(s'|s, π(s)) = transition model, γ = discount factor and Uπ(s) = utility of being in state s'.

It can be solved using value-iteration algorithm. The algorithm converges fast but can become quite costly to compute for large state spaces. ADP is a model based approach and requires the transition model of the environment. A model-free approach is Temporal Difference Learning.

- Temporal Difference Learning (TD)

TD learning does not require the agent to learn the transition model. The update occurs between successive states and agent only updates states that are directly affected.

Where α = learning rate which determines the convergence to true utilities.

While ADP adjusts the utility of s with all its successor states, TD learning adjusts it with that of a single successor state s'. TD is slower in convergence but much simpler in terms of computation.

Fig 2: Robots learning to play a game of chess (source: https://robotnor.no/expertise/fields-of-competence/robot-learning/)

Active Learning

- ADP with exploration function

As the goal of an active agent is to learn an optimal policy, the agent needs to learn the expected utility of each state and update its policy.

Can be done using a passive ADP agent and then using value or policy iteration it can learn optimal actions. But this approach results into a greedy agent.

Hence, we use an approach that gives higher weights to unexplored actions and lower weights to actions with lower utilities.

Where f(u,n) is the exploration function that increases with expected value u and decreases with number of tries n

R+ is an optimisic reward and Ne is the number of times we want an agent to be forced to pick an action in every state. The exploration function converts a passive agent into an active one.

- Q-Learning

Q-learning is a TD learning method which does not require the agent to learn the transitional model, instead learns Q-value functions Q(s, a) .

Q-values can be updated using the following equation,

Next action can be selected using the following policy,

Again this is simpler to compute but slower than ADP.

Table 1: Comparison of active and passive learning methods

| Fixed Policy (Active) | Policy not fixed (Passive) | |

|---|---|---|

|

Model-free (real world) |

Temporal Difference Learning (TD) | Q-learning |

|

Model-based (simulation) |

Adaptive Dynamic Programming(ADP) | ADP with proper exploration function |

I’d recommend the following resources to gain a deeper understanding of these concepts,

- Reinforcement Learning-An Introduction - By Richard Sutton and Andrew Barto

- Artificial Intelligence: A Modern Approach - Stuart Russell and Peter Norvig

- Teaching material - by David Silver

Related:

- 5 Things You Need to Know about Reinforcement Learning

- Resurgence of AI During 1983-2010

- Exclusive: Interview with Rich Sutton, the Father of Reinforcement Learning

- When reinforcement learning should not be used?