7 AI Use Cases Transforming Live Sports Production and Distribution

Here are 7 powerful AI-led use cases, for both linear television and OTT apps, that are transforming the live sports production landscape.

By Adrish Bera, Prime Focus Technologies

Today, advanced Artificial Intelligence (AI) and Machine Learning (ML) solutions can identify specific game objects, constructs, players, events and actions. This aids near real-time content discovery and helps sports producers create highlight packages automatically, even while the game is in progress. Sports fans are always looking for new ways to engage with sports that bring them closer to the real-time action, and modern AI and ML technologies can provide the immersive experiences those fans demand.

The 7 use cases are:

1. Cataloguing the sports archive: discovery and search as a storytelling aid

Using AI-led automatic content recognition, the game content is tagged segment by segment. This makes the live match, as well as the entire sports archive, searchable by the game producers for every possible action and moment of drama.

2. Auto-highlight package creation

Once the content is tagged automatically and exhaustively, machines can cut highlight packages based on pre-defined and auto-learnable rules.

3. Interactive TV experience

Broadcasters can supply set-top box providers or cable operators with dynamic match content, compelling stories and highlight clips during the match. These clips can be overlaid on top of the live content as extras, accessible through an “Active TV” type button on the remote control.

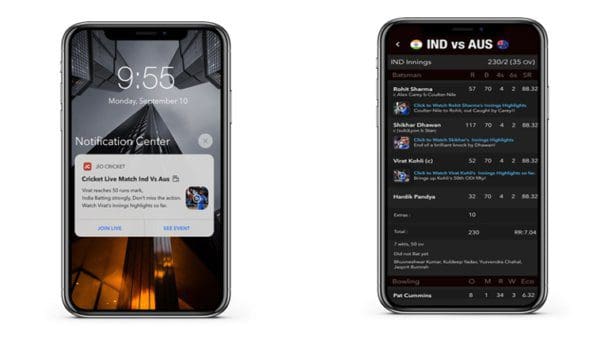

4. Video notifications for OTT Apps

Users are automatically notified about key events of a match (such as a four, a six or a wicket in cricket, or a goal in soccer) as soon as the event occurs. They can play these videos with a single click.

5. Video Scorecards on OTT apps

AI-generated video clips can be added to Scorecards or the commentary text feed. This makes boring, textual scorecards and commentary come alive.

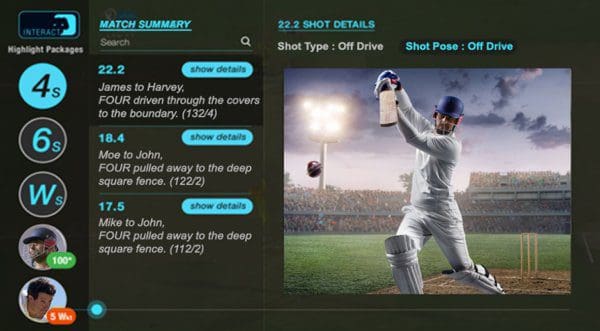

6. Immersive OTT experiences

The game data extracted using AI engines can be overlaid on top of the video to provide an “Amazon X-Ray” type experience. Users can explore different facets of the game in greater detail, without having to compromise the live viewing experience.

7. Personalised playlists & search for OTT apps

Users can enjoy auto-curated highlight packages or playlists as the game progresses. They can use free-text search to look for match events, and can also generate their own personalised playlists.

How the use cases are delivered

To deliver the experiences listed above, we need to identify and tag sporting events exhaustively, with high accuracy and speed. The more nuanced the tags, the richer the downstream use cases. Today, sports producers try to achieve this by deploying an army of operators who watch the game in real time and furiously tag key attributes minute by minute. A number of editors then search these tags to assemble the highlight clips. This operation does not scale, so viewers end up seeing only a limited variety of highlights, sometimes hours after the action has taken place.

AI and ML change the landscape drastically by delivering the required accuracy, variety, speed and scale. AI can detect actions quickly and, within seconds, create an exhaustive set of highlight packages that show the game from hundreds of different angles.

Machine Learning for Sports

Machine Learning is based on the premise that a machine needs to be supplied with large volumes of training data to build a classification algorithm. Once the algorithm is built, it can predict results on a new set of data (test data). By this logic, if we feed the machine a lot of footage of a particular sport, it should begin to understand the sport’s actions. The reality, however, is quite different, as each sport is complex and unique. Even an adult human unfamiliar with a game like cricket would not be able to decipher it if he/she were simply left with thousands of hours of match footage.

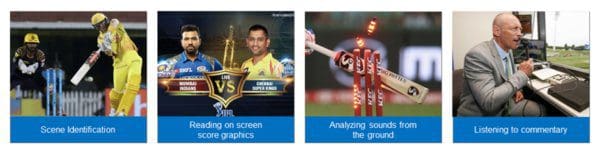

We therefore need to codify basic game logic and rules, and apply ML within a focused context, for the machine to mimic human cognition. Let’s take the example of cricket to understand this better. While watching a cricket match, we decipher and appreciate the game through different elements in the match content. These are: 1) our knowledge of game actions like bowling, fielding, umpire signals etc.; 2) on-screen graphics telling us the score highlights and the current state of the game; 3) sounds from the stadium like ball hitting bat, applause, appeals etc.; and 4) experts’ commentary.

To help machines understand the game like a human, we need to build a model based on these varied inputs. Typically, we deploy different kinds of neural networks as well as Computer Vision techniques to decipher various aspects of the four elements listed above. A wide variety of classification and cognition engines are used in tandem to “discern” the game from different perspectives.

To train these engines, we need to sift through hundreds of hours of match footage and annotate different frames, objects, actions etc. to generate training data for ML. These cognition engines are then stitched together using game logic and an understanding of sports production in order to catalogue the game, segment by segment. For cricket, for example, we deploy more than 11 such engines to extract 25+ attributes per ball, including batsman, non-striker, bowler, runs scored, type of shot, fours, sixes, replays, crowd excitement levels, celebrations, wickets, bowling type, ball synopsis etc.
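To make the idea of stitching engine outputs into one per-ball record more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the field names, the engine names and the "last engine wins" merge rule are hypothetical and are not taken from any actual production schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BallRecord:
    """One catalogued delivery; field names are illustrative only."""
    over: int
    ball: int
    batsman: Optional[str] = None
    bowler: Optional[str] = None
    runs: Optional[int] = None
    shot_type: Optional[str] = None
    is_wicket: bool = False
    crowd_excitement: float = 0.0  # assumed scale: 0.0 (quiet) to 1.0 (roar)
    sources: dict = field(default_factory=dict)  # attribute -> engine that set it


def merge_engine_outputs(over, ball, engine_outputs):
    """Fold per-engine attribute dicts into one record.

    engine_outputs maps a (hypothetical) engine name to the attributes it
    extracted. Later engines overwrite earlier ones, a deliberately simple
    stand-in for real game-logic conflict resolution.
    """
    record = BallRecord(over=over, ball=ball)
    for engine, attributes in engine_outputs.items():
        for name, value in attributes.items():
            if not hasattr(record, name) or name == "sources":
                raise ValueError(f"unknown attribute {name!r} from {engine}")
            setattr(record, name, value)
            record.sources[name] = engine
    return record


record = merge_engine_outputs(
    over=12,
    ball=3,
    engine_outputs={
        "scoreboard_ocr": {"runs": 4, "batsman": "Batter A", "bowler": "Bowler B"},
        "action_recognition": {"shot_type": "cover drive"},
        "audio_analysis": {"crowd_excitement": 0.82},
    },
)
print(record)
```

Keeping track of which engine supplied each attribute (the `sources` field) is one plausible way to support later quality checks, since a reviewer can see where a doubtful tag came from.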

Search and Discovery

To make an archive, or the footage of a single match, discoverable, AI-generated metadata should be part of a search index built on an advanced search engine like Elasticsearch. Techniques that help deliver sharper search results include Natural Language Processing (NLP), Key Entity Recognition, Stemming, Thesaurus, Fuzzy Logic and Duplication Removal.
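As a rough illustration of how two of these techniques (stemming and fuzzy matching) sharpen search, the following sketch builds a tiny in-memory index with the Python standard library. It is not how a production engine works internally; the `ClipIndex` class, the crude suffix-stripping stemmer and the clip descriptions are all hypothetical.

```python
import difflib
import re
from collections import defaultdict


def stem(token):
    """Very rough suffix stripping; a real system would use a proper stemmer."""
    for suffix in ("ing", "es", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def tokenize(text):
    """Lowercase, split on non-alphanumerics, then stem each token."""
    return [stem(t) for t in re.findall(r"[a-z0-9]+", text.lower())]


class ClipIndex:
    """Toy inverted index: stemmed term -> set of clip ids."""

    def __init__(self):
        self.postings = defaultdict(set)

    def add(self, clip_id, description):
        for term in tokenize(description):
            self.postings[term].add(clip_id)

    def search(self, query, cutoff=0.8):
        """Return ids of clips matching every query term (AND semantics)."""
        result = None
        for term in tokenize(query):
            # Fuzzy step: tolerate small typos by matching close index terms.
            close = difflib.get_close_matches(
                term, list(self.postings), n=3, cutoff=cutoff
            )
            matches = set()
            for candidate in close:
                matches |= self.postings[candidate]
            result = matches if result is None else result & matches
        return sorted(result or [])


index = ClipIndex()
index.add("clip-001", "Batter A hits a six over long on")
index.add("clip-002", "Bowler B takes a wicket, batter A bowled")
index.add("clip-003", "Batter C sixes off consecutive balls")

print(index.search("sixes"))         # stemming maps "sixes" to "six"
print(index.search("wickt batter"))  # fuzzy matching absorbs the typo
```

In this toy version, stemming lets a query for "sixes" find clips tagged "six", and the similarity cutoff controls how forgiving the index is towards misspelt queries.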

Highlight Creation

Once the AI engine has catalogued a game thoroughly, the next challenge is to create instant highlight packages that capture the game’s key events and drama. For spectators, excitement levels typically peak during the high points of a game. In soccer, for instance, this could be when a goal is scored or missed. Visual cues like a referee/umpire signal or a text overlay on screen also denote key events. A highlight creation engine can tap these high points and attributes to create a simple highlight package.
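A simple highlight package of this kind can be sketched as a filter over tagged segments. The snippet below is only an assumption-laden illustration: the segment keys, the event tag names, the 0.0 to 1.0 excitement scale and the threshold value are all hypothetical.

```python
def select_highlights(segments, excitement_threshold=0.7, max_clips=5):
    """Pick segments that contain a key event or a loud crowd reaction.

    Each segment is a dict with hypothetical keys: "id", "start", "end"
    (seconds), "excitement" (0.0 to 1.0) and "events" (list of tag strings).
    """
    key_events = {"goal", "missed_goal", "referee_signal"}
    candidates = [
        s for s in segments
        if s["excitement"] >= excitement_threshold
        or key_events & set(s["events"])
    ]
    # Keep the most exciting clips, then restore match order for playback.
    top = sorted(candidates, key=lambda s: s["excitement"], reverse=True)
    return sorted(top[:max_clips], key=lambda s: s["start"])


segments = [
    {"id": "s1", "start": 0, "end": 20, "excitement": 0.2, "events": []},
    {"id": "s2", "start": 20, "end": 45, "excitement": 0.9, "events": ["goal"]},
    {"id": "s3", "start": 45, "end": 60, "excitement": 0.4,
     "events": ["referee_signal"]},
    {"id": "s4", "start": 60, "end": 90, "excitement": 0.75, "events": []},
]

highlights = select_highlights(segments, max_clips=2)
print([s["id"] for s in highlights])
```

Ranking by excitement and then re-sorting by start time keeps the package short while preserving the order in which things happened on the field.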

But creating compelling highlights involves much more. For instance, a good cricket match highlight package is not just a combination of fours, sixes and wickets. A human editor artfully cuts a package that captures suspense, comedy and drama, and tells a compelling story. If a batsman is beaten a couple of times before getting out, the editor shows all three balls, not just the last one. Other elements like tournament montages, the pre-match ceremony, player entry, the coin toss etc. also need to be learned and included in highlights.

AI-generated highlights are created using learnable business rules that are built from past match highlight productions. These rules need to be improved over time as the engine learns what works and what does not. They are based on comprehensive attributes/tags for each match segment. Much like a human editor, machines can also bring in AI-based audio smoothing, scene transitions between two segments, smooth commentary cuts etc., so that human editors need to put in only minimal effort for Quality Check (QC) and finishing.
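One such storytelling rule, showing the balls on which a batsman was beaten before the dismissal, could be expressed roughly as follows. This is a hand-written sketch rather than a learned rule, and the ball keys (`batsman`, `is_wicket`, `is_boundary`, `beaten`), the `make_ball` helper and the look-back limits are hypothetical.

```python
def make_ball(batsman, wicket=False, boundary=False, beaten=False):
    """Build one tagged delivery (illustrative keys only)."""
    return {"batsman": batsman, "is_wicket": wicket,
            "is_boundary": boundary, "beaten": beaten}


def build_highlight_indices(balls, max_lead_up=2, lookback=6):
    """Return indices of the balls to include, in match order.

    Boundaries and wickets are always included. For each wicket, up to
    `max_lead_up` of the previous `lookback` balls are added if the same
    batsman was beaten on them, so the dismissal has a build-up.
    """
    selected = set()
    for i, ball in enumerate(balls):
        if ball["is_boundary"]:
            selected.add(i)
        if ball["is_wicket"]:
            selected.add(i)
            lead_up = 0
            # Walk backwards through the recent deliveries only.
            for j in range(i - 1, max(i - lookback, 0) - 1, -1):
                if lead_up == max_lead_up:
                    break
                earlier = balls[j]
                if earlier["batsman"] == ball["batsman"] and earlier["beaten"]:
                    selected.add(j)
                    lead_up += 1
    return sorted(selected)


over = [
    make_ball("Batter A", boundary=True),  # 0: four
    make_ball("Batter A"),                 # 1: dot ball
    make_ball("Batter A", beaten=True),    # 2: play and miss
    make_ball("Batter B"),                 # 3: single, strike rotated back
    make_ball("Batter A", beaten=True),    # 4: play and miss
    make_ball("Batter A", wicket=True),    # 5: out
]

print(build_highlight_indices(over))
```

In a learned system, parameters such as `max_lead_up` and `lookback` would be the kind of values tuned from past highlight productions rather than fixed by hand.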

Technology and Logistics Challenges

No two sports are alike. Given the complexities of each sport, producers cannot use off-the-shelf, third-party video recognition engines from the likes of Google, Microsoft and IBM Watson to tag content, discover meaningful clips or create highlights. One needs to build and train custom models to tag sports content effectively. Specific models also need to be built for specific sports, incorporating game logic, expert methods and historical learning. This is a painstaking process, but any attempt to create a generic sports model is likely to fail.

Each sport is also produced differently. For example, in Soccer a movement towards a goal is shown as a combination of long and close-up shots taken from different camera angles. For cricket or baseball, the camera tries to keep the ball in the middle of the frame while the batsman is hitting. We need to train our engines with specific knowledge of these nuances and production techniques.

Today, producers need to swiftly publish highlight clips to platforms like Facebook, Twitter and YouTube, for which they need game tagging and highlights generation to take place quickly. AI engines with both on-premise and cloud hosting capabilities can help achieve the necessary speed.

The Future of Sports Production with AI is Here and Now

Audiences today expect to engage with the game ever more intimately and in personalised ways. The application and adoption of AI/ML is the only way to meet these expectations, and there is no shortcut. Custom AI models specific to each sport need to be deployed at scale and fine-tuned over several months to achieve the expected results. As in all other fields, AI will ultimately help automate routine tasks, enabling people to focus their intellect on far more creative pursuits.

Bio: Adrish Bera is the SVP - Online Video Platform, Artificial Intelligence and Machine Learning at Prime Focus Technologies (PFT), building cutting-edge video cognition capabilities through AI and ML for the media and entertainment vertical to bring automation and efficiency at scale. Prior to PFT, Adrish was the CEO and a co-founder of Apptarix, creator of TeleTango, a social OTT platform. Adrish is an industry veteran with 20 years of experience in the mobile software and telecom industries, covering end-to-end product management, product development and software engineering. He is also a technology blogger with broad interests in technology marketing, smartphones, emerging markets, social media and digital advertising.
