Top 10 Must-Know Machine Learning Algorithms for Data Scientists – Part 1

New to data science? Interested in the must-know machine learning algorithms in the field? Check out the first part of our list and introductory descriptions of the top 10 algorithms for data scientists to know.

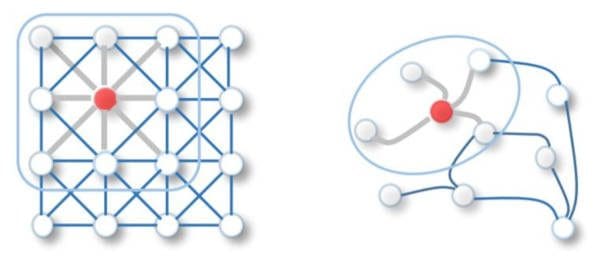

Image source: A Comprehensive Survey on Graph Neural Networks

Wading through the vast array of information for data science newcomers on machine learning algorithms can be a difficult and time-consuming process. Figuring out which algorithms are widely used and which are simply novel or interesting is not just an academic exercise; determining where to concentrate your time and focus during the early days of study can determine the difference between getting your career off to a quick start and experiencing an extended ground delay.

How, exactly, does one discriminate between immediately useful algorithms worthy of attention and, well, not so much? Determining how to come up with an objective list of machine learning algorithms of authority is inherently difficult, but it seems that going directly to practitioners for their feedback may be the optimal approach. Such a process presents a whole host of difficulties of its own, as could be easily imagined, and results of such surveys are few and far between.

KDnuggets, however, conducted such a survey within the last few years, asking respondents "Which Data Science / Machine Learning methods and algorithms did you use in 2018/2019 for a real-world application?" Of course, as alluded to above, such surveys are hindered by self-selection, lack of verifiability of participants, trust in the actual responses, etc., but this survey represents the most recent, most far-reaching, and ultimately the best source we have available to us.

And, so, this is the source we will use to identify the top 10 machine learning algorithms being used, and, as such, the top 10 must-know algorithms for data scientists. The first 5 of these top 10 must-know algorithms are introduced below, with a brief overview of what the algorithms are and how they work. We will followup with part 2 in the coming weeks.

Note the following:

- we have skipped over entries which do not map to machine learning algorithms (e.g. "visualization", "descriptive statistics", "text analytics")

- we have treated the entry "regression" separately as both "linear regression" and "logistic regression"

- we have swapped the entry "ensemble methods" for "bagging", a particular ensemble method, as a few other ensemble methods are also individually represented in this list

- we have skipped over any neural network entries, as these techniques combine architecture with a variety of different algorithms to achieve their goals, aspects which are beyond the scope of this discussion

1. Linear Regression

Regression is a time-tested manner for approximating relationships among a given collection of data, and the recipient of unhelpful naming via unfortunate circumstances.

Linear regression is a simple algebraic tool which attempts to find the “best” (straight, for the purposes of this discussion) line fitting 2 or more attributes, with one attribute (simple linear regression), or a combination of several (multiple linear regression), being used to predict another, the class attribute. A set of training instances is used to compute the linear model, with one attribute, or a set of attributes, being plotted against another. The model then attempts to identify where new instances would lie on the regression line, given a particular class attribute.

The relationship between predictor and response variables (x and y, respectively) can be expressed via the following equation, with which everyone reading this is undoubtedly familiar: .

m and b are the regression coefficients, and denote line slope and y-intercept, respectively. I suggest you check your elementary school algebra notes if you are having trouble recalling :)

The equation for multiple linear regression is generalized for n attributes is:

2. Decision Trees

In machine learning, decision trees have been used for decades as effective and easily understandable data classifiers (contrast that with the numerous blackbox classifiers in existence). Over the years, a number of decision tree algorithms have resulted from research, 3 of the most important, influential, and well-used being:

Iterative Dichotimiser 3 (ID3) - Ross Quinlan's precursor to the C4.5

- Iterative Dichotimiser 3 (ID3) - Ross Quinlan's precursor to the C4.5

- C4.5 - one of the most popular classifiers of all time, also from Quinlan

- CART - independently invented around the same time as C4.5, also still very popular

ID3, C4.5, and CART all adopt a top-down, recursive, divide-and-conquer approach to decision tree induction.

Over the years, C4.5 has become a benchmark against which the performance of newer classification algorithms are often measured. Quinlan’s original implementation contains proprietary code; however, various open-source versions have been coded over the years, including the (at one time very popular) Weka Machine Learning Toolkit's J48 decision tree algorithm.

The model- (or tree-) building aspect of decision tree classification algorithms are composed of 2 main tasks: tree induction and tree pruning. Tree induction is the task of taking a set of pre-classified instances as input, deciding which attributes are best to split on, splitting the dataset, and recursing on the resulting split datasets until all training instances are categorized. Tree pruning involves reducing the size of decision trees by eliminating branches which are redundant or non-essential to the classification process.

While building our tree, the goal is to split on the attributes which create the purest child nodes possible, which would keep to a minimum the number of splits that would need to be made in order to classify all instances in our dataset. This purity is generally measured by one of a number of different attribute selection measures.

There are 3 prominent attribute selection measures for decision tree induction, each paired with one of the 3 prominent decision tree classifiers.

- Information gain - used in the ID3 algorithm

- Gain ratio - used in the C4.5 algorithm

- Gini index - used in the CART algorithm

In ID3, purity is measured by the concept of information gain, based on the work of Claude Shannon, which relates to how much would need to be known about a previously-unseen instance in order for it to be properly classified. In practice, this is measured by comparing entropy, or the amount of information needed to classify a single instance of a current dataset partition, to the amount of information to classify a single instance if the current dataset partition were to be further partitioned on a given attribute.

One of the most important takeaways from this discussion should be that decision tree is a classification strategy as opposed to some single, well-defined classification algorithm. While we have had a brief look at 3 separate decision tree algorithm implementations, there are a variety of ways to configure different aspects of each. Indeed, any algorithm which seeks to classify data, and takes a top-down, recursive, divide-and-conquer approach to crafting a tree-based graph for subsequent instance classification, regardless of any other particulars (including attribution split selection methods and optional tree-pruning approach) would be considered a decision tree.

3. k-means Clustering

k-means is a simple, yet often effective, approach to clustering. Traditionally, k data points from a given dataset are randomly chosen as cluster centers, or centroids, and all training instances are plotted and added to the closest cluster. After all instances have been added to clusters, the centroids, representing the mean of the instances of each cluster are re-calculated, with these re-calculated centroids becoming the new centers of their respective clusters.

At this point, all cluster membership is reset, and all instances of the training set are re-plotted and re-added to their closest, possibly re-centered, cluster. This iterative process continues until there is no change to the centroids or their membership, and the clusters are considered settled.

Convergence is achieved once the re-calculated centroids match the previous iteration’s centroids, or are within some preset margin. The measure of distance is generally Euclidean in k-means, which, given 2 points in the form of (x, y), can be represented as:

Of technical note, especially in the era of parallel computing, iterative clustering in k-means is serial in nature; however, the distance calculations within an iteration need not be. Therefore, for sets of a significant size, distance calculations are a worthy target for parallelization in the k-means clustering algorithm.

Also, while we have just described a particular method of clustering using cluster mean values and Euclidean distance, it should not be difficult to imagine a variety of possible other methods using, say, cluster median values or any number of other distance metrics (cosine, Manhattan, Chebyshev, etc.)

4. Bagging

In a given scenario, it may prove more useful than not to to chain or group classifiers together, using the techniques of voting, weighting, or combination to pursue the most accurate classifier possible. Ensemble learners are classifiers which provide this functionality in a variety of ways. Bagging is an example of an ensemble learner.

Bagging operates by simple concept: build a number of models, observe the results of these models, and settle on the majority result. I recently had an issue with the rear axle assembly in my car: I wasn't sold on the diagnosis of the dealership, and so I took it to 2 other garages, both of which agreed the issue was something different than the dealership suggested. Voila. Bagging in action.

I only visited 3 garages in my example, but you could imagine that accuracy would likely increase if I had visited tens or hundreds of garages, especially if my car's problem was one of a more complex nature. This holds true for bagging, and the bagged classifier often is significantly more accurate than single constituent classifiers. Also note that the type of constituent classifier used are inconsequential; the resulting model can be made up of any single classifier type.

Bagging is short for bootstrap aggregation, so named because it takes a number of samples from the dataset, with each sample set being regarded as a bootstrap sample. The results of these bootstrap samples are then aggregated.

5. Support Vector Machines

As mentioned, Support Vector Machines (SVMs) are a particular classification strategy. SMVs work by transforming the training dataset into a higher dimension, which is then inspected for the optimal separation boundary, or boundaries, between classes. In SVMs, these boundaries are referred to as hyperplanes, which are identified by locating support vectors, or the instances that most essentially define classes, and their margins, which are the lines parallel to the hyperplane defined by the shortest distance between a hyperplane and its support vectors. Consequently, SVMs are able to classify both linear and nonlinear data.

The grand idea with SVMs is that, with a high enough number of dimensions, a hyperplane separating a particular class from all others can always be found, thereby delineating dataset member classes. When repeated a sufficient number of times, enough hyperplanes can be generated to separate all classes in n-dimensional space. Importantly, SVMs look not just for any separating hyperplane but the maximum-margin hyperplane, being that which resides equidistance from respective class support vectors.

Central to SVMs is the kernel trick, which enables comparison between original data and potential higher dimensionality feature space transformations of this data in order to determine if such transformation facilitates the separation of data classes, which would put us in a position where hyperplanes could separate classes. The kernel trick is essential as it can make potentially otherwise intractable transformation computations feasible, with these comparisons of original and high-dimensional feature space date enabled by pairwise similarity comparisons.

When data is linearly-separable, there are many separating lines that could be chosen. Such a hyperplane can be expressed as

where W is a vector of weights, b is a scalar bias, and X are the training data (of the form (x1, x2, ...)). If our bias, b, is thought of as an additional weight, the equation can be expressed as

which can, in turn, be rewritten as a pair of linear inequalities, solving to greater or less than zero, either of which satisfied indicate that a particular point lies above or below the hyperplane, respectively. Finding the maximum-margin hyperplane, or the hyperplane that resides equidistance from the support vectors, is done by combining the linear inequalities into a single equation and transforming them into a constrained quadratic optimization problem, using a Lagrangian formulation and solving using Karush-Kuhn-Tucker conditions, which is beyond what we will discuss here.

So there you have 5 must-know algorithms for data scientists, with must-know being defined as the most utilized in practice, and the most utilized informed by the results of a recent KDnuggets survey. We will followup with the second part of this list in the coming weeks. Until then, we hope you find this list and simple explanations helpful, and that you are able to branch out to learn more about each of these form here.

Related: