Machine Learning & Artificial Intelligence: Main Developments in 2016 and Key Trends in 2017

As 2016 comes to a close and we prepare for a new year, check out the final instalment in our "Main Developments in 2016 and Key Trends in 2017" series, where experts weigh in with their opinions.

At KDnuggets, we try to keep our finger on the pulse of the main events and developments in industry, academia, and technology. We also do our best to look forward to key trends on the horizon.

We recently asked some of the leading experts in Big Data, Data Science, Artificial Intelligence, and Machine Learning for their opinion on the most important developments of 2016 and the key trends they see for 2017.

To catch up on the first two posts in the series, which outline expert opinions, see the following:

- Big Data: Main Developments in 2016 and Key Trends in 2017

- Data Science & Predictive Analytics: Main Developments in 2016 and Key Trends in 2017

In the final post of the series, we bring you the collected responses to the question:

"What were the main Artificial Intelligence/Machine Learning related events in 2016 and what key trends do you see in 2017?"

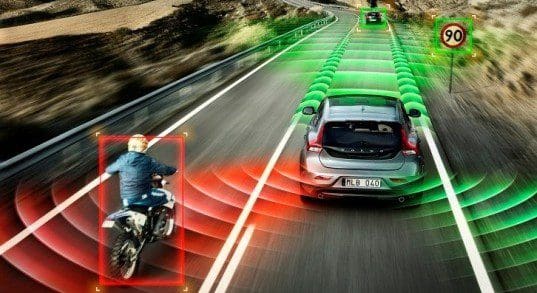

Common themes include the triumphs of deep neural networks, reinforcement learning's successes, AlphaGo as an exemplar of the power of both working in unison, the application of machine learning to the Internet of Things, self-driving vehicles, and automation, among others.

We generally asked participants to keep their responses to about 100 words, but were amenable to longer answers if the situation warranted. Without further delay, here is what we found.

Yaser Abu-Mostafa, Caltech (in consultation with Professor Hsuan-Tien Lin and Professor Malik Magdon-Ismail)

2016 and 2017 are an exciting time for Machine Learning. There are two trends that have been accelerating. First, the showcases that prove ML to be an extraordinarily powerful technology. The recent successes of AlphaGo and inhuman encryption are compelling examples. Second, the expanding reach of ML applications. More complex tasks, more domains, and more acceptance of ML as the way to exploit data everywhere. The Google/Microsoft/Facebook/IBM AI partnerships are there for a reason.

Xavier Amatriain, VP Engineering at Quora

2016 may very well go down in history as the year of “the Machine Learning hype”. Everyone now seems to be doing machine learning, and if they are not, they are thinking of buying a startup to claim they do.

Now, to be fair, there are reasons for much of that “hype”. Can you believe that it has been only a year since Google announced they were open sourcing TensorFlow? TF is already a very active project that is being used for anything ranging from drug discovery to generating music. Google has not been the only company open sourcing its ML software, though; many followed its lead. Microsoft open sourced CNTK, Baidu announced the release of PaddlePaddle, and Amazon just recently announced that they will back MXNet in their new AWS ML platform. Facebook, for its part, is basically supporting the development of not one, but two Deep Learning frameworks: Torch and Caffe. Google, meanwhile, is also supporting the highly successful Keras, so things are at least even between Facebook and Google on that front.

Besides the “hype” and the outpouring of support from companies for machine learning open source projects, 2016 has also seen a great many applications of machine learning that were almost unimaginable a few months ago. I was particularly impressed by the quality of WaveNet’s audio generation. Having worked on similar problems in the past, I can appreciate those results. I would also highlight some of the recent results in lip reading, a great application of video recognition that is likely to be very useful (and maybe scary) in the near future. I should also mention Google’s impressive advances in machine translation. It is amazing to see how much this area has improved in a year.

As a matter of fact, machine translation is not the only interesting advance we have seen in machine learning for language technologies this past year. I think it is very interesting to see some of the recent approaches that combine deep sequential networks with side information in order to produce richer language models. In “A Neural Knowledge Language Model”, Bengio’s team combines knowledge graphs with RNNs, and in “Contextual LSTM models for Large scale NLP Tasks”, the DeepMind folks incorporate topics into the LSTM model. We have also seen a lot of interesting work in modeling attention and memory for language models. As an example, I would recommend “Ask Me Anything: Dynamic Memory Networks for NLP”, presented at this year’s ICML.

I could not finish this review of 2016 without some mention of advances in my main area of expertise: Recommender Systems. Of course Deep Learning has also impacted this area. While I would still not recommend DL as the default approach to recommender systems, it is interesting to see how it is already being used in practice, and at large scale, by products like YouTube. That said, there has been interesting research in the area that is not related to Deep Learning. The best paper award at this year’s ACM RecSys went to “Local Item-Item Models For Top-N Recommendation”, an interesting extension to Sparse Linear Methods (SLIM) that uses an initial unsupervised clustering step. Also, “Field-aware Factorization Machines for CTR Prediction”, which describes the winning approach to the Criteo CTR Prediction Kaggle Challenge, is a good reminder that Factorization Machines are still a good tool to have in your ML toolkit.

I could probably go on for a couple of pages just listing impactful advances in machine learning in the last 12 months. Note that I haven’t even listed any of the breakthroughs related to image recognition or deep reinforcement learning, or obvious applications such as self-driving cars or game playing, which all saw huge advances in 2016. Not to mention all the controversy around the negative effects machine learning is having, or could have, on society, and the rise of discussions around algorithmic bias and fairness.

So, what should we expect for 2017? It is hard to say given how fast things are moving in the area. I am sure we will have a hard time just digesting what we will see at the NIPS conference in a few days. I am definitely looking forward to many machine learning advances in the areas that I care the most about: personalization/recommendations, and natural language processing. I am sure, for example, that in the next few months we will see how ML can tackle the problem of fake news. But, of course, I also hope to see more self-driving cars on the roads and machine learning being put to good use for health-related applications or for creating a better informed and more just society.

Yoshua Bengio, Professor, Department of Computer Science and Operations Research, Université de Montréal, Canada Research Chair in Statistical Learning Algorithms, etc.

The main events of 2016 from my point of view have been in the areas of deep reinforcement learning, generative models, and neural machine translation. First we had AlphaGo (DeepMind's network, which beat the Go world champion using deep RL). Over the whole year we have seen a series of papers showing the success of generative adversarial networks (for unsupervised learning of generative models). Also in the area of unsupervised learning, we have seen the unexpected success of autoregressive neural networks (like the WaveNet paper from DeepMind). Finally, just about a month ago we saw the crowning of neural machine translation (which was initiated in part by my lab in 2014), with Google bringing this technology to the scale of Google Translate and obtaining really amazing results (coming very significantly closer to human-level performance).

I believe these are good indicators for the progress to be expected in 2017: more advances in unsupervised learning (which remains a major challenge; we are very far from human abilities in that respect) and in the ability of computers to understand and generate natural language, probably first with chatbots and other dialogue systems. Another likely trend is the increase in research and results of applying deep learning in the healthcare domain, on a variety of types of data, including medical images, clinical data, genomic data, etc. Progress in computer vision will continue as we see more applications, including of course self-driving cars, but I have the impression that, in general, the community is underestimating the challenges ahead before reaching true autonomy.

Pedro Domingos, Professor of computer science at UW and author of 'The Master Algorithm'

The main event of 2016 was AlphaGo's win. Two areas where we might see substantial progress in 2017 are chatbots and self-driving cars, just because so many major companies are investing heavily in them. On the more fundamental side, one thing we'll probably see is increasing hybridization of deep learning with other ML/AI techniques, as is typical for a maturing technology.

Oren Etzioni, CEO of the Allen Institute for Artificial Intelligence. He was a Professor at U. Washington, founder/co-founder of several companies including Farecast and Decide, and the author of over 100 technical papers.

The tremendous success of AlphaGo is the crowning achievement for an exciting 2016. In 2017, we will see more reinforcement learning in neural networks, more research on neural networks in NLP & vision. However, the challenges of neural networks with limited labeled data, exemplified by systems like Semantic Scholar, remain formidable and will occupy us for years to come. These are still early days for Deep Learning and more broadly for Machine Learning.

Ajit Jaokar, Data Science, IoT, Machine Learning, Big Data, Mobile, Smart Cities, EdTech (@feynlabs + @countdowncode); Teaching (@forumoxford + @citysciences)

2017 will be a big year for both IoT and AI. As per my recent KDnuggets post, AI will be a core competency for enterprises. For IoT, this would mean the ability to build and deploy models across platforms (Cloud, Edge, Streaming). This ties continuous learning to the vision of continuous improvement through AI. It also requires new competencies as AI and DevOps converge.

Neil Lawrence, Professor of Machine Learning at the University of Sheffield

I think things are progressing much as we might expect at the moment. Deep learning methods are being intelligently deployed on very large data sets. For smaller data sets, I think we'll see some interesting directions on model repurposing, i.e., the reuse of pre-trained deep learning models. There are some interesting open questions around how best to do this. A further trend has been the increasing press focus on the field, including mainstream articles on papers placed on arXiv that have not yet been reviewed. This appetite for advances was also present last year, but I think this year we've seen it accelerate. In response, I think academics should probably become a lot more careful about how they choose to promote their work (for example on social media), particularly when it is unreviewed.

Randal Olson, Senior Data Scientist at the University of Pennsylvania Institute for Biomedical Informatics

Automated Machine Learning (AutoML) systems started becoming competitive with human machine learning experts in 2016. Earlier this year, an MIT group created a Data Science Machine that beat hundreds of teams in the popular KDD Cup and IJCAI machine learning competitions. Just this month, our in-house AutoML system, TPOT, started ranking in the 90th percentile on several Kaggle competitions. Needless to say, I am confident that AutoML systems will start replacing human experts for standard machine learning analyses in 2017.

Charles Martin, Data Scientist & Machine Learning Expert

2016 has been the watershed year for Deep Learning. We have had a year with Google TensorFlow, and the applications keep pouring in. Combined with, say, Keras, Jupyter Notebooks, and GPU-enabled AWS nodes, Data Science teams have the infrastructure on demand to start building truly innovative learning applications and start generating revenue fast. But do they have the talent? It is not about coding. It is not an infrastructure play. It is very different from traditional analytics, and no one really understands Why Deep Learning Works. Still, let's face it, it is all Google and Facebook talk about! And the C-suite is listening. In 2017, companies will be looking to bring best-of-breed Deep Learning technologies in-house to improve the bottom line.

Matthew Mayo, Data Scientist, Deputy Editor of KDnuggets

The big story of 2016 has to be the accelerated returns we are seeing from deep learning. The (not solely) neural network-based "conquering" of Go is likely the most prominent example, but there are others. Looking forward to 2017, I would expect that the continued advancements in neural networks will remain the big story. However, automated machine learning will quietly become an important event in its own right. Perhaps not as sexy to outsiders as deep neural networks, automated machine learning will begin to have far-reaching consequences in ML, AI, and data science, and 2017 will likely be the year this becomes apparent.

Brandon Rohrer, Data Scientist at Facebook

In 2016 machines read lips more accurately than humans (arxiv.org/pdf/1611.05358.pdf), typed from dictation faster than humans (arxiv.org/abs/1608.07323) and created eerily realistic human speech (arxiv.org/pdf/1609.03499.pdf). These are the results of exploring novel architectures and algorithms. Convolutional Neural Networks are being modified beyond recognition and combined with reinforcement learners and time-aware methods to open up new application areas. In 2017 I expect a few more human-level benchmarks to fall, particularly those that are vision-based and thus amenable to CNNs. I also expect (and hope!) that our community's forays into the nearby territories of decision making, non-vision feature creation and time-aware methods will become more frequent and fruitful. Together these make intelligent robots possible. If we are really lucky, 2017 will bring us a machine that can beat humans at making a cup of coffee.

Daniel Tunkelang, Data science, Engineering, and Leadership

The biggest story of 2016 was AlphaGo defeating Lee Sedol, the human world champion of Go. It was a surprise even to the AI community, and it will be remembered as the tipping point in the rise of deep learning. 2016 was the year of deep learning and AI. Chatbots, self-driving cars, and computer-aided diagnosis have unlocked the possibilities of what we can do by throwing enough GPUs at the right training data. 2017 will bring us successes and disillusionments. Technologies like TensorFlow will commodify deep learning, and AI will be something we take for granted in consumer products. But we'll hit the limits of what we can model and optimize. We'll have to confront the biases in our data. And we'll grow up and realize that we're nowhere close to general AI or the singularity.
