Are Deep Neural Networks Creative?

Deep neural networks routinely generate images and synthesize text. But does this amount to creativity? Can we reasonably claim that deep learning produces art?

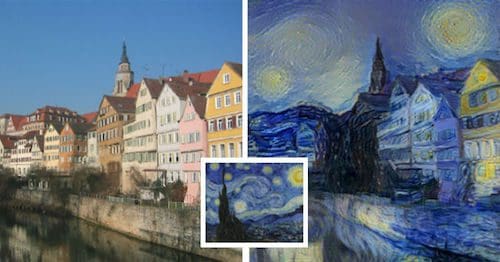

Are deep neural networks creative? It seems like a reasonable question. Google's "Inceptionism" technique transforms images by iteratively modifying them to enhance the activation of specific neurons in a deep net. The resulting images appear trippy, with rocks transformed into buildings and leaves into insects. Another neural generative model, introduced by Leon Gatys of the University of Tübingen in Germany, can extract the style from one image (say, a painting by Van Gogh) and apply it to the content of another image (say, a photograph).

Generative adversarial networks (GANs), introduced by Ian Goodfellow, are capable of synthesizing novel images by modeling the distribution of the images they are trained on. Additionally, character-level recurrent neural network (RNN) language models now permeate the internet, appearing to hallucinate passages of Shakespeare, Linux source code, and even Donald Trump's hateful Twitter ejaculations. Clearly, these advances emanate from interesting research and deserve the fascination they inspire.

In this blog post, rather than address the quality of the work (which is admirable) or explain the methods (which has been done ad nauseam), we'll instead address the question: can these nets reasonably be called "creative"? Already, some make the claim. The landing page for deepart.io, a site that commercializes the "Deep Style" work, proclaims "TURN YOUR PHOTOS INTO ART". If we accept creativity as a prerequisite for art, the claim is made here implicitly.

In an article on Engadget.com, Aaron Souppouris described a character-based RNN, suggesting that higher sampling temperatures make the network "more creative". In this view, creativity is the opposite of coherence.

Can we accept a view of creativity that consists primarily of stochasticity? Does it make any sense to accept a definition by which creativity is maximized by a random number generator? In this view, creativity reduces to entropy maximization. Interestingly, this seems opposite to the views on creativity espoused by deep learning pioneer Juergen Schmidhuber, who suggests that low entropy, in the form of short description length, is a defining characteristic of art. More importantly, it seems at odds with the notion of creativity we attribute to humans. It seems uncontroversial to label Charlie Parker, Beethoven, Dostoevsky, and Picasso as creative, and yet their work is clearly coherent.
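
To make the temperature argument concrete, here is a minimal sketch in Python. It assumes a model that emits a vector of logits over a small vocabulary; the four logit values and the helper names `softmax_with_temperature` and `entropy` are hypothetical, chosen only for illustration. Dividing the logits by a temperature T before the softmax leaves the ranking of characters unchanged but flattens the distribution as T grows, so the Shannon entropy climbs toward its maximum, log n, which is exactly the entropy of a uniform random number generator.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities after dividing them by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy of a discrete distribution, in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical logits for a 4-character vocabulary (illustrative values only).
logits = [4.0, 2.0, 1.0, 0.5]
max_entropy = math.log(len(logits))  # entropy of the uniform distribution

for t in [0.25, 1.0, 4.0, 100.0]:
    probs = softmax_with_temperature(logits, t)
    print(f"T={t:>6}: entropy={entropy(probs):.3f} nats (uniform: {max_entropy:.3f})")
```

At low temperature nearly all probability mass sits on the single most likely character; at very high temperature every character is close to equally likely, and the model's output is barely distinguishable from noise.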

Sampling is Not Enough

The algorithms to which we seem tempted to hastily ascribe creativity tend to fall into two classes. First, there are deterministic processes like Inceptionism and Deep Style. Then there are stochastic models, like RNN language models and generative adversarial networks, which model the distribution underlying the training data and provide a sampling mechanism for generating new images or text from the approximate distribution. Note: Given random auto-encoder weights, Deep Style could be grouped with the stochastic generating mechanisms. Similarly, Inceptionism could be made stochastic by randomly choosing the nodes to enhance.

In the deterministic case, creativity enters the process in two places. First, the creators of Inceptionism and Deep Style are themselves exceptionally creative. These papers introduced new and captivating tools that did not previously exist. Second, the wielder of these tools can make creative decisions about which images to process and which settings of the model's parameters to use. But of course, just as a camera cannot truthfully be described as creative, these tools have no agency. However impressive their capabilities, within a creative process these tools function as super-cool Photoshop filters; they do not themselves express creativity.

Assessing the creative capacity of stochastic models seems more difficult. The capacity of GANs and RNNs to generate images and text on their own, requiring only a random seed, captures our imaginations. Recent research papers and blog posts, including my own, tend to anthropomorphize these models, describing their generative process as an act of "hallucination". However, I'd argue that these models, while exciting and promising, do not meet a reasonable bar to be called "creative".

Consider the airplane. At one point, there were no airplanes on earth. Then some creative humans invented one. Of course, in a world without airplanes, no amount of random sampling from the distribution of existing technologies could produce an airplane. Creativity, as we apply the term most superlatively, refers not to those individuals who produce unbiased samples imitating that which exists, but to those with a capacity for divergent thinking, who produce ideas and art that are simultaneously compelling and surprising. Such work doesn't reflect the distribution of previous work; it shifts it. That is, following the invention of airplanes, the probability of observing one became substantially higher.

The same can be said of artists considered profoundly creative. Consider, for example, Bach, whose development of counterpoint altered musical composition, or Charlie Parker, whose improvisational language, termed bebop, altered instrumental improvisation across decades and musical styles. In contrast, artists and writers who convincingly imitate might more accurately be described as masterful than as creative. The papers introducing GANs and Deep Style are themselves creative precisely because they opened new areas of research.

Is it Possible to Build a Creative Machine?

It seems necessary to clarify that I hold no religious antagonism to the idea of a creative machine. The human brain is itself a creative machine, and it would be absurd to suggest that creativity is a property of carbon that could not be reproduced in silicon.

However, we should hesitate before attributing the human virtue of creativity to today's models. Deterministic image generators are fascinating but exhibit no agency. Stochastic models that produce realistic output might possess something akin to mastery. But for AI to exhibit creativity, it must do more than imitate. Perhaps reinforcement learners, upon discovering novel strategies, do exhibit creativity. AlphaGo, for example, achieved superhuman performance at Go, discovering strategies that diverged dramatically from those known to human players. This system might reasonably be called creative. Still, humans exhibit creativity not only in producing strategies but also in the broader domain of inventing problems to solve. It seems inevitable that truly creative AI will come. And while we should consider the possibility seriously, we should be wary of premature proclamations and the temptation to anthropomorphize our algorithms.

Zachary Chase Lipton is a PhD student in the Computer Science and Engineering department at the University of California, San Diego. Funded by the Division of Biomedical Informatics, he is interested in both theoretical foundations and applications of machine learning. In addition to his work at UCSD, he has interned at Microsoft Research Labs and as a Machine Learning Scientist at Amazon, and is a Contributing Editor at KDnuggets.