Deep Learning: The Free eBook

"Deep Learning" is the quintessential book for understanding deep learning theory, and you can still read it freely online.

In the past few weeks, KDnuggets has brought a selection of free data science-related ebooks to our readers. This week we continue this new tradition by looking at one of the most influential books in the field from the past five years.

Deep Learning, by Ian Goodfellow, Yoshua Bengio and Aaron Courville, was originally released in 2016 as one of the first books dedicated to the then-exploding field of deep learning. Not only was it among the first, it was also written by a team of standout researchers at the forefront of the field, and it has remained a highly influential and highly regarded work on deep neural networks.

This is a bottom-up, theory-heavy treatise on deep learning. This is not a book full of code and corresponding comments, or a surface-level, hand-wavy overview of neural networks. This is an in-depth, mathematics-based explanation of the field.

Like many others who set out to perfect their understanding of deep learning when it was released, I count this book as a personal favorite. I have never treated it as a book to read cover to cover in a single effort; instead, I find myself reading chapters and selections of chapters over long periods of time. In fact, though I have owned a paper copy of the book since it was first released, I admit that I have never read the entire book; on the other hand, there are a few chapters I have read more than once.

The book's table of contents is as follows:

- Introduction

Part I: Applied Math and Machine Learning Basics

- Linear Algebra

- Probability and Information Theory

- Numerical Computation

- Machine Learning Basics

Part II: Modern Practical Deep Networks

- Deep Feedforward Networks

- Regularization for Deep Learning

- Optimization for Training Deep Models

- Convolutional Networks

- Sequence Modeling: Recurrent and Recursive Nets

- Practical Methodology

- Applications

Part III: Deep Learning Research

- Linear Factor Models

- Autoencoders

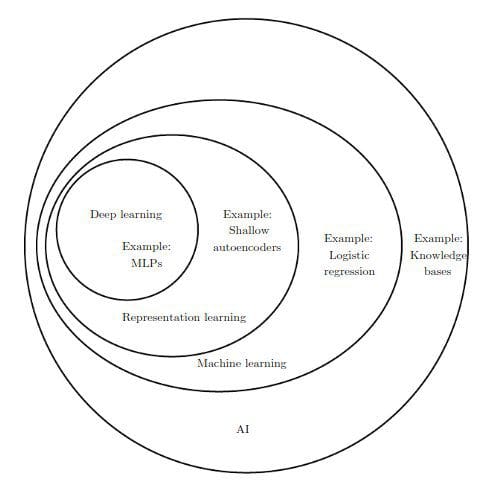

- Representation Learning

- Structured Probabilistic Models for Deep Learning

- Monte Carlo Methods

- Confronting the Partition Function

- Approximate Inference

- Deep Generative Models

I actually want to come clean about something at this point: this "ebook" isn't exactly an ebook at all. While the full book is available on its website, there is no single, bundled, downloadable collection of its chapters, owing to contractual reasons between the authors and their publisher. Instead, chapters can be freely read one by one on the website. If this poses a problem for you, or if you find the book worthy enough that you would like a physical copy for future reference, you can always shell out the cash for your very own.

The book's usefulness extends beyond deep learning. The chapter on machine learning basics, for example, is incredibly well written and one of the best introductions to the topic I have read, striking a good balance between approachability and in-depth explanation. It is also commendable that the book spends time on other important foundational topics such as linear algebra, probability, information theory, and numerical computation.

The book's introduction gives a rich and detailed overview of neural network history, which itself makes for an interesting read and provides a frozen-in-time perspective on the state of affairs when the book was written amid the deep learning explosion. Early in that introduction, Deep Learning sets out to dispel the "neural network as a biological brain" fallacy. This point was particularly important at the time of the book's original publication, when it was not broadly understood, and it now serves as a sign of how far expectations and general understanding of neural networks have come in a short time:

> Media accounts often emphasize the similarity of deep learning to the brain. While it is true that deep learning researchers are more likely to cite the brain as an influence than researchers working in other machine learning fields, such as kernel machines or Bayesian statistics, one should not view deep learning as an attempt to simulate the brain. Modern deep learning draws inspiration from many fields, especially applied math fundamentals like linear algebra, probability, information theory, and numerical optimization. While some deep learning researchers cite neuroscience as an important source of inspiration, others are not concerned with neuroscience at all.

If you are looking to learn deep neural networks from the bottom up, with a heavy focus on theory and gaining an understanding of the mathematics involved, Deep Learning is likely the book for you. Check out the book's website to see if you agree, and start reading the book for free today.

Related:

- Mathematics for Machine Learning: The Free eBook

- Dive Into Deep Learning: The Free eBook

- A Concise Course in Statistical Inference: The Free eBook
