Google’s Model Search is a New Open Source Framework that Uses Neural Networks to Build Neural Networks

The new framework brings state-of-the-art neural architecture search methods to TensorFlow.

Source: https://github.com/google/model_search

I recently started a new newsletter focused on AI education that already has over 50,000 subscribers. TheSequence is a no-BS (meaning no hype, no news, etc.) AI-focused newsletter that takes 5 minutes to read. The goal is to keep you up to date with machine learning projects, research papers, and concepts. Please give it a try by subscribing.

Automated machine learning (AutoML) is the idea of using machine learning to automate the creation of machine learning models. AutoML has become one of the hottest areas of research in deep learning. One AutoML subdiscipline that has been gaining a lot of momentum is neural architecture search (NAS). Conceptually, NAS searches for a neural network architecture suited to a given problem. The most successful NAS techniques use methods such as reinforcement learning or evolutionary algorithms, which have achieved state-of-the-art results in several domains. However, despite their success, NAS methods remain difficult to use.
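To make the evolutionary approach concrete, here is a minimal Python sketch of an aging-evolution loop (in the spirit of regularized evolution) over a toy search space. Everything in it is hypothetical and for illustration only: the three-knob search space, the `mutate` rule, and especially `evaluate`, which is a cheap stand-in for what would really be training each candidate network and measuring its validation accuracy. It is not Model Search's API.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical toy search space: three independent design decisions.
LAYER_CHOICES = [1, 2, 3, 4]
WIDTH_CHOICES = [32, 64, 128, 256]
ACTIVATIONS = ["relu", "tanh"]


@dataclass(frozen=True)
class Arch:
    num_layers: int
    width: int
    activation: str


def random_arch(rng: random.Random) -> Arch:
    """Sample an architecture uniformly from the search space."""
    return Arch(rng.choice(LAYER_CHOICES), rng.choice(WIDTH_CHOICES), rng.choice(ACTIVATIONS))


def mutate(arch: Arch, rng: random.Random) -> Arch:
    """Resample one randomly chosen decision (it may land on the same value)."""
    field = rng.choice(["num_layers", "width", "activation"])
    if field == "num_layers":
        return Arch(rng.choice(LAYER_CHOICES), arch.width, arch.activation)
    if field == "width":
        return Arch(arch.num_layers, rng.choice(WIDTH_CHOICES), arch.activation)
    return Arch(arch.num_layers, arch.width, rng.choice(ACTIVATIONS))


def evaluate(arch: Arch) -> float:
    """Hypothetical proxy score; a real NAS system would train the model here."""
    score = -((arch.num_layers - 3) ** 2) - abs(arch.width - 128) / 64
    return score + (0.5 if arch.activation == "relu" else 0.0)


def evolve(generations: int = 50, population_size: int = 8,
           sample_size: int = 3, seed: int = 0) -> Tuple[Arch, List[Arch]]:
    rng = random.Random(seed)
    population = [random_arch(rng) for _ in range(population_size)]
    history = list(population)  # every architecture evaluated so far
    for _ in range(generations):
        # Tournament selection: the best of a random sample becomes the parent.
        parent = max(rng.sample(population, sample_size), key=evaluate)
        child = mutate(parent, rng)
        population.append(child)
        population.pop(0)  # aging: retire the oldest member, not the worst
        history.append(child)
    return max(history, key=evaluate), history
```

Calling `best, history = evolve()` returns the highest-scoring architecture seen and every candidate evaluated along the way. Retiring the oldest member each generation, rather than the worst, is what distinguishes aging evolution from plain tournament evolution: an architecture that scored well once cannot dominate the population indefinitely.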

The challenges of implementing NAS are everywhere. For starters, few NAS frameworks are integrated into mainstream deep learning stacks such as TensorFlow or PyTorch. Also, many NAS implementations require substantial domain expertise just to define a sensible initial set of architectures for a given problem. Finally, NAS stacks are computationally expensive and difficult to use.

To overcome some of these challenges, Google Research recently open-sourced Model Search, a framework that helps data scientists find the optimal architecture for a deep learning problem quickly and cost-effectively. Model Search provides several capabilities that are essential for successful NAS experiments:

- Run several NAS algorithms for a given dataset.

- Compare different models produced during the search process.

- Create a custom search space for a given problem.
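A custom search space can be as simple as a mapping from each design decision to its allowed options. The sketch below, assuming a hypothetical four-decision space and a made-up `toy_score` in place of real training, shows how such a space can be enumerated, how one simple strategy (random search) samples from it, and how the resulting models can be compared on a leaderboard. Model Search has its own mechanism for defining search spaces, which this plain-Python illustration does not attempt to reproduce.

```python
import itertools
import math
import random
from typing import Any, Callable, Dict, Iterator, List, Sequence, Tuple

Config = Dict[str, Any]
Result = Tuple[float, Config]

# Hypothetical custom search space: design decision -> allowed options.
SEARCH_SPACE: Dict[str, Sequence[Any]] = {
    "block_type": ["dense", "conv1d", "lstm"],
    "num_blocks": [1, 2, 3],
    "units": [64, 128, 256],
    "dropout": [0.0, 0.2],
}


def space_size(space: Dict[str, Sequence[Any]]) -> int:
    """Number of distinct architectures the space can express."""
    return math.prod(len(options) for options in space.values())


def all_configs(space: Dict[str, Sequence[Any]]) -> Iterator[Config]:
    """Enumerate every configuration (only feasible for tiny spaces)."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def random_search(space: Dict[str, Sequence[Any]], score_fn: Callable[[Config], float],
                  trials: int, seed: int = 0) -> List[Result]:
    """Sample `trials` configurations independently and score each one."""
    rng = random.Random(seed)
    results = []
    for _ in range(trials):
        config = {name: rng.choice(list(options)) for name, options in space.items()}
        results.append((score_fn(config), config))
    return results


def leaderboard(results: List[Result], top_k: int = 3) -> List[Result]:
    """Rank models by score, highest first."""
    return sorted(results, key=lambda r: r[0], reverse=True)[:top_k]


def toy_score(config: Config) -> float:
    """Hypothetical stand-in for validation accuracy after training."""
    base = {"dense": 0.80, "conv1d": 0.85, "lstm": 0.83}[config["block_type"]]
    bonus = 0.01 * config["num_blocks"] + (0.02 if config["units"] == 128 else 0.0)
    return base + bonus - 0.05 * config["dropout"]
```

For example, `leaderboard(random_search(SEARCH_SPACE, toy_score, trials=10))` returns the three best of ten sampled models, while scoring every entry of `all_configs(SEARCH_SPACE)` gives an exhaustive baseline for this 54-point space. Realistic NAS spaces are far too large to enumerate, which is why the choice of search algorithm matters.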

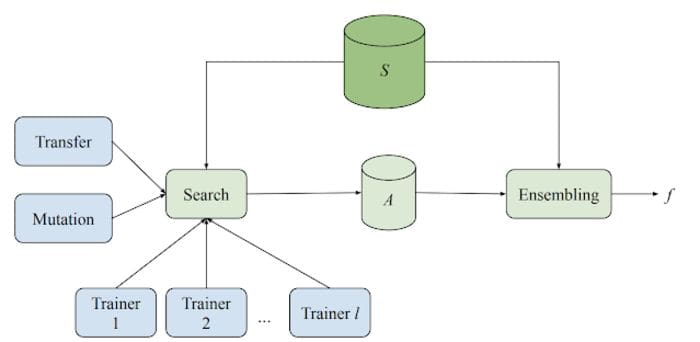

The Model Search architecture is based on four foundational components:

- Model Trainers: These components train and evaluate models asynchronously.

- Search Algorithms: The search algorithms select the best-performing trained architectures, apply “mutations” to them, and send the results back to the trainers for further evaluation.

- Transfer Learning Algorithm: Model Search uses transfer learning techniques such as knowledge distillation to reuse knowledge across different experiments.

- Model Database: The model database persists experiment results so they can be reused across different search cycles.

Source: https://ai.googleblog.com/2021/02/introducing-model-search-open-source.html
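Knowledge distillation, one of the transfer-learning techniques mentioned in the component list, trains a student model to match a teacher's softened output distribution in addition to the true labels. Below is a minimal pure-Python sketch of the widely used distillation loss with a temperature and a mixing weight `alpha`. The defaults `temperature=2.0` and `alpha=0.5` are arbitrary assumptions, and how Model Search actually wires distillation between candidate models may differ.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperatures give flatter distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
                      label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    # Hard-label term: ordinary cross-entropy against the true class.
    hard = -math.log(softmax(student_logits)[label])
    # Soft-label term: match the teacher's softened distribution.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    return alpha * hard + (1 - alpha) * soft
```

The `temperature ** 2` factor keeps the soft term's gradient magnitude roughly comparable to the hard-label term as the temperature changes, which is why it appears in the usual formulation.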

Model Search is built on TensorFlow, which allows data scientists to interoperate fully with modern deep learning techniques. The open source release was accompanied by a research paper that explains the intricacies of Model Search. In the still-nascent world of AutoML, Model Search is one of the most compelling efforts to create a consistent and extensible experience for using NAS techniques. I really hope that Google doubles down on this effort.

Original. Reposted with permission.
