Deep Learning, Artificial Intuition and the Quest for AGI

Deep Learning systems exhibit behavior that appears biological despite not being based on biological material. It so happens that humanity has stumbled upon Artificial Intuition in the form of Deep Learning.


There is a renaissance happening in the field of artificial intelligence, although for many long-term practitioners in the field it is not obvious. I wrote earlier about the pushback that many have mounted against developments in Deep Learning. Deep Learning is, however, a radical departure from classical methods. One researcher who recognized that our classical approaches to Artificial General Intelligence (AGI) were all but broken is Monica Anderson.

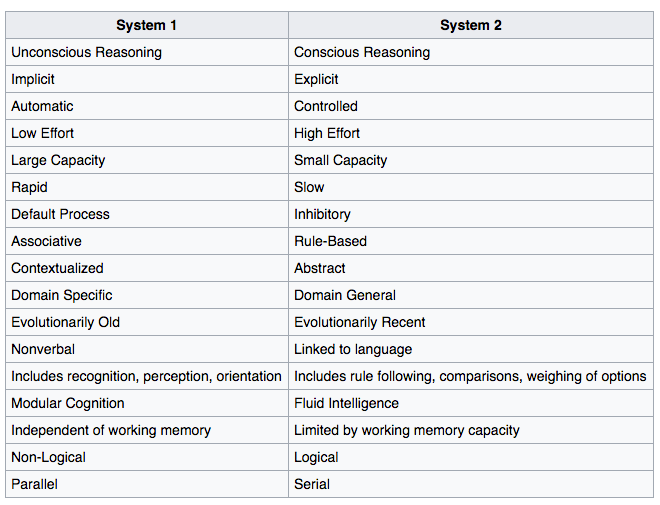

Anderson is one of the few researchers who recognized early on that the scientific approach of reductionism was fatally flawed and was leading research astray in our quest for AGI. A very good analogy that highlights the difference between Deep Learning and classical A.I. approaches is the difference between intuition and logic. Dual Process Theory posits two kinds of cognition: a fast, automatic, intuitive kind (often called System 1) and a slow, deliberate, logical kind (often called System 2).


Classical A.I. techniques have focused mostly on the logical basis of cognition; Deep Learning, by contrast, operates in the realm of cognitive intuition. Deep Learning systems exhibit behavior that appears biological despite not being based on biological material. It so happens that humanity has stumbled upon Artificial Intuition in the form of Deep Learning.

The argument that Anderson puts forward is that to build systems capable of intuition, one cannot depend on constructions based on ‘Reductionist Methods.’ Note that Anderson also recognizes Deep Learning as an alternative approach to Artificial Intuition. Anderson characterizes Reductionist methods by the following properties:

Optimality: We strive to get the best possible answer.

Completeness: We strive to get all answers.

Repeatability: We expect to get the same result every time we repeat an experiment under the same conditions.

Timeliness: We expect to get the result in bounded time.

Parsimony: We strive to discover the simplest theory that fully explains the available data.

Transparency: We want to understand how we arrived at the result.

Scrutability: We want to understand the result.

Anderson conjectures that the logic-based approach needs to be abandoned in favor of an alternative ‘model-free’ approach; that is, intuition-based cognition cannot arise from reductionist principles. What Anderson describes as ‘model-free’ are ‘unintelligent components.’ As she writes:

If you are attempting to build an intelligent system from intelligent components then you are just pushing the problems down one level.

Anderson proposes several ‘model-free’ mechanisms whose combination can lead to the emergent behavior we see in intuition. Here are the mechanisms that I speculate are being explored:

Trial-and-error (aka Generate-and-test), Enumeration, Remembering failures, Remembering successes, Table lookup, Mindless copying, Discovery, Recognition, Learning, Abstraction, Adaptation, Evolution, Narrative, Consultation, Delegation and Markets.

Note that the details of Anderson’s strategy have not been made public, for intellectual property reasons. I do agree that there are computational mechanisms, such as those listed above, that are simple (or unintelligent) in themselves yet can lead to complex (or intelligent) emergent behavior. One need only recall that universal computation can arise from just three fundamental mechanisms: computation, signaling and memory.
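Anderson has not published her mechanisms, so the following is only our own minimal sketch of what two of the listed ones, trial-and-error (generate-and-test) combined with remembering failures and successes, might look like in Python. The task (recovering a hypothetical target string), the per-position pass/fail test and the function name `generate_and_test` are all assumptions made for illustration; no single part of it is "intelligent."

```python
import random
import string

ALPHABET = string.ascii_lowercase + " "

def generate_and_test(target: str, seed: int = 0):
    """Hypothetical toy task: recover `target` one position at a time using only
    'unintelligent' parts: random generation, a pass/fail test, and memories
    of past failures and successes."""
    if any(ch not in ALPHABET for ch in target):
        raise ValueError("target must use lowercase letters and spaces only")
    rng = random.Random(seed)
    successes = []  # remembered successes: the characters found so far
    trials = 0
    for wanted in target:
        failures = set()  # remembered failures for the current position
        while True:
            trials += 1
            # Generate: propose a character not already known to fail here.
            guess = rng.choice([c for c in ALPHABET if c not in failures])
            # Test: the only feedback is pass or fail for this position.
            if guess == wanted:
                successes.append(guess)  # keep what worked
                break
            failures.add(guess)  # never retry a known failure
    return "".join(successes), trials
```

For example, `found, trials = generate_and_test("hello world")` recovers the string and reports how many guesses it took. Remembering failures is what turns blind guessing into a bounded search: each position needs at most 27 trials (about 14 on average), whereas memoryless guessing from the same 27-character alphabet needs about 27 on average.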

I agree, though, that limitations in present-day mathematics serve as a seemingly insurmountable barrier to theoretical progress. This does not imply, however, that we cannot make progress despite the weakness of our mathematical tools. On the contrary, Deep Learning researchers have made considerable progress through computational experiments. Engineering and practice have far outpaced theory, and this will continue to be the trend. I’ve written earlier about three areas that we have trouble analyzing: the notion of time, emergent collective behavior and meta-level reasoning. We are thus at a fork in the road: we can either wait for our mathematical language to make a quantum leap, or we can soldier on, accepting that beyond a certain point analytic techniques have limited power in the realm of universal computation.

My personal bias is that a method should not require its agents to have global knowledge in order to succeed. In other words, every agent should decide its actions based solely on local knowledge, and global behavior should emerge from the local interactions of the constituents. Many of the alternative models that have been proposed carry an underlying assumption that their agents need some global knowledge to function. I am also biased towards methods that are known to work. So far, Deep Learning is the only Artificial Intuition that has been shown to work very well. The one conceptual problem with DL, though, is that SGD requires global knowledge that gets back-propagated to its constituents. This requirement, however, is likely to be relaxed in Modular Deep Learning systems. I therefore prefer the term ‘localized models’ to ‘model-free models’, even though both imply that the constituent parts lack intelligence. In general, this is similar to the concept of parsimony, in that we seek the simplest mechanisms that give rise to emergent properties.
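The contrast between local and global knowledge can be made concrete with a small Python sketch. It compares Oja's rule, a well-known local Hebbian-style update, with the backpropagated gradient for a hidden weight in a two-layer linear network. The function names, the toy data and the hyperparameters are our own assumptions, and neither rule is presented as the mechanism that Modular Deep Learning systems would actually use.

```python
import math
import random

def oja_update(w, x, lr):
    """Local rule (Oja's rule): weight w[i] changes using only its own input
    x[i], its own value w[i] and the neuron's output y. No error signal
    arrives from anywhere else in the network."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]

def backprop_hidden_update(W, v, x, target, lr):
    """Non-local rule: one gradient step on 0.5 * (y - target)**2 for a
    2-layer linear net y = v . (W x). The update for hidden weight W[j][i]
    needs the output error (y - target) and the downstream weight v[j],
    neither of which is available at that synapse."""
    h = [sum(wji * xi for wji, xi in zip(row, x)) for row in W]
    y = sum(vj * hj for vj, hj in zip(v, h))
    err = y - target  # global error, sent back to every hidden weight
    return [[wji - lr * err * v[j] * xi for wji, xi in zip(W[j], x)]
            for j in range(len(W))]

def train_oja(steps=5000, lr=0.005, seed=0):
    """Feed the local rule toy 2-D samples whose variance is largest along (1, 1)."""
    rng = random.Random(seed)
    u = (1 / math.sqrt(2), 1 / math.sqrt(2))   # dominant direction
    p = (1 / math.sqrt(2), -1 / math.sqrt(2))  # minor direction
    w = [rng.uniform(-0.5, 0.5), rng.uniform(-0.5, 0.5)]
    for _ in range(steps):
        a, b = rng.gauss(0, 2.0), rng.gauss(0, 0.5)
        x = [a * u[0] + b * p[0], a * u[1] + b * p[1]]
        w = oja_update(w, x, lr)
    return w
```

Running `train_oja()` should leave `w` close to unit length and pointing (up to sign) along the dominant direction (1, 1)/√2, even though each weight only ever saw local quantities. The backpropagation step, by contrast, cannot be computed at all without the global error `err` and the other layer's weights `v`.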

My current analysis, based on a meta meta-model deconstruction of Deep Learning, is that we are still missing the building-block mechanisms that would lead to capabilities such as domain adaptation, transfer learning, continuous learning and multitask learning. In fact, we still lack a solid grasp of unsupervised learning (i.e. predictive learning). These mechanisms may emerge from our existing building blocks, but it is equally likely that they will be discovered by accident, much like the discoveries made in Conway’s Game of Life, such as the ‘spaceship’ patterns that travel across its grid.


Despite the simplicity of this cellular automaton, we are largely unable to explain why it behaves the way it does. There is very little theory we can bank on. I believe a similar situation exists in our quest for AGI. It is possible that, as with the Game of Life, we already have all the building blocks. Alternatively, there are mechanisms that have yet to be discovered, much as the Game of Life’s spaceships were. This should explain why progress toward AGI is so unpredictable: we may be just one accidental discovery away from achieving it.
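To show just how little machinery the Game of Life needs, here is a minimal Python sketch of its complete update rule (birth on exactly 3 live neighbours, survival on 2 or 3) together with the glider, the smallest spaceship. This is our own illustration rather than the figure from the original post; the names `step`, `run` and `GLIDER` are hypothetical.

```python
from collections import Counter

def step(live):
    """Advance Conway's Game of Life by one generation on an unbounded grid.
    `live` is a set of (row, col) coordinates of live cells."""
    # For every cell adjacent to a live cell, count its live neighbours.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 live neighbours; survival on 2 or 3. Cells absent
    # from `counts` have no live neighbours and are dead next generation.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

def run(live, generations):
    """Apply `step` repeatedly and return the final set of live cells."""
    for _ in range(generations):
        live = step(live)
    return live

# The glider:
#   . # .
#   . . #
#   # # #
GLIDER = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
```

`run(GLIDER, 4)` returns the same five-cell shape shifted one row down and one column to the right. Nothing in the two-line rule mentions motion, yet the pattern travels indefinitely, which is exactly the kind of emergent behavior that had to be found by exploration rather than derived.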

There is one additional topic I should cover before ending this article: the notion of consciousness, which I discussed in a previous post, “Modular Deep Learning could be the Penultimate Step to Consciousness”. In that post, I brought up two theories that offer explanations of consciousness: the IIT (Integrated Information Theory) model and Schmidhuber’s dual RNN model. In the Dual Process Theory model, consciousness arises from logical cognition. Curiously enough, all three theories include a mechanism to track causality between events. It seems that you cannot separate the notion of consciousness from the notion of tracking cause and effect. More abstractly, tracking behavior that evolves over time seems to be an essential ingredient.


Bio: Carlos Perez is a software developer presently writing a book on "Design Patterns for Deep Learning". This is where he sources his ideas for his blog posts.

Original. Reposted with permission.

Related:

- Why Deep Learning is Radically Different From Machine Learning

- The Five Capability Levels of Deep Learning Intelligence

- Game Theory Reveals the Future of Deep Learning