Types of Bias in Machine Learning

In one of my previous posts I talked about the biases that are to be expected in machine learning and can actually help build a better model. This follow-up post covers some of the biases to avoid.

1. Sample Bias

We all have to consider sampling bias in our training data as a result of human input. Machine learning models are predictive engines trained on large amounts of historical data, and they can only predict what they have been trained to predict. These predictions are only as reliable as the humans collecting and analyzing the data. Decision makers have to remember that if humans are involved at any part of the process, there is a greater chance of bias in the model.

The sample data used for training has to represent the real scenario as closely as possible. Many factors can bias a sample from the beginning, and those factors differ by domain (e.g. business, security, medical, education).
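One simple way to check how closely a sample matches the real scenario is to compare the share of each group in the training data against a reference share for the population. The sketch below is only an illustration: the function name `representation_gaps`, the `urban`/`rural` groups, and the reference shares are all hypothetical, and in practice the reference figures would have to come from a trusted source for your domain.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return (sample share - reference share) for each reference group.

    A positive value means the group is over-represented in the sample,
    a negative value means it is under-represented.
    """
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


# Hypothetical example: 10 training records and assumed population shares.
sample = ["urban"] * 8 + ["rural"] * 2
reference = {"urban": 0.5, "rural": 0.5}

gaps = representation_gaps(sample, reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A large gap does not prove the model will be biased, but it is a signal that the sample may not represent the real scenario and deserves a closer look before training.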

2. Prejudice Bias

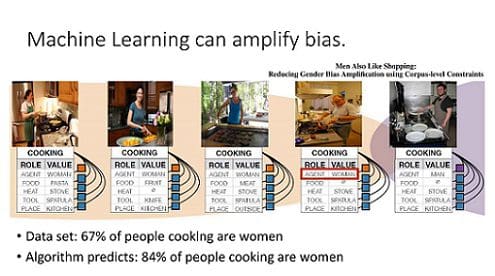

This again is a result of human input. Prejudice bias occurs as a result of cultural stereotypes held by the people involved in the process. Social class, race, nationality and gender can creep into a model and completely and unjustly skew its results. Unfortunately, it is not hard to believe that this may have been intentional in some cases, or simply neglected throughout the whole process in others.

Using some of these factors in statistical modelling for research purposes, or to understand a situation at a point in time, is completely different from predicting who should get a loan when the training data is skewed against people of a certain race, gender and/or nationality.

3. Confirmation Bias

Confirmation bias is the tendency to process information by looking for, or interpreting, information that is consistent with one’s existing beliefs. Source: https://www.britannica.com/science/confirmation-bias

This is a well-known bias that has been studied in the field of psychology, and it is directly applicable to machine learning. If the intended users have a pre-existing hypothesis that they would like to confirm with machine learning (there are probably simple ways to do that, depending on the context), the people involved in the modelling process might be inclined to intentionally manipulate the process towards finding that answer. I personally think this is more common than we assume, because many of us in industry may be pressured to get a certain answer before even starting the process, rather than looking at what the data is actually saying.

4. Group Attribution Bias

This type of bias results from training a model on data that contains an asymmetric view of a certain group. For example, if in a sample dataset the majority of one gender is more successful than the other, or the majority of one race earns more than another, your model will be inclined to believe these falsehoods. There is label bias in these cases. In practice, these sorts of labels should not make it into a model in the first place. The sample used to understand and analyse the current situation cannot simply be reused as training data without appropriate pre-processing to account for any potential unjust bias. Machine learning models are becoming more ingrained in society, often without the ordinary person even knowing, which makes group attribution bias likely to punish a person unjustly if the necessary steps were not taken to account for the bias in the training data.
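Before reusing a sample as training data, it can help to look at how the positive label is distributed across groups. The sketch below computes the positive-label rate per group and the ratio between the lowest and highest rate. Everything in it is hypothetical: the helper `positive_rate_by_group`, the `group` and `approved` field names, and the eight records are made up for illustration.

```python
from collections import defaultdict


def positive_rate_by_group(records, group_key, label_key):
    """Return the fraction of positive labels for each group in records."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        if row[label_key]:
            positives[row[group_key]] += 1
    return {group: positives[group] / totals[group] for group in totals}


# Hypothetical labelled sample: group A is approved far more often than group B.
records = [
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 0},
    {"group": "B", "approved": 1},
    {"group": "B", "approved": 0},
    {"group": "B", "approved": 0},
    {"group": "B", "approved": 0},
]

rates = positive_rate_by_group(records, "group", "approved")
# Ratio of the lowest to the highest rate; values near 1.0 mean similar rates.
ratio = min(rates.values()) / max(rates.values())

print(rates)
print(f"lowest/highest rate ratio: {ratio:.2f}")
```

A ratio far below 1.0 does not by itself show that the labels are unjust, but it flags an asymmetry that should be understood and, where appropriate, handled in pre-processing before the data is used to train a model.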
