Tokenization and Text Data Preparation with TensorFlow & Keras

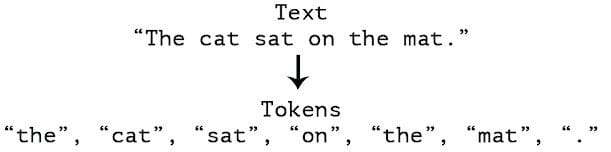

This article will look at tokenizing and further preparing text data for feeding into a neural network using TensorFlow and Keras preprocessing tools.

In the past we have had a look at a general approach to preprocessing text data, which focused on tokenization, normalization, and noise removal. We then followed that up with an overview of text data preprocessing using Python for NLP projects, which is essentially a practical implementation of the framework outlined in the former article, and which encompasses a mainly manual approach to text data preprocessing. We have also had a look at what goes into building an elementary text data vocabulary using Python.

There are numerous tools available for automating much of this preprocessing and text data preparation, however. These tools existed prior to the publication of those articles for certain, but there has been an explosion in their proliferation since. Since much NLP work is now accomplished using neural networks, it makes sense that neural network implementation libraries such as TensorFlow — and also, yet simultaneously, Keras — would include methods for achieving these preparation tasks.

This article will look at tokenizing and further preparing text data for feeding into a neural network using TensorFlow and Keras preprocessing tools. While the additional concept of creating and padding sequences of encoded data for neural network consumption were not treated in these previous articles, it will be added herein. Conversely, while noise removal was covered in the previous articles, it will not be here. What constitutes noise in text data can be a task-specific undertaking, and the previous treatment of this topic is still relevant as it is.

For what we will accomplish today, we will make use of 2 Keras preprocessing tools: the Tokenizer class, and the pad_sequences module.

Instead of using a real dataset, either a TensorFlow inclusion or something from the real world, we use a few toy sentences as stand-ins while we get the coding down. Next time we can extend our code to both use a real dataset and perform some interesting tasks, such as classification or something similar. Once this process is understood, extending it to larger datasets is trivial.

Let's start with the necessary imports and some "data" for demonstration.

from tensorflow.keras.preprocessing.text import Tokenizer from tensorflow.keras.preprocessing.sequence import pad_sequences train_data = [ "I enjoy coffee.", "I enjoy tea.", "I dislike milk.", "I am going to the supermarket later this morning for some coffee." ] test_data = [ "Enjoy coffee this morning.", "I enjoy going to the supermarket.", "Want some milk for your coffee?" ]

Next, some hyperparameters for performing tokenization and preparing the standardized data representation, with explanations below.

num_words = 1000 oov_token = '<UNK>' pad_type = 'post' trunc_type = 'post'

num_words = 1000

This will be the maximum number of words from our resulting tokenized data vocabulary which are to be used, truncated after the 1000 most common words in our case. This will not be an issue in our small dataset, but is being shown for demonstration purposes.oov_token = <UNK>

This is the token which will be used for out of vocabulary tokens encountered during the tokenizing and encoding of test data sequences, created using the word index built during tokenization of our training data.pad_type = 'post'

When we are encoding our numeric sequence representations of the text data, our sentences (or arbitrary text chunk) lengths will not be uniform, and so we will need to select a maximum length for sentences and pad unused sentence positions in shorter sentences with a padding character. In our case, our maximum sentence length will be determined by searching our sentences for the one of maximum length, and padding characters will be '0'.trunc_type = 'post'

As in the above, when we are encoding our numeric sequence representations of the text data, our sentences (or arbitrary text chunk) lengths will not be uniform, and so we will need to select a maximum length for sentences and pad unused sentence positions in shorter sentences with a padding character. Whether we pre-pad or post-pad sentences is our decision to make, and we have selected 'post', meaning that our sentence sequence numeric representations corresponding to word index entries will appear at the left-most positions of our resulting sentence vectors, while the padding characters ('0') will appear after our actual data at the right-most positions of our resulting sentence vectors.

Now let's perform the tokenization, sequence encoding, and sequence padding. We will walk through this code chunk by chunk below.

# Tokenize our training data

tokenizer = Tokenizer(num_words=num_words, oov_token=oov_token)

tokenizer.fit_on_texts(train_data)

# Get our training data word index

word_index = tokenizer.word_index

# Encode training data sentences into sequences

train_sequences = tokenizer.texts_to_sequences(train_data)

# Get max training sequence length

maxlen = max([len(x) for x in train_sequences])

# Pad the training sequences

train_padded = pad_sequences(train_sequences, padding=pad_type, truncating=trunc_type, maxlen=maxlen)

# Output the results of our work

print("Word index:\n", word_index)

print("\nTraining sequences:\n", train_sequences)

print("\nPadded training sequences:\n", train_padded)

print("\nPadded training shape:", train_padded.shape)

print("Training sequences data type:", type(train_sequences))

print("Padded Training sequences data type:", type(train_padded))

Here's what's happening chunk by chunk:

# Tokenize our training data

This is straightforward; we are using the TensorFlow (Keras)Tokenizerclass to automate the tokenization of our training data. First we create theTokenizerobject, providing the maximum number of words to keep in our vocabulary after tokenization, as well as an out of vocabulary token to use for encoding test data words we have not come across in our training, without which these previously-unseen words would simply be dropped from our vocabulary and mysteriously unaccounted for. To learn more about other arguments for the TensorFlow tokenizer, check out the documentation. After theTokenizerhas been created, we then fit it on the training data (we will use it later to fit the testing data as well).# Get our training data word index

A byproduct of the tokenization process is the creation of a word index, which maps words in our vocabulary to their numeric representation, a mapping which will be essential for encoding our sequences. Since we will reference this later to print out, we assign it a variable here to simplify a bit.# Encode training data sentences into sequences

Now that we have tokenized our data and have a word to numeric representation mapping of our vocabulary, let's use it to encode our sequences. Here, we are converting our text sentences from something like "My name is Matthew," to something like "6 8 2 19," where each of those numbers match up in the index to the corresponding words. Since neural networks work by performing computation on numbers, passing in a bunch of words won't work. Hence, sequences. And remember that this is only the training data we are working on right now; testing data is necessarily tokenized and encoded afterwards, below.# Get max training sequence length

Remember when we said we needed to have a maximum sequence length for padding our encoded sentences? We could set this limit ourselves, but in our case we will simply find the longest encoded sequence and use that as our maximum sequence length. There would certainly be reasons you would not want to do this in practice, but there would also be times it would be appropriate. Themaxlenvariable is then used below in the actual training sequence padding.# Pad the training sequences

As mentioned above, we need our encoded sequences to be of the same length. We just found out the length of the longest sequence, and will use that to pad all other sequences with extra '0's at the end ('post') and will also truncate any sequences longer than maximum length from the end ('post') as well. Here we use the TensorFlow (Keras)pad_sequencesmodule to accomplish this. You can look at the documentation for additional padding options.# Output the results of our work

Now let's see what we've done. We would expect to note the longest sequence and the padding of those which are shorter. Also note that when padded, our sequences are converted from Python lists to Numpy arrays, which is helpful since that is what we will ultimately feed into our neural network. The shape of our training sequences matrix is the number of sentences (sequences) in our training set (4) by the length of our longest sequence (maxlen, or 12).

Word index:

{'<UNK>': 1, 'i': 2, 'enjoy': 3, 'coffee': 4, 'tea': 5, 'dislike': 6, 'milk': 7, 'am': 8, 'going': 9, 'to': 10, 'the': 11, 'supermarket': 12, 'later': 13, 'this': 14, 'morning': 15, 'for': 16, 'some': 17}

Training sequences:

[[2, 3, 4], [2, 3, 5], [2, 6, 7], [2, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 4]]

Padded training sequences:

[[ 2 3 4 0 0 0 0 0 0 0 0 0]

[ 2 3 5 0 0 0 0 0 0 0 0 0]

[ 2 6 7 0 0 0 0 0 0 0 0 0]

[ 2 8 9 10 11 12 13 14 15 16 17 4]]

Padded training shape: (4, 12)

Training sequences data type: <class 'list'>

Padded Training sequences data type: <class 'numpy.ndarray'>

Now let's use our tokenizer to tokenize the test data, and then similarly encode our sequences. This is all quite similar to the above. Note that we are using the same tokenizer we created for training in order to facilitate simpatico between the 2 datasets, using the same vocabulary. We also pad to the same length and specifications as the training sequences.

test_sequences = tokenizer.texts_to_sequences(test_data)

test_padded = pad_sequences(test_sequences, padding=pad_type, truncating=trunc_type, maxlen=maxlen)

print("Testing sequences:\n", test_sequences)

print("\nPadded testing sequences:\n", test_padded)

print("\nPadded testing shape:",test_padded.shape)

Testing sequences: [[3, 4, 14, 15], [2, 3, 9, 10, 11, 12], [1, 17, 7, 16, 1, 4]] Padded testing sequences: [[ 3 4 14 15 0 0 0 0 0 0 0 0] [ 2 3 9 10 11 12 0 0 0 0 0 0] [ 1 17 7 16 1 4 0 0 0 0 0 0]] Padded testing shape: (3, 12)

Can you see, for instance, how having different lengths of padded sequences between training and testing sets would cause a problem?

Finally, let's check out are encoded test data.

for x, y in zip(test_data, test_padded):

print('{} -> {}'.format(x, y))

print("\nWord index (for reference):", word_index)

Enjoy coffee this morning. -> [ 3 4 14 15 0 0 0 0 0 0 0 0]

I enjoy going to the supermarket. -> [ 2 3 9 10 11 12 0 0 0 0 0 0]

Want some milk for your coffee? -> [ 1 17 7 16 1 4 0 0 0 0 0 0]

Word index (for reference): {'<UNK>': 1, 'i': 2, 'enjoy': 3, 'coffee': 4, 'tea': 5, 'dislike': 6, 'milk': 7, 'am': 8, 'going': 9, 'to': 10, 'the': 11, 'supermarket': 12, 'later': 13, 'this': 14, 'morning': 15, 'for': 16, 'some': 17}

Note that, since we are encoding some words in the test data which were not seen in the training data, we now have some out of vocabulary tokens which we encoded as <UNK> (specifically 'want', for example).

Now that we have padded sequences, and more importantly know how to get them again with different data, we are ready to do something with them. Next time, we will replace the toy data we were using this time with actual data, and with very little change to our code (save the possible necessity of classification labels for our train and test data), we will move forward with an NLP task of some sort, most likely classification.

Related:

- 10 Python String Processing Tips & Tricks

- Getting Started with Automated Text Summarization

- How to Create a Vocabulary for NLP Tasks in Python