Can Neural Networks Show Imagination? DeepMind Thinks They Can

DeepMind has done some of the most relevant work on simulating imagination in deep learning systems.


Creating agents that resemble the cognitive abilities of the human brain has been one of the most elusive goals of the artificial intelligence (AI) space. Recently, I’ve been spending time on a couple of scenarios that relate to imagination in deep learning systems, which reminded me of a very influential paper on this subject that Alphabet’s subsidiary DeepMind published last year.

Imagination is one of those magical features of the human mind that differentiate us from other species. From the neuroscience standpoint, imagination is the ability of the brain to form images or sensations without any immediate sensory input. Imagination is a key element of our learning process, as it enables us to apply knowledge to specific problems and better plan for future outcomes. As we execute tasks in our daily lives, we are constantly “imagining” potential outcomes in order to optimize our actions. Not surprisingly, imagination is often perceived as a foundational enabler of planning from a cognitive standpoint.

Incorporating imagination into artificial intelligence (AI) agents has long been an elusive goal of researchers in the space. Imagine (very appropriately) AI programs that are able not only to learn new tasks but also to plan and reason about the future. Recently, we have seen some impressive results in the area of adding imagination to AI agents in systems such as AlphaGo. It is precisely the DeepMind team that has been helping to formulate the initial theory of imagination-augmented AI agents. Last year, they published a new revision of a famous research paper that outlined one of the first neural network architectures to achieve this goal.

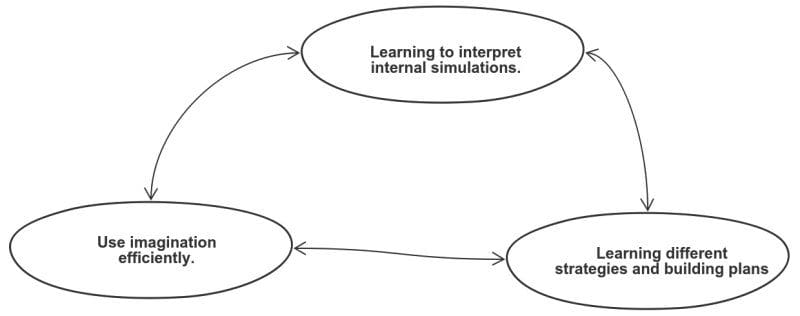

How can we define imagination in the context of AI agents? DeepMind defines imagination-augmented agents as systems that include the following characteristics:

Deep reinforcement learning (RL) is often seen as the hallmark of imagination-augmented AI agents, as it attempts to correlate observations with actions. However, deep RL systems typically require large amounts of training, which results in knowledge tailored to a very specific set of tasks in an environment. The DeepMind paper proposes an alternative to traditional models: agents that use environment simulations and learn to “interpret” the imperfect predictions those simulations produce. The idea is to have parallel models that use simulations to extract useful knowledge that can be used in the core model. Just as we often judge the level of imagination of an individual (“that guy has no imagination”), we can see the imagination models as an augmented capability of deep learning programs.

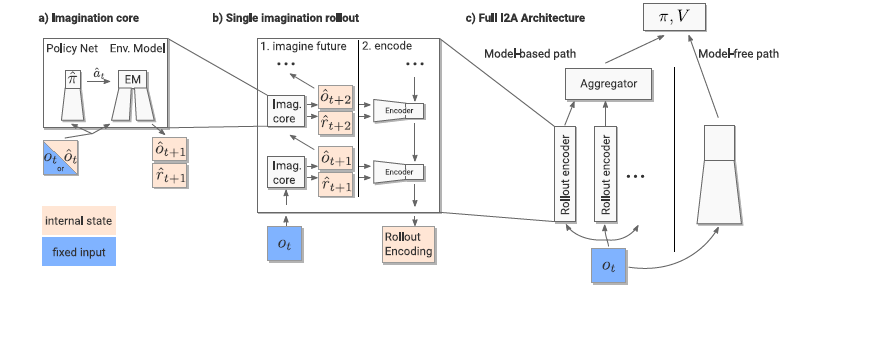

The I2A Architecture

To enable “imagination” in deep learning agents, the DeepMind team relied on a clever neural network architecture known as I2A. The key element of the I2A architecture is a component called the Imagination Core, which uses an environment model to make predictions about the future state of the environment given information about its current state. Given a past state and a current action, the environment model predicts the next state and any number of signals from the environment. The I2A architecture rolls out the environment model over multiple time steps into the future by initializing the imagined trajectory with the real observation at the present time and subsequently feeding simulated observations back into the model. The actions produced in each rollout help to define the agent policy that is then used by the imagination core module.
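The rollout loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not DeepMind's implementation: `toy_env_model`, `toy_rollout_policy`, and `imagine_rollout` are hypothetical names, the "environment" is a made-up number line with a reward at position 3, and both the model and the policy are hand-written functions standing in for learned networks.

```python
from typing import Callable, List, Tuple

State = int
Action = int
Step = Tuple[State, float]  # (imagined next state, imagined reward)


def toy_env_model(state: State, action: Action) -> Step:
    """Hypothetical stand-in for a learned environment model.

    Predicts the next state and one extra signal (a reward).
    """
    next_state = state + action
    reward = 1.0 if next_state == 3 else 0.0
    return next_state, reward


def toy_rollout_policy(state: State) -> Action:
    """Hypothetical rollout policy: step toward position 3."""
    return 1 if state < 3 else -1


def imagine_rollout(
    observation: State,
    first_action: Action,
    env_model: Callable[[State, Action], Step],
    rollout_policy: Callable[[State], Action],
    depth: int,
) -> List[Step]:
    """Roll the model forward `depth` steps from a real observation."""
    trajectory: List[Step] = []
    state, action = observation, first_action  # start from the real observation
    for _ in range(depth):
        state, reward = env_model(state, action)  # imagined, not real
        trajectory.append((state, reward))
        action = rollout_policy(state)  # feed the simulated state back in
    return trajectory


# One imagined trajectory per candidate first action.
rollouts = {
    a: imagine_rollout(0, a, toy_env_model, toy_rollout_policy, depth=3)
    for a in (-1, 1)
}
```

Starting from position 0, only the rollout that begins with action `1` reaches the rewarding state within three imagined steps, which is the kind of signal the rest of the agent can exploit.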

Another key element of the I2A architecture is the set of rollout encoders, which are responsible for “interpreting” the information produced by the imagination core, extracting any information useful for the agent’s decision, or even ignoring it when necessary.
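To make the encoder's role concrete, here is a minimal sketch, assuming each imagined trajectory is a non-empty list of `(state, reward)` pairs. The names `encode_rollout` and `aggregate` are hypothetical, and the hand-written summary (a discounted return plus the final state) is a swapped-in simplification: in I2A the encoder is a learned network, so it can also learn to discount or ignore rollouts that come from an inaccurate model.

```python
from typing import List, Sequence, Tuple

Step = Tuple[int, float]  # (imagined state, imagined reward)


def encode_rollout(
    trajectory: Sequence[Step], discount: float = 0.9
) -> Tuple[float, float]:
    """Summarize one imagined trajectory as a fixed-size code.

    Assumes a non-empty trajectory. The steps are read from last to
    first, accumulating a discounted return along the way.
    """
    discounted_return = 0.0
    for _state, reward in reversed(trajectory):
        discounted_return = reward + discount * discounted_return
    final_state = float(trajectory[-1][0])
    return discounted_return, final_state


def aggregate(codes: Sequence[Tuple[float, float]]) -> List[float]:
    """Concatenate the per-rollout codes into one feature vector."""
    return [value for code in codes for value in code]


# Two made-up imagined trajectories, one per candidate action.
imagined = [
    [(-1, 0.0), (0, 0.0), (1, 0.0)],
    [(1, 0.0), (2, 0.0), (3, 1.0)],
]
imagination_code = aggregate([encode_rollout(t) for t in imagined])
```

The resulting vector is what a downstream policy would consume alongside its ordinary, model-free features.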

Playing Sokoban

To see the I2A model in action, the DeepMind team created an implementation that tried to play the famous Sokoban game. Sokoban is a classic planning problem in which the agent has to push a number of boxes onto given target locations. Because boxes can only be pushed (as opposed to pulled), many moves are irreversible, and mistakes can render the puzzle unsolvable. A human player is thus forced to plan moves ahead of time. The imagination-augmented models showed impressive abilities to learn from imperfect environments like Sokoban, as shown in the following video:

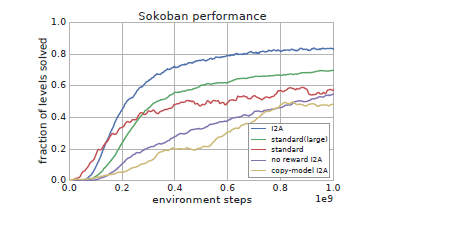
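The reason a single Sokoban move can be irreversible is easy to see in code. The sketch below is a hypothetical, minimal version of the push rule on a small walled room, not the environment used in the paper; `push` and `stuck_in_corner` are illustrative names, and positions are `(row, column)` pairs.

```python
from typing import FrozenSet, Optional, Tuple

Pos = Tuple[int, int]


def push(
    player: Pos, boxes: FrozenSet[Pos], walls: FrozenSet[Pos], move: Pos
) -> Optional[Tuple[Pos, FrozenSet[Pos]]]:
    """Apply one move; return the new (player, boxes), or None if blocked."""
    target = (player[0] + move[0], player[1] + move[1])
    if target in walls:
        return None
    if target in boxes:
        beyond = (target[0] + move[0], target[1] + move[1])
        if beyond in walls or beyond in boxes:
            return None  # a box cannot be pushed into a wall or another box
        boxes = (boxes - {target}) | {beyond}
    return target, boxes


def stuck_in_corner(box: Pos, walls: FrozenSet[Pos]) -> bool:
    """A box wedged into a wall corner can never be pushed again."""
    r, c = box
    blocked_vertically = (r - 1, c) in walls or (r + 1, c) in walls
    blocked_horizontally = (r, c - 1) in walls or (r, c + 1) in walls
    return blocked_vertically and blocked_horizontally


# A 5x5 grid whose border cells are walls, leaving a 3x3 room.
walls = frozenset(
    (r, c) for r in range(5) for c in range(5) if r in (0, 4) or c in (0, 4)
)
# The player stands below the box and pushes it up into the top-right corner.
after = push((3, 3), frozenset({(2, 3)}), walls, (-1, 0))
```

After that one push the box sits in a corner, so unless that corner happens to be a target the puzzle can no longer be solved. This is why looking ahead before acting matters so much in this game.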

The DeepMind team benchmarked the I2A model against more traditional deep RL techniques, and the results were remarkable. I2A solved roughly 85% of the Sokoban levels, vastly outperforming the other strategies.

One of the most impressive takeaways from the Sokoban experiments was the ability of imagination-augmented agents to imagine trajectories in potentially imperfect environment models and ignore inaccurate information. This is particularly relevant given the growing number of scenarios that require AI agents to operate with imperfect information and limited data.

Imagination is one of those key capabilities that can open the door to a new generation of AI agents. Techniques like I2A are still in a very nascent state but can become a key building block of reinforcement learning architectures in which agents are able not only to learn from the present but also to “imagine” the future.

Original. Reposted with permission.
