What is Adversarial Neural Cryptography?

The novel approach combines GANs and cryptography in a single, powerful security method.


Sharing data securely is one of the biggest roadblocks to the mainstream adoption of artificial intelligence (AI) solutions. AI is as much about intelligence as it is about data, and large datasets are the privilege of a few companies in this world. As a result, companies tend to be incredibly protective of their data assets and very conservative when it comes to sharing them with other people. At the same time, data scientists need access to large labeled datasets in order to validate their models. That constant friction between privacy and intelligence has become a governing dynamic in modern AI applications.

For any company, it would be immensely valuable to give a large group of data scientists and researchers access to its data so that they can extract intelligence from it, compare and evaluate models, and arrive at the right solution. However, how can the company guarantee that its data will be protected, that the interests of its customers will be preserved, or that some unscrupulous data scientist won’t share that intelligence with the competition? When confronted with that issue, many companies resort to mechanisms for anonymizing their datasets, but that is hardly a solution in many scenarios.

Why Doesn’t Anonymization Work?

Data anonymization seems to be the obvious solution to the data privacy challenge. After all, if we can obfuscate the sensitive aspects of a dataset, we can achieve a certain level of privacy. While the argument is theoretically sound, the problem with anonymization is that it doesn’t prevent inference. Take the scenario in which a hedge fund shares a dataset of proprietary research indicators for a stock (let’s say Apple) correlated with its stock price. Even if the name Apple is obfuscated in the dataset, any clever data scientist could figure it out by making some basic inferences. Typically, the rule of thumb is:

“If the other attributes in the dataset can serve as a predictor of the obfuscated data, then anonymization is not a good idea.”
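To see how little it takes, here is a minimal Python sketch of that kind of inference. Everything in it is hypothetical: the tickers, the prices, and the `reidentify` helper are invented for illustration. The point is only that a column left in the clear (the price history) can act as a predictor for the column that was hidden (the ticker).

```python
# Hypothetical public price histories (all numbers invented for illustration).
public_prices = {
    "AAPL": [150.0, 152.5, 151.0, 155.2, 157.8],
    "MSFT": [300.0, 298.4, 305.1, 307.9, 310.3],
    "GOOG": [120.0, 121.7, 119.5, 123.3, 125.0],
}

# "Anonymized" dataset shared by the fund: the ticker is hidden, but the
# price column that accompanies the proprietary indicator is left intact.
anonymized = {
    "ticker": "STOCK_X",
    "indicator": [0.12, 0.40, -0.05, 0.33, 0.51],
    "price": [150.0, 152.5, 151.0, 155.2, 157.8],
}


def total_abs_diff(a, b):
    """Sum of absolute differences between two equally long series."""
    return sum(abs(x - y) for x, y in zip(a, b))


def reidentify(row, candidates):
    """Return the public ticker whose price history is closest to the row's."""
    return min(candidates, key=lambda t: total_abs_diff(row["price"], candidates[t]))


guess = reidentify(anonymized, public_prices)
print(guess)
```

A real attacker would face noisier data and would likely use correlation rather than exact matching, but the weakness is the same: hiding the name does nothing if the remaining attributes give it away.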

Homomorphic Encryption

Homomorphic encryption represents one of the biggest breakthroughs in the cryptography space and one that is likely to become the foundation of many AI applications in the near future. Conceptually, the term homomorphic encryption describes a class of encryption algorithms that satisfy the homomorphic property: certain operations, such as addition, can be carried out directly on ciphertexts so that, upon decryption, the result is the same as if the operations had been performed on the original messages. In the context of AI, homomorphic encryption could enable data scientists to perform operations on encrypted data that yield the same results as if they were operating on clear data.
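The homomorphic property is easier to appreciate with a concrete scheme. The sketch below is a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields a ciphertext of the sum of the plaintexts. The primes are deliberately tiny and the function names are my own, so treat it as an illustration of the property only, not as usable cryptography.

```python
import math
import random


def keygen(p=293, q=433):
    """Build a toy Paillier key pair from two small primes (insecure sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1
    # mu is the inverse of L(g^lam mod n^2) modulo n, where L(x) = (x - 1) // n.
    # pow(x, -1, n) computes a modular inverse (Python 3.8+).
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (lam, mu)


def encrypt(pub, m, rng=random):
    """Encrypt integer m (0 <= m < n) with fresh randomness r."""
    n, g = pub
    n2 = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(pub, priv, c):
    """Recover the plaintext from ciphertext c."""
    n, _ = pub
    lam, mu = priv
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


pub, priv = keygen()
c1 = encrypt(pub, 15)
c2 = encrypt(pub, 27)

# Homomorphic addition: multiply the ciphertexts, never touching the plaintexts.
c_sum = (c1 * c2) % (pub[0] ** 2)
print(decrypt(pub, priv, c_sum))  # 15 + 27, computed on encrypted data
```

Whoever computes `c_sum` never sees 15 or 27, yet the key holder decrypts their sum. Fully homomorphic schemes extend this idea to richer sets of operations.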

The main issue with homomorphic encryption is that the technology is still too computationally expensive to be considered for mainstream applications. Basic homomorphic encryption techniques can turn 1 MB of data into 16 GB, which makes them completely impractical in AI scenarios. Even more relevant is the fact that homomorphic encryption techniques (like most cryptographic algorithms) are generally not differentiable, which is a requirement for algorithms such as stochastic gradient descent (SGD), the main optimization technique for deep neural networks.

Recently, a few companies in the emerging space of decentralized AI have claimed that they rely on homomorphic encryption as part of their blockchain-based platforms. I suspect that most of those claims are purely theoretical because the practical applications of homomorphic encryption are still very limited.

GAN Cryptography

Somewhere between anonymization methods and homomorphic encryption, we find a novel technique pioneered by Google that uses adversarial neural networks to protect information from other neural models. The research paper detailing this technique was published at the end of 2016 under the title “Learning to Protect Communications with Adversarial Neural Cryptography” and it is easily one of the most fascinating AI papers I’ve read in the last two years.

Wait, we are talking about using neural networks for cryptography? Traditionally, neural networks have been considered to be very bad at cryptographic operations as they have a hard time performing a simple XOR computation. While that’s true, it turns out that neural networks can learn to protect the confidentiality of their data from other neural networks: they discover forms of encryption and decryption, without being taught specific algorithms for these purposes.

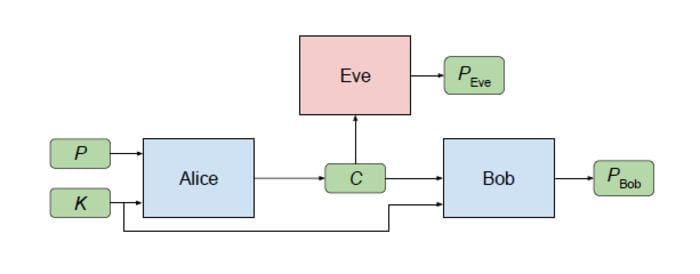

The setup for the GAN cryptography scenario involved three parties: Alice, Bob, and Eve. Typically, Alice and Bob wish to communicate securely, and Eve wishes to eavesdrop on their communications. Thus, the desired security property is secrecy (not integrity), and the adversary is a “passive attacker” that can intercept communications but that is otherwise quite limited.

Source: https://arxiv.org/abs/1610.06918

In the scenario depicted above, Alice wishes to send a single confidential message P to Bob. The message P is an input to Alice. When Alice processes this input, it produces an output C. (“P” stands for “plaintext” and “C” stands for “ciphertext”.) Both Bob and Eve receive C, process it, and attempt to recover P. Let’s call the results of those computations P_Bob and P_Eve, respectively. Alice and Bob have an advantage over Eve: they share a secret key K, which is used as an additional input to both Alice and Bob.
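To make the data flow concrete, here is a small Python sketch of who receives which inputs. Be aware of the big assumption: in the paper, Alice, Bob, and Eve are neural networks that learn their behavior, whereas the hand-written XOR functions below are only stand-ins I picked so the example runs. The names `alice`, `bob`, and `eve` and the 16-bit length are illustrative choices.

```python
import random

N = 16  # message and key length in bits (illustrative choice)


def random_bits(n, rng):
    """Return a list of n random bits."""
    return [rng.randint(0, 1) for _ in range(n)]


def alice(p, k):
    """Stand-in for Alice: maps plaintext P and key K to ciphertext C."""
    return [pi ^ ki for pi, ki in zip(p, k)]


def bob(c, k):
    """Stand-in for Bob: recovers P_Bob from C using the shared key K."""
    return [ci ^ ki for ci, ki in zip(c, k)]


def eve(c):
    """Stand-in for Eve: sees only C, so here she simply guesses P_Eve = C."""
    return list(c)


rng = random.Random(0)
P = random_bits(N, rng)
K = random_bits(N, rng)

C = alice(P, K)      # Alice sees P and K
P_bob = bob(C, K)    # Bob sees C and K
P_eve = eve(C)       # Eve sees only C

bob_errors = sum(a != b for a, b in zip(P, P_bob))
eve_errors = sum(a != b for a, b in zip(P, P_eve))
print("Bob wrong bits:", bob_errors)
print("Eve wrong bits:", eve_errors)
```

Bob recovers every bit because he holds K, while this naive Eve is wrong wherever the key bit is 1. The interesting part of the paper is that the networks have to discover a scheme with this kind of behavior on their own.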

Informally, the objectives of the participants are as follows. Eve’s goal is simple: to reconstruct P accurately (in other words, to minimize the error between P and P_Eve). Alice and Bob want to communicate clearly (to minimize the error between P and P_Bob), but also to hide their communication from Eve.
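Those objectives translate naturally into loss functions. The following sketch is my own simplified reading, not the exact training code: it measures reconstruction error as an L1 distance and combines the two goals of Alice and Bob by subtracting Eve's error from Bob's error. The -1/+1 bit encoding and the function names are assumptions for this example, and the loss actually used during training in the paper may be shaped differently.

```python
def l1_distance(p, p_guess):
    """L1 distance between the true plaintext and a reconstruction."""
    return sum(abs(a - b) for a, b in zip(p, p_guess))


def eve_loss(p, p_eve):
    """Eve wants her reconstruction to be as close to P as possible."""
    return l1_distance(p, p_eve)


def bob_loss(p, p_bob):
    """Bob wants his reconstruction to be as close to P as possible."""
    return l1_distance(p, p_bob)


def alice_bob_loss(p, p_bob, p_eve):
    """Joint objective: low when Bob is accurate and Eve is not."""
    return bob_loss(p, p_bob) - eve_loss(p, p_eve)


# Bits encoded as -1.0 / +1.0 (an assumption for this example).
P = [1.0, -1.0, 1.0, 1.0]
P_bob = [1.0, -1.0, 1.0, 1.0]   # Bob reconstructs every bit
P_eve = [1.0, 1.0, -1.0, 1.0]   # Eve gets two bits wrong

print(bob_loss(P, P_bob))
print(eve_loss(P, P_eve))
print(alice_bob_loss(P, P_bob, P_eve))
```

Because Eve minimizes her own loss while Alice and Bob are rewarded when that loss is high, the two sides pull in opposite directions, which is what makes the setup adversarial.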

Using generative adversarial network techniques, Alice and Bob were trained jointly to communicate successfully while learning to defeat Eve. Here is the kicker: Alice and Bob have no predefined notion of the cryptographic algorithms they are going to use to accomplish their goal, nor of the techniques Eve will use. Following GAN principles, Alice and Bob are trained to defeat the best version of Eve rather than a fixed Eve.

The results from the GAN cryptography experiments were remarkable. As you can see in the following chart, somewhere around 8,000 training steps both Bob and Eve start to be able to reconstruct the original message. Somewhere around 10,000 training steps, the Alice and Bob networks seem to figure this out and Eve’s error rate climbs again. In other words, Alice and Bob were able to learn from Eve’s behavior and protect the communication against the attack while still improving its efficiency.

Source: https://arxiv.org/abs/1610.06918

Going back to AI applications, GAN cryptography can be used to exchange information between a company and neural networks while maintaining high levels of privacy. What if neural networks could learn not only how to protect information but also what to protect, given the objectives of the adversary? That would be an even more viable solution for AI applications, as models could learn to protect information selectively, keeping some elements of the dataset unencrypted while preventing any form of inference that would reveal the sensitive data.

The Google team adapted the GAN cryptography architecture into a model in which Alice and Bob still share a key, but Alice now receives A, B, and C and produces D-public in addition to a ciphertext. Both Bob and Eve have access to Alice’s outputs: Bob uses them to produce an improved estimate of D, while Eve attempts to recover C. The goal is to demonstrate that adversarial training permits approximating D without revealing C, and that this approximation can be combined with encrypted information and a key to obtain a better approximation of D.

To understand whether the system is learning to hide information properly, the researchers used a separate evaluator called “Blind Eve”, which is aware only of the distribution of C. Blind Eve tries to guess C relying solely on this baseline information, whereas the real Eve also knows the intermediate output (D-public) and the ciphertext. If Eve’s reconstruction error becomes equal to that of Blind Eve, that’s a sign that Eve is not successfully extracting information from the public estimate and the ciphertext. And that’s exactly what happened. After a few training steps, Eve’s advantage over Blind Eve becomes negligible, indicating that Eve is not able to reconstruct any more information about C than would be possible by simply knowing the distribution of values of C.
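The Blind Eve comparison boils down to simple arithmetic, sketched below in Python. All the data here is synthetic and the helper names are mine: I draw hypothetical values of C, let Blind Eve answer with the median (the best constant guess under absolute error), and then compare two made-up Eves, one that leaks information and one that has been defeated.

```python
import random
import statistics


def mean_abs_error(targets, guesses):
    """Average absolute error between targets and guesses."""
    return sum(abs(t - g) for t, g in zip(targets, guesses)) / len(targets)


def eve_advantage(targets, eve_guesses, blind_guess):
    """Blind Eve's error minus Eve's error; near zero means Eve learned nothing."""
    blind_error = mean_abs_error(targets, [blind_guess] * len(targets))
    return blind_error - mean_abs_error(targets, eve_guesses)


rng = random.Random(1)
c_values = [rng.uniform(-1.0, 1.0) for _ in range(1000)]  # hypothetical C samples

# Blind Eve knows only the distribution of C, so she always answers the median.
blind_guess = statistics.median(c_values)

# A hypothetical leaky system: Eve's guesses track C up to small noise.
leaky_eve = [c + rng.gauss(0.0, 0.1) for c in c_values]

# A hypothetical defeated Eve: she can do no better than the constant guess.
defeated_eve = [blind_guess] * len(c_values)

leaky_advantage = eve_advantage(c_values, leaky_eve, blind_guess)
defeated_advantage = eve_advantage(c_values, defeated_eve, blind_guess)
print("advantage when information leaks:", round(leaky_advantage, 3))
print("advantage when Eve is defeated:", round(defeated_advantage, 3))
```

A clearly positive advantage means the ciphertext or the public estimate is leaking something about C. An advantage near zero is the outcome the researchers were looking for.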

Source: https://arxiv.org/abs/1610.06918

GAN cryptography is a relatively unknown technique that could be pivotal in mainstream AI applications. Conceptually, GAN cryptography can allow companies to share datasets with data scientists without having to disclose the sensitive data in them. At the same time, neural models can perform computations on GAN-encrypted data without having to fully decrypt it.

Original. Reposted with permission.
